For more than 60 years, fusion scientists have tried to use “magnetic bottles” of various shapes and sizes to confine extremely hot plasmas, with the goal of producing practical fusion energy. But turbulence in the plasma has, so far, confounded researchers’ ability to efficiently contain the intense heat within the core of the fusion device, reducing performance. Now, scientists have used one of the world’s largest supercomputers to reveal the complex interplay between two types of turbulence known to occur in fusion plasmas, paving the way for improved fusion reactor design.

Years of careful research have shown that fusion devices are plagued by plasma turbulence. The turbulence quickly pushes heat from the hot fusion core to the edge, cooling the plasma in the process and reducing the amount of fusion energy produced.

While turbulence has been identified as the culprit, the heat losses measured in many fusion devices are still commonly higher than those predicted by scientists’ leading theories of turbulence. Thus, even after more than half a century of research, the origin of this “anomalous” heat loss in experimental fusion plasmas has remained a mystery.

To tackle this problem, scientists had to create a model that captured the different types of turbulence known to exist in fusion plasmas. This turbulence can roughly be grouped into two categories: long-wavelength turbulence and short-wavelength turbulence.

Most prior research has assumed a dominant role for long-wavelength turbulence, often neglecting short-wavelength turbulence altogether. Although scientists were aware they were missing the contributions of the small eddies, simulating all of the turbulence together was too challenging to undertake… until now.

Using one of the world’s largest supercomputers (the NERSC Edison system) and experimental data obtained from the Alcator C-Mod tokamak, scientists from the University of California, San Diego and the Massachusetts Institute of Technology recently performed the most physically comprehensive simulations of plasma turbulence to date.

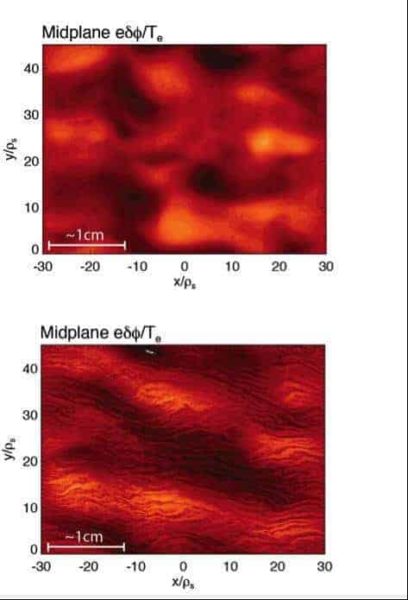

These simulations capture the spatial and temporal dynamics of long- and short-wavelength turbulence simultaneously, revealing never-before-observed physical phenomena. Contrary to many proposed theories, long-wavelength turbulence was found to coexist with short-wavelength turbulence, in the form of finger-like structures known as “streamers” (Figure 1).

In many experimental conditions, the large-scale turbulent eddies were found to interact strongly with the short-wavelength turbulence, transferring energy back and forth. Most significantly, these simulations demonstrated that interactions between long- and short-wavelength turbulence can increase heat losses tenfold above the predictions of the standard model, matching a wide variety of experimental measurements and likely explaining the mystery of “anomalous” heat loss in plasmas.

Pushing the limits of supercomputing capabilities has changed scientists’ understanding of how turbulence drives heat out of fusion plasmas, and may help explain the 50-year-old mystery of “anomalous” heat loss. The study, which has been submitted to the journal Nuclear Fusion, required approximately 100 million CPU hours to perform. For comparison, that is roughly equivalent to running the latest MacBook Pro continuously for the next 3,000 years.
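Simple arithmetic can sanity-check the 3,000-year comparison. The article does not say what laptop configuration it had in mind, so the sketch below assumes a laptop running four CPU cores at full load around the clock. That core count and the constant names are illustrative only.

```python
# Back-of-envelope check of the "3,000 years" comparison.
# ASSUMPTION: the laptop has 4 CPU cores, all busy 24 hours a day.
CPU_HOURS = 100e6              # approximate cost of the simulations (from the article)
LAPTOP_CORES = 4               # hypothetical core count, not stated in the article
HOURS_PER_YEAR = 365.25 * 24   # 8,766 hours in an average year

# Each year of continuous running delivers LAPTOP_CORES * HOURS_PER_YEAR CPU hours.
years = CPU_HOURS / (LAPTOP_CORES * HOURS_PER_YEAR)
print(f"{years:,.0f} years")
```

Under this assumption the result comes out to roughly 2,850 years, consistent with the article's "approximately 3,000 years." A laptop with more cores would shorten the figure proportionally.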

Ultimately, these results may be used to inform the design of fusion reactors, allowing for improved performance, and hopefully pushing us closer to the goal of practical fusion energy.