When you take a photo on a cloudy day with your average digital camera, the sensor detects trillions of photons. Photons, the elementary particles of light, strike different parts of the sensor in different quantities to form an image, with the standard four-by-six-inch photo boasting 1,200-by-1,800 pixels. Anyone who has attempted to take a photo at night or at a concert knows how difficult it can be to render a clear image in low light. However, in a study published in June 2016 in Nature Communications, one BU researcher has figured out a way to render an image while also measuring distances to the scene using about one photon per pixel.

“It’s natural to think of light intensity as a continuous quantity, but when you get down to very small amounts of light, then the underlying quantum nature of light becomes significant,” says Vivek Goyal, an associate professor of electrical and computer engineering at Boston University’s College of Engineering. “When you use the right kind of mathematical modeling for the detection of individual photons, you can make the leap to forming images of useful quality from extremely small amounts of detected light.”
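The quantum effect Goyal describes can be illustrated with a small simulation. The sketch below assumes photon arrivals follow Poisson statistics, the standard model for photon counting; the function names and the photon levels are illustrative and are not taken from the study. It shows that the relative fluctuation in the count is large at about one photon per pixel and small at a hundred.

```python
import math
import random


def poisson_sample(mean, rng):
    """Draw one Poisson-distributed photon count (Knuth's method, fine for small means)."""
    threshold = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def relative_noise(mean, trials=10000, seed=0):
    """Empirical standard deviation divided by the average count at a given light level."""
    rng = random.Random(seed)
    counts = [poisson_sample(mean, rng) for _ in range(trials)]
    average = sum(counts) / trials
    variance = sum((c - average) ** 2 for c in counts) / trials
    return math.sqrt(variance) / average


if __name__ == "__main__":
    # Hypothetical light levels: about one photon per pixel versus a hundred.
    for level in (1, 100):
        print(level, round(relative_noise(level), 3))
```

For Poisson counts the relative noise is roughly one over the square root of the mean, which is why treating intensity as a continuous quantity stops working at very low light.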

Goyal’s study, “Photon-Efficient Imaging with a Single-Photon Camera,” was a collaboration with researchers at MIT and Politecnico di Milano. Funded by the National Science Foundation, it combined new image formation algorithms with the use of a single-photon camera to produce images from about one photon per pixel. The single-photon avalanche diode (SPAD) camera consisted of an array of 1,024 light-detecting elements, allowing the camera to make multiple measurements simultaneously to enable quicker, more efficient data acquisition.

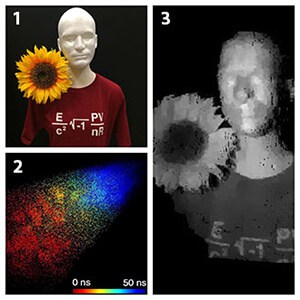

The experimental setup used infrared laser pulses to illuminate the scene the research team wanted to capture. The scene was also lit by an ordinary incandescent light bulb, which reproduced the strong competing light source that could be present in a longer-range scenario. Both the uninformative background light and the laser light reflected back to the SPAD camera, which recorded the raw photon data with each pulse of the laser. A computer algorithm analyzed the raw data and used it to form an image of the scene. The result is a reconstructed image, cobbled together from about one detected photon per pixel.
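The idea of separating laser returns from background light and turning arrival times into distance can be sketched in a few lines. This is a toy illustration, not the study's algorithm: it uses many detections for a single pixel, whereas the study works from about one photon per pixel with its own statistical methods. The function names, pulse period, timing jitter, and photon counts are all assumptions made for the example.

```python
import random

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def simulate_detections(true_depth_m, n_signal, n_background,
                        period_s=100e-9, jitter_s=0.2e-9, seed=0):
    """Return sorted photon timestamps for one pixel (all parameters hypothetical).

    Laser photons cluster around the round-trip time to the scene;
    background photons arrive uniformly across the pulse period.
    """
    rng = random.Random(seed)
    round_trip = 2.0 * true_depth_m / SPEED_OF_LIGHT
    signal = [rng.gauss(round_trip, jitter_s) for _ in range(n_signal)]
    background = [rng.uniform(0.0, period_s) for _ in range(n_background)]
    return sorted(signal + background)


def estimate_depth(timestamps, window_s=1e-9):
    """Estimate depth from the densest time window of sorted timestamps."""
    if not timestamps:
        raise ValueError("need at least one detection")
    best_start, best_count = 0, 0
    end = 0
    for start in range(len(timestamps)):
        # Extend the window while detections stay within window_s of the first one.
        while end < len(timestamps) and timestamps[end] - timestamps[start] <= window_s:
            end += 1
        if end - start > best_count:
            best_start, best_count = start, end - start
    cluster = timestamps[best_start:best_start + best_count]
    mean_time = sum(cluster) / len(cluster)
    # Halve the round trip: the light travels to the scene and back.
    return SPEED_OF_LIGHT * mean_time / 2.0


if __name__ == "__main__":
    stamps = simulate_detections(true_depth_m=3.0, n_signal=10, n_background=10)
    print(round(estimate_depth(stamps), 2))
```

The densest-window step stands in for background rejection: laser returns bunch together in time, while bulb light is spread evenly, so the tightest cluster most likely marks the true round trip.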

The method introduced by Goyal’s team follows their earlier demonstration, the first of its kind, of combined reflectivity and depth imaging from a single photon per pixel. The earlier work used a single detector element with much finer time resolution. The current work shows that an array of single-photon detectors, measuring in parallel, can form such an image more efficiently.

“We are trying to make low-light imaging systems more practical, by combining SPAD camera hardware with novel statistical algorithms,” says Dongeek Shin, the lead author of the publication and a PhD student of Goyal at MIT. “Achieving this quality of imaging with very few detected photons while using a SPAD camera had never been done before, so it’s a new accomplishment in having both extreme photon efficiency and fast, parallel acquisition with an array.”

Though single-photon detection technology may not be common in consumer products any time soon, Goyal thinks the work opens exciting possibilities in long-range remote sensing, particularly for mapping and military uses, as well as in applications such as self-driving cars, where speed of acquisition is critical. Goyal and his collaborators plan to continue improving their methods, with a number of future studies in the works to address issues that came up during experimentation, such as reducing the amount of “noise,” or grainy visual distortion.

“Being able to handle more noise will help us increase range and allow us to work in daylight conditions,” says Goyal. “We are also looking at other kinds of imaging we can do with a small number of detected particles, like fluorescence imaging and various types of microscopy.”