Long relegated to scientific niches like astronomy and microscopy, sensors that catch the smallest possible amount of light, a single photon at a time, could be better than typical digital cameras at capturing everyday memories in challenging environments.

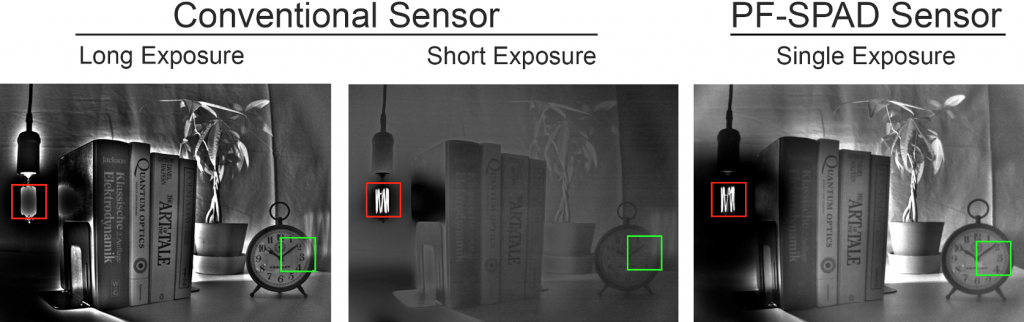

In a dark room or a fast-moving scene, conventional cameras face a choice: a short exposure that freezes motion but comes out dark, or a longer exposure that gathers more light but blurs anything that moves.

“That’s always been a fundamental trade-off in any kind of photography,” says Mohit Gupta, a University of Wisconsin–Madison computer sciences professor. “But we are working on overcoming that trade-off with a different kind of sensor.”

Gupta’s lab in the School of Computing, Data and Information Sciences is collaborating with Professor Edoardo Charbon at the École Polytechnique Fédérale de Lausanne in Switzerland, an expert in packing single-photon avalanche diodes (SPADs), a type of single-photon sensor, into ever-larger arrays. The researchers are using SPADs for what they call quanta burst photography: capturing many images in a rapid burst, then processing them together to produce one good picture of a poorly lit or fast-moving subject. They will present their work at the SIGGRAPH 2020 conference, tentatively scheduled for August.
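The align-and-merge idea behind burst processing can be illustrated with a toy example. The Python sketch below is not the researchers' method: it uses ordinary noisy 1-D frames rather than single-photon frames, assumes the whole scene shifts by a whole number of pixels between frames, and the function names `estimate_shift` and `align_and_merge` are hypothetical. It shows why aligning before combining matters: a plain average smears the moving bar, much like a long exposure, while the aligned average keeps its edges sharp and still reduces noise.

```python
import numpy as np

def estimate_shift(ref, frame, max_shift):
    """Return the integer shift s for which np.roll(frame, -s) best matches ref,
    found by brute-force search over the sum of squared differences."""
    best_s, best_err = 0, np.inf
    for s in range(-max_shift, max_shift + 1):
        err = np.sum((np.roll(frame, -s) - ref) ** 2)
        if err < best_err:
            best_s, best_err = s, err
    return best_s

def align_and_merge(frames, max_shift=8):
    """Shift every frame to line up with the first one, then average them."""
    ref = frames[0]
    aligned = [np.roll(f, -estimate_shift(ref, f, max_shift)) for f in frames]
    return np.mean(aligned, axis=0)

# Hypothetical 1-D scene: a bright bar that moves one pixel per frame.
rng = np.random.default_rng(0)
scene = np.zeros(64)
scene[20:30] = 1.0
frames = [np.roll(scene, t) + rng.normal(0.0, 0.1, scene.shape) for t in range(8)]

naive = np.mean(frames, axis=0)   # plain average: the bar's edges smear out
merged = align_and_merge(frames)  # aligned average: sharp edges, less noise
```

Real scenes rarely move as one rigid block, so a practical pipeline would have to estimate motion locally and cope with far noisier single-photon data; the global shift here is only a simplifying assumption.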

“You can think of each pixel of a camera as a light bucket which collects photons. Typical camera pixels need a lot of photons to make a reasonable image,” Gupta says. “But with a camera made from single-photon sensors, the bucket is more a collection of teaspoons that fill up as soon as they detect a minimum quantity of light.”
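The teaspoon analogy can be made concrete with a standard idealized model of a single-photon pixel, used here as an assumption: photons arrive at random (Poisson) times, every photon is detected, and there are no false counts. In each short frame the pixel reports 1 if at least one photon arrived and 0 otherwise, so the fraction of frames that fire, `1 - exp(-flux * tau)`, can be inverted to estimate brightness. The helper names and parameter values below are hypothetical, and this is a sketch rather than the researchers' processing.

```python
import numpy as np

def simulate_binary_frames(flux, tau, n_frames, rng):
    """Simulate binary frames from an idealized single-photon pixel array.

    flux: photons per second reaching each pixel (scalar or array).
    tau: duration of one binary frame, in seconds.
    Assumes Poisson arrivals, perfect detection efficiency, no dark counts.
    """
    flux = np.asarray(flux, dtype=float)
    # Photons arriving at each pixel in each frame follow a Poisson distribution.
    counts = rng.poisson(flux * tau, size=(n_frames,) + flux.shape)
    # The pixel only reports whether at least one photon arrived: 1 or 0.
    return (counts > 0).astype(np.uint8)

def estimate_flux(frames, tau):
    """Maximum-likelihood flux estimate from a stack of binary frames."""
    n = frames.shape[0]
    ones = frames.sum(axis=0)
    # Guard against log(0) for pixels that fired in every frame (saturated).
    frac = np.minimum(ones, n - 1) / n
    # P(pixel fires) = 1 - exp(-flux * tau); invert that relationship.
    return -np.log1p(-frac) / tau

rng = np.random.default_rng(1)
tau = 1e-5                              # assumed 10-microsecond frames
flux = np.array([1e3, 1e4, 1e5])        # hypothetical dim, medium, bright pixels
frames = simulate_binary_frames(flux, tau, 100_000, rng)

estimate = estimate_flux(frames, tau)   # should land close to [1e3, 1e4, 1e5]
naive = frames.mean(axis=0) / tau       # simple counting underestimates bright pixels
```

Simply counting the ones treats every firing as a single photon, so for the brightest pixel it reports roughly 63,000 photons per second instead of 100,000. Inverting the exponential instead compresses bright light gracefully rather than clipping it, which is one reason this kind of model can cope with very bright and very dark regions at once.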

SPADs lend a camera two benefits: great sensitivity and remarkable speed. Theoretically, the single-photon teaspoons can catch light more than a million times a second. The SwissSPAD array used in the burst photography work is fast enough to record 100,000 single-photon frames per second.

Too much light is a problem, too, for the CCD (charge-coupled device) and CMOS (complementary metal-oxide-semiconductor) sensors used in smartphones and digital cameras. When more light strikes a pixel than it can hold, the pixel saturates, producing bright, washed-out white patches in images. And the analog step of converting the collected light into a digital value introduces noise that can obscure details and muddle the smooth transition from light features to dark.

“Single-photon sensors, they kind of bypass this noisy, intermediate step of analog conversion,” Gupta says. “As the photon comes in, they directly sense this discrete quantity, thereby avoiding the extra noise.”

Images the researchers have made can capture the edge of a searing lightbulb filament while also clearly revealing the details of lettering on a shadowed plaque.

“The result is good image quality in low-light, with reduced motion blur, as well as a wide dynamic range,” says Gupta, whose work is supported by the Defense Advanced Research Projects Agency. “We have had good results even when the brightest spot in view is getting 100,000 times as much light as the darkest.”
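For a sense of scale, the 100,000-to-1 brightness ratio Gupta mentions can be converted into the units photographers and sensor engineers use. This short Python snippet assumes the common conventions: one photographic stop is a doubling of light, and image-sensor dynamic range in decibels is 20 × log10 of the ratio. The function name is hypothetical.

```python
import math

def dynamic_range(ratio):
    """Express a brightest-to-darkest light ratio in photographic stops and decibels."""
    stops = math.log2(ratio)           # each stop doubles the amount of light
    decibels = 20 * math.log10(ratio)  # 20*log10 convention used for image sensors
    return stops, decibels

stops, db = dynamic_range(100_000)
print(f"{stops:.1f} stops, {db:.0f} dB")  # 16.6 stops, 100 dB
```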

Single-photon sensors have been used for decades to catch starlight or to record very faint, high-speed interactions within cells under the microscope. They were not considered suitable for general photography because they had to be heavily cooled to work, and under normal lighting photons would pile up faster than the sensors could register them.

Conventional camera sensors must choose between a long exposure that reveals dark features (like the clock, left) and a short exposure that keeps bright objects from washing out (the lightbulb, center). Cameras using arrays of single-photon sensors are getting better at capturing bright and dark extremes simultaneously (right). IMAGE COURTESY OF MOHIT GUPTA

Gupta’s group, including graduate students and study co-authors Sizhuo Ma and Shantanu Gupta, has developed better ways to process the discrete photon measurements, while SPADs themselves have become less cumbersome to operate.

“Our collaborators in Switzerland are at the forefront of making these sensors into higher-resolution arrays,” Gupta says. “They’ve shown you can fabricate them in the same processes as commercial CMOS sensors, and in a way that makes them operate at room temperature.”

While SPAD chips could drop right into smartphones, handling the large bursts of data from these blazing-fast sensors still demands more computing power and storage than even the highest-performance handheld devices provide.

“We still need to develop engineering approaches to deal with this data deluge,” says Gupta, whose work has been submitted for patenting with the help of the Wisconsin Alumni Research Foundation. “With the right kind of hardware and the right algorithms, this could be in everyone’s pocket one day.”
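A back-of-the-envelope calculation suggests why the data volume is a problem. The snippet assumes a hypothetical one-megapixel array that outputs one bit per pixel per frame, running at the 100,000 frames per second reported for the SwissSPAD array, with no on-chip compression; the article does not give the array's actual resolution, and the function name is illustrative only.

```python
def raw_data_rate(width, height, frames_per_second, bits_per_pixel=1):
    """Uncompressed sensor output in bytes per second."""
    return width * height * frames_per_second * bits_per_pixel / 8

# Hypothetical 1-megapixel binary array at 100,000 frames per second.
rate = raw_data_rate(1000, 1000, 100_000)
print(f"{rate / 1e9:.1f} GB per second")  # 12.5 GB per second
```

Even a one-second burst under these assumptions would produce about 12.5 GB of raw data, which is why compression, on-sensor processing, or smarter algorithms would likely be needed before such cameras fit in a phone.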