Researchers are investigating whether a commercially available gaming headset can monitor brainwaves well enough to drive a speech device, so that users could speak simply by imagining saying a word.

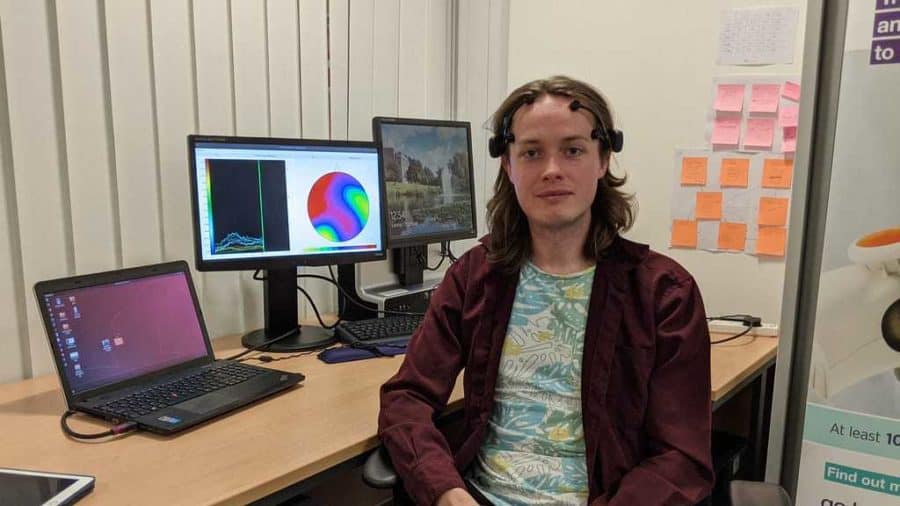

The headset uses an EEG (electroencephalography) system to detect brainwaves. These are processed by a computer that uses neural networks and deep learning to distinguish imagined speech from the user's other thoughts.

So far, the system can detect up to 16 isolated English phonemes, or spoken sounds, with accuracy comparable to that of bulky and expensive research-grade EEG machines.

Scott Wellington, now a PhD researcher at the University of Bath, started on the project whilst working at SpeakUnique, along with colleagues at the University of Edinburgh.

He’s now continuing his research at the University of Bath’s Centre for Doctoral Training in Accountable, Responsible and Transparent AI.

Scott and his collaborators previously developed a synthetic voice for rugby player Rob Burrow, who is losing his ability to speak due to Motor Neurone Disease (MND). They took data from Rob’s previous media appearances, including pitch-side interviews, and used cutting-edge machine learning to recreate his voice synthetically.

Scott said: “A current constraint of existing Text-to-Speech systems like Rob Burrow uses, is that the user still has to type in what they want to say, for the device to then say it. As you can imagine, this can be inconvenient, slow, and a source of deep frustration for people with MND. My hope is that speech neuroprostheses may provide some answer to this.”

The team compared speech decoding on a lightweight EEG device with data from a research-grade device, which has more electrodes but is bulkier and impractical to wear all day. Volunteers wore the device under three conditions: whilst a recording of their own speech was played to them, whilst they imagined saying the sound, and whilst they vocalised the sound.

The team tested several machine learning models for classifying speech from the EEG recordings, and has published the library of recordings so that it is freely available for other researchers to use.
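For readers curious what "classifying speech from EEG" can look like in practice, here is a minimal sketch in Python. It is not the team's method: it uses made-up synthetic feature vectors instead of real recordings, and a simple nearest-centroid classifier rather than the neural networks described above. The feature count, noise level and all function names are assumptions chosen purely for illustration. It shows the general shape of the task, which is to learn one pattern per phoneme from training trials and then check whether held-out trials are labelled more accurately than chance (1 in 16).

```python
import random

NUM_PHONEMES = 16  # the article reports up to 16 isolated phonemes
NUM_FEATURES = 8   # hypothetical number of features extracted per trial


def make_trials(rng, prototypes, trials_per_class, noise):
    """Build synthetic (features, label) pairs as noisy copies of each prototype."""
    trials = []
    for label, proto in enumerate(prototypes):
        for _ in range(trials_per_class):
            features = [x + rng.gauss(0.0, noise) for x in proto]
            trials.append((features, label))
    return trials


def fit_centroids(trials):
    """Average the training feature vectors of each class into one centroid."""
    sums, counts = {}, {}
    for features, label in trials:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


def accuracy(centroids, trials):
    """Fraction of trials whose predicted label matches the true label."""
    correct = sum(1 for features, label in trials if predict(centroids, features) == label)
    return correct / len(trials)


# Synthetic stand-in data: one random "brain pattern" per phoneme (an assumption).
rng = random.Random(0)
prototypes = [[rng.uniform(-1.0, 1.0) for _ in range(NUM_FEATURES)]
              for _ in range(NUM_PHONEMES)]
train = make_trials(rng, prototypes, trials_per_class=20, noise=0.3)
test = make_trials(rng, prototypes, trials_per_class=10, noise=0.3)

centroids = fit_centroids(train)
test_accuracy = accuracy(centroids, test)
chance_level = 1 / NUM_PHONEMES
print(f"held-out accuracy: {test_accuracy:.2f} (chance: {chance_level:.2f})")
```

Real EEG signals are far noisier than this toy data, so the accuracy printed here says nothing about how well the headset performs. The comparison against chance level is the part that carries over.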

Scott said: “This device doesn’t read your thoughts exactly – you have to consciously imagine saying the word for it to work, so users don’t have to worry about all of their private thoughts being vocalised.

“It works more like hyperpredictive text – it will be much quicker for the user to select the correct word they want to say from the list.

“The previous work I did as a Speech Scientist is by far the best thing I’ve ever done in my life, with real-world impact.

“It’s why I’m wanting to pursue developing brain-computer interfaces for speech, especially for people who have lost their speech due to MND, or similar neurodegenerative conditions.

“This field is very exciting and fast moving – I believe a solution is just around the corner.”

Scott presented his work at the Interspeech Virtual Conference on 29 October 2020.