SUMMARY: It would be ironic if today’s valid and timely call for a wide and rich variety of post-publication metrics — in place of just the unitary journal average (the “journal impact factor”) — were coupled with an ill-considered call for collapsing the planet’s wide and rich variety of peer-reviewed journals and their respective independent, established quality levels onto some sort of global, generic pass/fail system.

Patterson, Mark (2009), in PLoS Journals – measuring impact where it matters, wrote:

“[R]eaders tend to navigate directly to the articles that are relevant to them, regardless of the journal they were published in… [T]here is a strong skew in the distribution of citations within a journal – typically, around 80% of the citations accrue to 20% of the articles… [W]hy then do researchers and their paymasters remain wedded to assessing individual articles by using a metric (the impact factor) that attempts to measure the average citations to a whole journal?

“We’d argue that it’s primarily because there has been no strong alternative. But now alternatives are beginning to emerge… focusing on articles rather than journals… [and] not confining article-level metrics to a single indicator… Citations can be counted more broadly, along with web usage, blog and media coverage, social bookmarks, expert/community comments and ratings, and so on…

“[J]udgements about impact and relevance can be left almost entirely to the period after publication. By peer-reviewing submissions purely for scientific rigour, ethical conduct and proper reporting before publication, articles can be assessed and published rapidly. Once articles have joined the published literature, the impact and relevance of the article can then be determined on the basis of the activity of the research community as a whole… [through] [a]rticle-level metrics and indicators…”

Merits of Metrics. Of course direct article and author citation counts are infinitely preferable to — and more informative than — just a journal average (the journal “impact factor”).
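To make the point about averages concrete, here is a minimal sketch using made-up citation counts for ten articles in a single hypothetical journal. The numbers are assumptions chosen only to mimic the kind of skew Patterson describes; they are not data from any real journal.

```python
from statistics import mean, median

# Hypothetical citation counts for ten articles in one journal
# (illustrative values only, not real data).
citations = [120, 80, 6, 5, 4, 3, 3, 2, 1, 1]

# The journal-level average that an impact-factor-style figure reports.
journal_average = mean(citations)

# What a typical article in the journal actually receives.
typical_article = median(citations)

# Share of all citations going to the top 20% of articles (2 of 10).
top_two = sorted(citations, reverse=True)[:2]
top_share = sum(top_two) / sum(citations)

# Number of articles that fall below the journal average.
below_average = sum(1 for c in citations if c < journal_average)

print(f"journal average: {journal_average}")
print(f"median article:  {typical_article}")
print(f"top-20% share:   {top_share:.0%}")
print(f"articles below the average: {below_average} of {len(citations)}")
```

In this toy case the journal average is 22.5 citations, yet eight of the ten articles fall below it and the median article has 3.5. The average says little about any individual article, which is why direct article-level counts are more informative.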

And yes, multiple postpublication metrics will be a great help in navigating, evaluating and analyzing research influence, importance and impact.

But it is a great mistake to imagine that this implies that peer review can now be done on just a generic “pass/fail” basis.

Purpose of Peer Review. Not only is peer review dynamic and interactive — improving papers before approving them for publication — but the planet’s 25,000 peer-reviewed journals differ not only in the subject matter they cover, but also, within a given subject matter, they differ (often quite substantially) in their respective quality standards and criteria.

It is extremely unrealistic (and would be highly dysfunctional, if it were ever made to come true) to suppose that these 25,000 journals are (or ought to be) flattened to provide a 0/1 pass/fail decision on publishability at some generic adequacy level, common to all refereed research.

Pass/Fail Versus Letter-Grades. Nor is it just a matter of switching all journals from assigning a generic pass/fail grade to each assigning its own letter grade (A-, B+, etc.), despite the fact that that is effectively what the current system of multiple, independent peer-reviewed journals provides. For not only do journal peer-review standards and criteria differ, but the expertise of their respective “peers” differs too. Better journals have better and more exacting referees, exercising more rigorous peer review. (So the 25,000 peer-reviewed journals today cannot be thought of as one generic peer-review filter that accepts papers for publication in each field and assigns them grades from A+ downward; rather there are A+ journals, B- journals, etc.: each established journal has its own independent standards, to which submissions are answerable.)

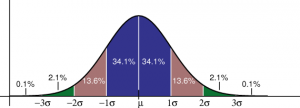

Track Records and Quality Standards. And users know all this, from the established track records of the journals they consult as readers and publish in as authors. Whether or not we like to put it that way, this all boils down to selectivity across a gaussian distribution of research quality in each field. There are highly selective journals, that accept only the very best papers — and even those often only after several rounds of rigorous refereeing, revision and re-refereeing. And there are less selective journals, that impose less exacting standards — all the way down to the fuzzy pass/fail threshold that distinguishes “refereed” journals from journals whose standards are so low that they are virtually vanity-press journals.

Supplement Versus Substitute. This difference (and independence) among journals in terms of their quality standards is essential if peer review is to serve as the quality enhancer and filter that it is intended to be. Of course the system is imperfect, and, for just that reason alone (amongst many others) a rich diversity of post-publication metrics is an invaluable supplement to peer review. But they are certainly no substitute for pre-publication peer review, or, most importantly, its quality triage.

Quality Distribution. So much research is published daily in most fields that on the basis of a generic 0/1 quality threshold, researchers simply cannot decide rationally or reliably what new research is worth the time and investment to read, use and try to build upon. Researchers and their work differ in quality too, and they are entitled to know a priori, as they do now, whether or not a newly published work has made the highest quality cut, rather than merely that it has met some default standards, after which users must wait for the multiple post-publication metrics to accumulate across time in order to be able to have a more nuanced quality assessment.

Rejection Rates. More nuanced sorting of new research is precisely what peer review is about, and for, and especially at the highest quality levels. Although authors (knowing the quality track-records of their journals) mostly self-select, submitting their papers to journals whose standards are roughly commensurate with their quality, the underlying correlate of a journal’s refereeing quality standards is basically its relative rejection rate: What percentage of annual papers in its designated subject matter would meet its standards (if all were submitted to that journal, and the only constraint on acceptance were the quality level of the article, not how many articles the journal could manage to referee and publish per year)?

Quality Ranges. This independent standard-setting by journals effectively ranges the 25,000 titles along a rough letter-grade continuum within each field, and their “grades” are roughly known by authors and users, from the journals’ track-records for quality.

Quality Differential. Making peer review generic and entrusting the rest to post-publication metrics would wipe out that differential quality information for new research, and force researchers at all levels to risk pot-luck with newly published research (until and unless enough time has elapsed to sort out the rest of the quality variance with post-publication metrics). Among other things, this would effectively slow down instead of speeding up research progress.

Turn-Around Time. Of course pre-publication peer review takes time too; but if its result is that it pre-sorts the quality of new publications in terms of known, reliable letter-grade standards (the journals’ names and track-records), then it’s time well spent. Offloading that dynamic pre-filtering function onto post-publication metrics, no matter how rich and plural, would greatly handicap research usability and progress, and especially at its all-important highest quality levels.

More Value From Post-Publication Metrics Does Not Entail Less Value From Pre-Publication Peer Review. It would be ironic if today’s eminently valid and timely call for a wide and rich variety of post-publication metrics — in place of just the unitary journal average (the “journal impact factor”) — were coupled with an ill-considered call for collapsing the planet’s wide and rich variety of peer-reviewed journals and their respective independent, established quality levels onto some sort of global, generic pass/fail system.

Differential Quality Tags. There is an idea afoot that peer review is just some sort of generic pass/fail grade for “publishability,” and that the rest is a matter of post-publication evaluation. I think this is incorrect, and represents a misunderstanding of the actual function that peer review is currently performing. It is not a 0/1, publishable/unpublishable threshold. There are many different quality levels, and they get more exacting and selective in the higher quality journals (which also have higher-quality and more exacting referees and refereeing). Users need these differential quality tags when they are trying to decide whether newly published work is worth taking the time to read, and worth the effort and risk of trying to build upon (at the quality level of their own work).

User/Author/Referee Experience. I think both authors and users have a good idea of the quality levels of the journals in their fields — not from the journals’ impact factors, but from their content, and their track-records for content. As users, researchers read articles in their journals; as authors they write for those journals, and revise for their referees; and as referees they referee for them. They know that all journals are not equal, and that “peer-reviewed” can be done at a whole range of quality levels.

Metrics As Substitutes for User/Author/Referee Experience? Is there any substitute for this direct experience with journals (as users, authors and referees) in order to know what their peer-reviewing standards and quality level are? There is nothing yet, and no one can say yet whether there will ever be metrics as accurate as having read, written and refereed for the journals in question. Metrics might eventually provide an approximation, though we don’t yet know how close, and of course they only come after publication (well after).

Quality Lapses? Journal track records, user experiences, and peer review itself are certainly not infallible either, however; the usually-higher-quality journals may occasionally publish a lower-quality article, and vice versa. But on average, the quality of the current articles should correlate well with the quality of past articles. Whether judgements of quality from direct experience (as user/author/referee) will ever be matched or beaten by multiple metrics, I cannot say, but I am pretty sure they are not matched or beaten by the journal impact factor.

Regression on the Generic Mean? And even if multiple metrics do become as good a joint predictor of journal article quality as user experience, it does not follow that peer review can then be reduced to generic pass/fail, with the rest sorted by metrics, because (1) metrics are journal-level, not article-level (though they can also be author-level) and, more important still, (2) if journal-differences are flattened to generic peer review, entrusting the rest to metrics, then the quality of articles themselves will fall, as rigorous peer review does not just assign articles a differential grade (via the journal’s name and track-record), but it improves them, through revision and re-refereeing. More generic 0/1 peer review, with less individual quality variation among journals, would just generate quality regression on the mean.

REFERENCES

Bollen J, Van de Sompel H, Hagberg A, Chute R (2009) A Principal Component Analysis of 39 Scientific Impact Measures. PLoS ONE 4(6): e6022. doi:10.1371/journal.pone.0006022

Brody, T., Harnad, S. and Carr, L. (2006) Earlier Web Usage Statistics as Predictors of Later Citation Impact. Journal of the American Association for Information Science and Technology (JASIST) 57(8) pp. 1060-1072. http://eprints.ecs.soton.ac.uk/10713/

Garfield, E., (1955) Citation Indexes for Science: A New Dimension in Documentation through Association of Ideas. Science 122: 108-111

Harnad, S. (1979) Creative disagreement. The Sciences 19: 18 – 20.

Harnad, S. (ed.) (1982) Peer commentary on peer review: A case study in scientific quality control, New York: Cambridge University Press.

Harnad, S. (1984) Commentaries, opinions and the growth of scientific knowledge. American Psychologist 39: 1497 – 1498.

Harnad, Stevan (1985) Rational disagreement in peer review. Science, Technology and Human Values, 10 p.55-62.

Harnad, S. (1990) Scholarly Skywriting and the Prepublication Continuum of Scientific Inquiry. Psychological Science 1: 342 – 343 (reprinted in Current Contents 45: 9-13, November 11 1991).

Harnad, S. (1986) Policing the Paper Chase. (Review of S. Lock, A difficult balance: Peer review in biomedical publication.) Nature 322: 24 – 5.

Harnad, S. (1996) Implementing Peer Review on the Net: Scientific Quality Control in Scholarly Electronic Journals. In: Peek, R. & Newby, G. (Eds.) Scholarly Publishing: The Electronic Frontier. Cambridge MA: MIT Press. Pp 103-118.

Harnad, S. (1997) Learned Inquiry and the Net: The Role of Peer Review, Peer Commentary and Copyright. Learned Publishing 11(4) 283-292.

Harnad, S. (1998/2000/2004) The invisible hand of peer review. Nature [online] (5 Nov. 1998), Exploit Interactive 5 (2000), and in Shatz, D. (2004) (ed.) Peer Review: A Critical Inquiry. Rowman & Littlefield. Pp. 235-242.

Harnad, S. (2008) Validating Research Performance Metrics Against Peer Rankings. Ethics in Science and Environmental Politics 8 (11) Special Issue: The Use And Misuse Of Bibliometric Indices In Evaluating Scholarly Performance

Harnad, S. (2009) Open Access Scientometrics and the UK Research Assessment Exercise. Scientometrics 79 (1)

Shadbolt, N., Brody, T., Carr, L. and Harnad, S. (2006) The Open Research Web: A Preview of the Optimal and the Inevitable, in Jacobs, N., Eds. Open Access: Key Strategic, Technical and Economic Aspects. Chandos.
