UC San Francisco scientists recently showed that brain activity recorded as research participants spoke could be used to create remarkably realistic synthetic versions of that speech, raising hope that such brain recordings could one day be used to restore voices to people who have lost the ability to speak. However, it took the researchers weeks or months to translate brain activity into speech, a far cry from the instant results that would be needed for such a technology to be clinically useful.

Now, in a complementary new study, again working with volunteer study subjects, the scientists have for the first time decoded spoken words and phrases in real time from the brain signals that control speech, aided by a novel approach that involves identifying the context in which participants were speaking.

“For years, my lab was mainly interested in fundamental questions about how brain circuits interpret and produce speech,” said speech neuroscientist Eddie Chang, MD, a professor of neurosurgery, Bowes Biomedical Investigator, and member of the Weill Institute for Neurosciences at UCSF. “With the advances we’ve seen in the field over the past decade it became clear that we might be able to leverage these discoveries to help patients with speech loss, which is one of the most devastating consequences of neurological damage.”

Patients who experience facial paralysis due to brainstem stroke, spinal cord injury, neurodegenerative disease, or other conditions may partially or completely lose their ability to speak. However, the brain regions that normally control the muscles of the jaw, lips, tongue, and larynx to produce speech are often intact and remain active in these patients, suggesting it could be possible to use these intentional speech signals to decode what patients are trying to say.

“Currently, patients with speech loss due to paralysis are limited to spelling words out very slowly using residual eye movements or muscle twitches to control a computer interface,” Chang explained. “But in many cases, information needed to produce fluent speech is still there in their brains. We just need the technology to allow them to express it.”

Context Improves Real-Time Speech Decoding

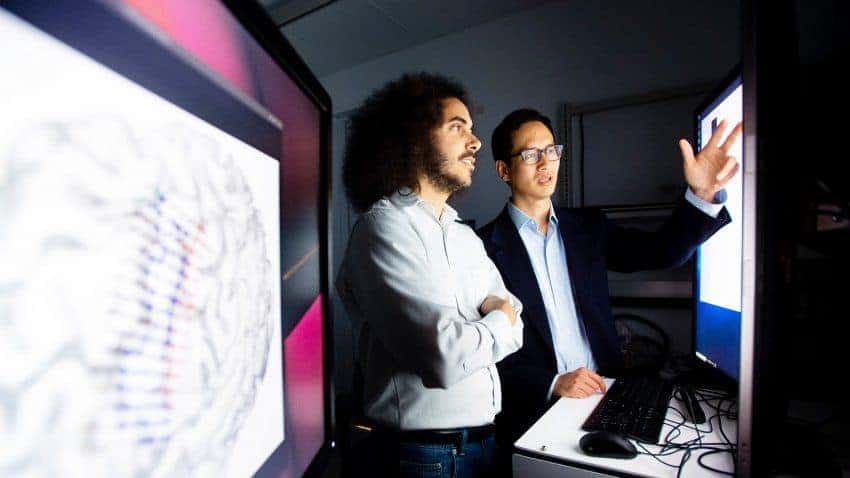

As a stepping stone towards such a technology, Chang’s lab has spent years studying the brain activity that controls speech with the help of volunteer research participants at the UCSF Epilepsy Center.

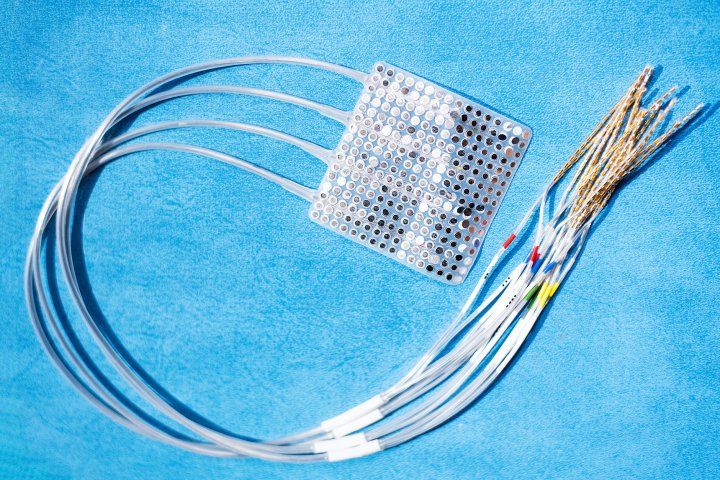

These patients – all of whom have normal speech – have had a small patch of tiny recording electrodes temporarily placed on the surface of their brains for a week or more to map the origins of their seizures in preparation for neurosurgery. This involves a technique called electrocorticography (ECoG), which provides much richer and more detailed data about brain activity than is possible with non-invasive technologies like EEG or fMRI. While they are in the hospital, some of these patients agree to let Chang’s group use the already-implanted ECoG electrodes as part of research experiments not directly related to their illness.

In the new study, published July 30 in Nature Communications, researchers from the Chang lab led by postdoctoral researcher David Moses, PhD, worked with three such research volunteers to develop a way to instantly identify the volunteers’ spoken responses to a set of standard questions based solely on their brain activity, representing a first for the field.

To achieve this result, Moses and colleagues developed a set of machine learning algorithms equipped with refined phonological speech models, which were capable of learning to decode specific speech sounds from participants’ brain activity. Brain data was recorded while volunteers listened to a set of nine simple questions (e.g. “How is your room currently?”, “From 0 to 10, how comfortable are you?”, or “When do you want me to check back on you?”) and responded out loud with one of 24 answer choices. After some training, the machine learning algorithms learned to detect when participants were hearing a new question or beginning to respond, and to identify which of the two dozen standard responses the participant was giving with up to 61 percent accuracy as soon as they had finished speaking.

“Real-time processing of brain activity has been used to decode simple speech sounds, but this is the first time this approach has been used to identify spoken words and phrases,” Moses said. “It’s important to keep in mind that we achieved this using a very limited vocabulary, but in future studies we hope to increase the flexibility as well as the accuracy of what we can translate from brain activity.”

One of the study’s key findings is that incorporating the context in which participants were speaking dramatically improved the algorithm’s speed and accuracy. Using volunteers’ brain activity to first identify which of the predefined questions they had heard – which the algorithm did with up to 75 percent accuracy – made it possible to significantly narrow down the range of likely answers, since each answer was only an appropriate response to certain questions.
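To make the idea of context narrowing the range of likely answers concrete, here is a minimal Python sketch. It is not the study's actual implementation: the function name `integrate_context`, the two example questions, the four example answers, and all probability values are hypothetical, and the sketch assumes that every answer valid for a question is equally likely before any speech is decoded. It shows only the general principle: probabilities for the decoded question are turned into a prior over answers, which then reweights the answer scores obtained from brain activity alone.

```python
from typing import Dict, List


def integrate_context(
    question_probs: Dict[str, float],
    answer_likelihoods: Dict[str, float],
    valid_answers: Dict[str, List[str]],
) -> Dict[str, float]:
    """Reweight answer likelihoods using a prior derived from the decoded question.

    question_probs:     P(question | brain activity while listening)
    answer_likelihoods: score for each answer from brain activity while speaking
    valid_answers:      which answers are appropriate for each question
    """
    # Context prior: P(answer) = sum over questions of P(question) * P(answer | question),
    # where P(answer | question) is assumed uniform over that question's valid answers.
    prior = {answer: 0.0 for answer in answer_likelihoods}
    for question, p_question in question_probs.items():
        answers = valid_answers[question]
        for answer in answers:
            prior[answer] += p_question / len(answers)

    # Combine the prior with the speech-based likelihoods and normalize.
    unnormalized = {a: answer_likelihoods[a] * prior[a] for a in answer_likelihoods}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("no answer is compatible with the decoded context")
    return {a: score / total for a, score in unnormalized.items()}


# Hypothetical example: two questions, each with its own set of valid answers.
valid_answers = {
    "How is your room currently?": ["bright", "dark"],
    "From 0 to 10, how comfortable are you?": ["five", "ten"],
}
# The question decoder favors the room question.
question_probs = {
    "How is your room currently?": 0.75,
    "From 0 to 10, how comfortable are you?": 0.25,
}
# Taken alone, the answer decoder slightly favors "five".
answer_likelihoods = {"bright": 0.30, "dark": 0.20, "five": 0.35, "ten": 0.15}

posterior = integrate_context(question_probs, answer_likelihoods, valid_answers)
best_without_context = max(answer_likelihoods, key=answer_likelihoods.get)
best_with_context = max(posterior, key=posterior.get)
print(best_without_context, best_with_context)
```

In this toy example the answer decoder alone would pick "five", but once the likely question is taken into account the most probable answer becomes "bright", because "five" is not an appropriate response to the question the participant most likely heard.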

“Most previous approaches have focused on decoding speech alone, but here we show the value of decoding both sides of a conversation – both the questions someone hears and what they say in response,” Chang said. “This reinforces our intuition that speech is not something that occurs in a vacuum and that any attempt to decode what patients with speech impairments are trying to say will be improved by taking into account the full context in which they are trying to communicate.”

First Attempt to Restore Speech in Clinic

Following a decade of advances in understanding the brain activity that normally controls speech, Chang’s group has recently set out to discover whether these advances could be used to restore communication abilities to paralyzed patients.