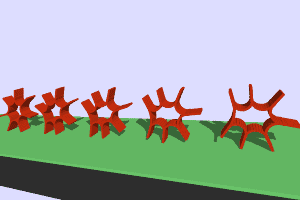

Motion picture animation and video games are impressively lifelike nowadays, capturing a wisp of hair falling across a heroine’s eyes or a canvas sail snapping crisply in the wind. Collaborators from the University of California, Los Angeles (UCLA) and Carnegie Mellon University have adapted this sophisticated computer graphics technology to simulate the movements of soft, limbed robots for the first time.

The findings were published May 6 in Nature Communications in a paper titled “Dynamic Simulation of Articulated Soft Robots.”

“We have achieved faster than real-time simulation of soft robots, and this is a major step toward such robots that are autonomous and can plan out their actions on their own,” said study author Khalid Jawed, an assistant professor of mechanical and aerospace engineering at UCLA Samueli School of Engineering. “Soft robots are made of flexible material which makes them intrinsically resilient against damage and potentially much safer in interaction with humans. Prior to this study, predicting the motion of these robots has been challenging because they change shape during operation.”

Movies often use an algorithm named discrete elastic rods (DER) to animate free-flowing objects. DER can predict hundreds of movements in less than a second. The researchers wanted to create a physics engine using DER that could simulate the movements of bio-inspired robots and robots in challenging environments, such as the surface of Mars or underwater.
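The article does not describe the simulator's internals, so the following is only a rough illustration of the DER idea, not the authors' code. It is a minimal planar sketch built on several assumptions. The rod is modeled as a chain of nodes, with a stretching energy for each edge and a bending energy for each interior node. Bending uses the discrete curvature κ = 2 tan(φ/2), where φ is the turning angle between consecutive edges, as in the DER literature. Twist is ignored. Forces come from finite differences of the energy instead of analytic gradients. Time is advanced with an explicit symplectic Euler step rather than the implicit time stepping commonly used in DER implementations. The function names (`rod_energy`, `numerical_gradient`, `step`) and every parameter value are hypothetical.

```python
import numpy as np


def rod_energy(x, l0, kappa0, ks, kb):
    """Elastic energy of a planar discrete rod (simplified, DER-style, no twist).

    x      : (N, 2) node positions
    l0     : (N-1,) rest lengths of the edges
    kappa0 : (N-2,) natural (rest) curvature at each interior node
    ks, kb : stretching and bending stiffness (hypothetical values)
    """
    edges = x[1:] - x[:-1]
    lengths = np.linalg.norm(edges, axis=1)
    # Stretching: squared axial strain of each edge, weighted by its rest length.
    e_stretch = 0.5 * ks * np.sum((lengths / l0 - 1.0) ** 2 * l0)

    # Bending: signed turning angle phi between consecutive edges.
    a, b = edges[:-1], edges[1:]
    cross = a[:, 0] * b[:, 1] - a[:, 1] * b[:, 0]
    dot = np.sum(a * b, axis=1)
    phi = np.arctan2(cross, dot)
    kappa = 2.0 * np.tan(phi / 2.0)  # discrete curvature from the DER literature
    voronoi = 0.5 * (l0[:-1] + l0[1:])  # rest length attributed to each interior node
    e_bend = 0.5 * kb * np.sum((kappa - kappa0) ** 2 / voronoi)
    return e_stretch + e_bend


def numerical_gradient(f, x, h=1e-6):
    """Central-difference gradient of the scalar function f at x."""
    g = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        xp, xm = x.copy(), x.copy()
        xp[idx] += h
        xm[idx] -= h
        g[idx] = (f(xp) - f(xm)) / (2.0 * h)
    return g


def step(x, v, dt, mass, l0, kappa0, ks, kb,
         n_fixed=2, gravity=(0.0, -9.81), damping=0.01):
    """One explicit symplectic Euler step; the first n_fixed nodes are clamped."""
    def energy(y):
        return rod_energy(y, l0, kappa0, ks, kb)

    # Internal elastic forces are the negative energy gradient; add gravity.
    force = -numerical_gradient(energy, x) + mass * np.asarray(gravity)
    # Crude per-step velocity damping (numerical, not a physical model).
    v = (1.0 - damping) * v + dt * force / mass
    v[:n_fixed] = 0.0  # clamped nodes stay put (cantilever boundary condition)
    return x + dt * v, v


# Usage: a 1 m cantilever with 11 nodes, initially straight along +x, sagging under gravity.
n = 11
x = np.column_stack([np.linspace(0.0, 1.0, n), np.zeros(n)])
v = np.zeros_like(x)
l0 = np.full(n - 1, 0.1)
kappa0 = np.zeros(n - 2)
for _ in range(200):
    x, v = step(x, v, dt=1e-4, mass=0.01, l0=l0, kappa0=kappa0, ks=1000.0, kb=1.0)
print("tip position after 200 steps:", x[-1])
```

This sketch trades speed for brevity. A practical solver would at least replace the finite-difference forces with analytic gradients (and Hessians, if it integrates implicitly), since numerical differentiation costs two energy evaluations per coordinate per step.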

Another algorithm-based technology, the finite element method (FEM), can simulate the movements of solid and rigid robots, but it is not well-suited to tackle the intricacies of soft, natural movements. It also requires significant time and computational power.

Until now, roboticists have relied on a painstaking trial-and-error process to investigate the dynamics of soft material systems and to design and control soft robots.

“Robots made out of hard and inflexible materials are relatively easy to model using existing computer simulation tools,” said Carmel Majidi, an associate professor of mechanical engineering in Carnegie Mellon’s College of Engineering. “Until now, there haven’t been good software tools to simulate robots that are soft and squishy. Our work is one of the first to demonstrate how soft robots can be successfully simulated using the same computer graphics software that has been used to model hair and fabrics in blockbuster films and animated movies.”

The researchers started working together in Majidi’s Soft Machines Lab more than three years ago. Continuing their collaboration on this latest work, Jawed ran the simulations in his research lab at UCLA while Majidi performed the physical experiments that validated the simulation results.

The research was funded in part by the Army Research Office.

“Experimental advances in soft-robotics have been outpacing theory for several years,” said Dr. Samuel Stanton, a program manager with the Army Research Office, an element of the U.S. Army Combat Capabilities Development Command’s Army Research Laboratory. “This effort is a significant step in our ability to predict and design for dynamics and control in highly deformable robots operating in confined spaces with complex contacts and constantly changing environments.”

The researchers are currently working to apply this technology to other kinds of soft robots, such as ones inspired by the movements of bacteria and starfish. Such swimming robots could be fully untethered and used in oceanography to monitor seawater conditions or inspect the status of fragile marine life.

The new simulation tool can significantly reduce the time it takes to bring a soft robot from drawing board to application. While robots are still very far from matching the efficiency and capabilities of natural systems, computer simulations can help to reduce this gap.

The co-first authors of the paper are UCLA’s Weicheng Huang and Carnegie Mellon’s Xiaonan Huang.

Huang, W., Huang, X., Majidi, C. et al. Dynamic simulation of articulated soft robots. Nat Commun 11, 2233 (2020). DOI: 10.1038/s41467-020-15651-9