Scientific research has changed dramatically in the centuries since Galileo, Newton and Darwin. Whereas scientists once often toiled in isolation with homemade experiments and treatises, today collaboration is the norm. Teams of scientists now routinely pool and process reams of data gleaned from high-tech instruments.

Yet as modern science has grown in sophistication and delivered amazing breakthroughs, a worrying trend has emerged. Confounded scientists have demonstrated that a large number of studies cannot be successfully replicated, even when using the same methods as the original research. This “reproducibility crisis” has particularly impacted the fields of psychology and medicine, throwing into question the validity of many original findings.

Now, a new study, published May 20 in the journal Nature and co-led by Stanford researchers, is underscoring one particular factor in the reproducibility crisis: the increasingly complex and flexible ways that experimental data can be analyzed. Simply put, no two groups of researchers are necessarily crunching data the same way. And with so much data to get through and so many ways to process it, researchers can arrive at totally different conclusions.

In the first-of-its-kind study, 70 independent research teams from around the world were given a common analysis challenge to tackle. All were presented with the same data – brain scans of volunteers performing a monetary decision-making task – and told to test out nine different hypotheses. Each team analyzed the data differently.

Ultimately, the teams’ results varied dramatically for five out of those nine hypotheses, with some reporting a positive result and others a negative result. “The main concerning takeaway from our study is that, given exactly the same data and the same hypotheses, different teams of researchers came to very different conclusions,” said paper co-senior author Russell Poldrack, the Albert Ray Lang Professor of Psychology in the School of Humanities and Sciences. He also co-leads an international project called the Neuroimaging Analysis, Replication and Prediction Study (NARPS), which conducted the experiment comparing data analysis techniques.

While worrisome, Poldrack said the findings can help researchers assess and improve the quality of their data analyses moving forward. Potential solutions include ensuring that data is analyzed in multiple ways, as well as making data analysis workflows transparent and openly shared among researchers.

“We really want to know when we have done something wrong so we can fix it,” said Poldrack. “We’re not hiding from or covering up the bad news.”

The winding road of analysis

The new study centered on a type of neuroimaging called functional magnetic resonance imaging, or fMRI. The technique measures blood flow in the brain as study participants perform a task. Higher levels of blood flow indicate neural activity in a brain region. In this way, fMRI lets researchers probe which areas of the brain are involved in certain behaviors, as well as the experiencing of emotions, the intricacies of memory storage and much more.

The initial NARPS data consisted of fMRI scans of 108 individuals, obtained by the research group of Tom Schonberg at Tel Aviv University. Study participants engaged in a sort of simulated gambling experiment, developed by Poldrack and colleagues in previous research. The fMRI scans showed brain regions, particularly those involved in reward processing, changing their activity in relation to the amount of money that could be won or lost on each gamble. But extrapolating from the collected brain scans to clear-cut results proved to be anything but straightforward.

“The processing you have to go through from raw data to a result with fMRI is really complicated,” said Poldrack. “There are a lot of choices you have to make at each place in the analysis workflow.”

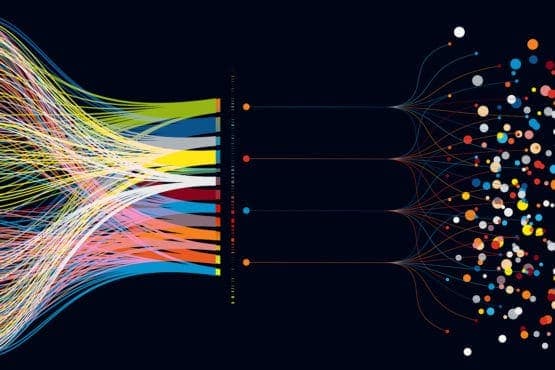

The new study dramatically demonstrated this analytical flexibility. After receiving the large neuroimaging dataset, shared across the world using the resources of the Stanford Research Computing Center, each research team went down its own winding road of analysis. Right out of the gate, teams modeled the hypothesis tests in differing ways. The teams also used different software packages for data analysis. Preprocessing steps and techniques likewise varied from team to team. Furthermore, the research groups set different thresholds for deciding whether parts of the brain showed significantly increased activation. The teams could not even always agree on how to define anatomical regions of interest in the brain when applying statistical analysis.
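To see how a single choice such as a threshold can flip a conclusion, consider a minimal Python sketch. It is only an illustration, not the NARPS teams' actual methods: the z-scores, the team names, the cutoffs and the `region_is_active` helper are all hypothetical, made up for this example.

```python
# Hypothetical z-scores for ten voxels in one brain region (made-up numbers,
# not NARPS data).
z_scores = [1.2, 2.1, 2.4, 2.8, 3.0, 3.3, 1.9, 2.6, 3.5, 0.8]

# Hypothetical analysis choices per team:
# (z-score threshold, minimum number of voxels that must exceed it).
teams = {
    "team_a": (2.3, 5),
    "team_b": (3.1, 3),
    "team_c": (2.3, 7),
}


def region_is_active(scores, z_threshold, min_voxels):
    """Report a positive result if enough voxels exceed the threshold."""
    surviving = sum(1 for z in scores if z > z_threshold)
    return surviving >= min_voxels


# Every team sees exactly the same data; only the analysis choices differ.
results = {
    name: region_is_active(z_scores, z_threshold, min_voxels)
    for name, (z_threshold, min_voxels) in teams.items()
}
print(results)
```

In this toy case, one hypothetical team reports a positive result and the other two report a negative one, even though all three looked at identical numbers. Real fMRI pipelines involve many more decisions than this, which is part of why the teams' paths diverged so widely.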

In the end, the 70 research teams mostly agreed on four hypotheses about whether there was a significant activation effect or not in a certain brain region amongst study participants. Yet for the remaining five, the teams mostly disagreed.

Ever better

Poldrack hopes the NARPS study can serve as a valuable bit of reckoning, not just for the neuroimaging community, but also for other scientific fields with similarly complex workflows and broad possibilities for how different analysis steps are implemented.

“We think that any field with similarly complex data and methods would show similar variability in analyses done side-by-side of the same dataset,” said Poldrack.

The problem highlighted by the new study could become even more pervasive in the future, as the datasets that fuel many scientific discoveries grow ever larger in size. “Our NARPS work highlights the fact that as data has gotten so big, analysis has become a real issue,” said Poldrack.

One encouraging and important takeaway from NARPS, though, is the dedication shown by its research teams in getting to the roots of the reproducibility crisis. For the study, almost 200 individual researchers willingly put tens or even hundreds of hours into a critical self-assessment.

“We want to test ourselves as severely as possible, and this is an example of many researchers spending altogether thousands of person-hours to do that,” said Poldrack. “It shows scientists fundamentally care about making sure what we are doing is right, that our results will be reproducible and reliable, and that we are getting the right answers.”

Poldrack is also a member of Stanford Bio-X and the Wu Tsai Neurosciences Institute. Other Stanford co-authors include Susan Holmes, a professor of statistics; former research associate Krzysztof Gorgolewski; postdoctoral researchers Leonardo Tozzi and Rui Yuan; and PhD student Claire Donnat. About 200 researchers from 100 institutions worldwide contributed to the study. Tom Schonberg, an assistant professor at Tel Aviv University, led the project with Rotem Botvinik-Nezer, his former PhD student at Tel Aviv University and now a postdoctoral researcher at Dartmouth College.

Multiple researchers who worked on the study received funding from various international organizations. Hosting of the data on OpenNeuro, an open science neuroinformatics database started by Poldrack, was supported by the National Institutes of Health.
