Music is an indispensable element in film: it establishes atmosphere and mood, drives the viewer’s emotional reactions, and significantly influences the audience’s interpretation of the story.

In a recent paper published in PLOS One, a research team at the USC Viterbi School of Engineering, led by Professor Shrikanth Narayanan, sought to examine objectively how music relates to cinematic genre. Their study aimed to determine whether AI-based technology could predict a film’s genre from its soundtrack alone.

“By better understanding how music affects the viewer’s perception of a film, we gain insights into how film creators can reach their audience in a more compelling way,” said Narayanan, University Professor and Niki and Max Nikias Chair in Engineering, professor of electrical and computer engineering and computer science and the director of USC Viterbi’s Signal Analysis and Interpretation Laboratory (SAIL).

The notion that different film genres are more likely to use certain musical elements in their soundtrack is rather intuitive: a lighthearted romance might include rich string passages and lush, lyrical melodies, while a horror film might instead feature unsettling, piercing frequencies and eerily discordant notes.

But while past work qualitatively indicates that different film genres have their own sets of musical conventions (the conventions that make that romance film sound different from that horror movie), Narayanan and team set out to find quantitative evidence that elements of a film’s soundtrack could be used to characterize the film’s genre.

Narayanan and team’s study was the first to apply deep learning models to the music used in a film to see whether a computer could predict a film’s genre from its soundtrack alone. They found that these models could accurately classify a film’s genre, supporting the notion that musical features can be powerful indicators of how we perceive different films.

According to Timothy Greer, a Ph.D. student at USC Viterbi in the department of computer science who worked with Narayanan on the study, their work could have valuable applications for media companies and creators in understanding how music can enhance other forms of media. It could give production companies and music supervisors a better understanding of how to create and place music in television, movies, advertisements, and documentaries in order to elicit certain emotions in viewers.

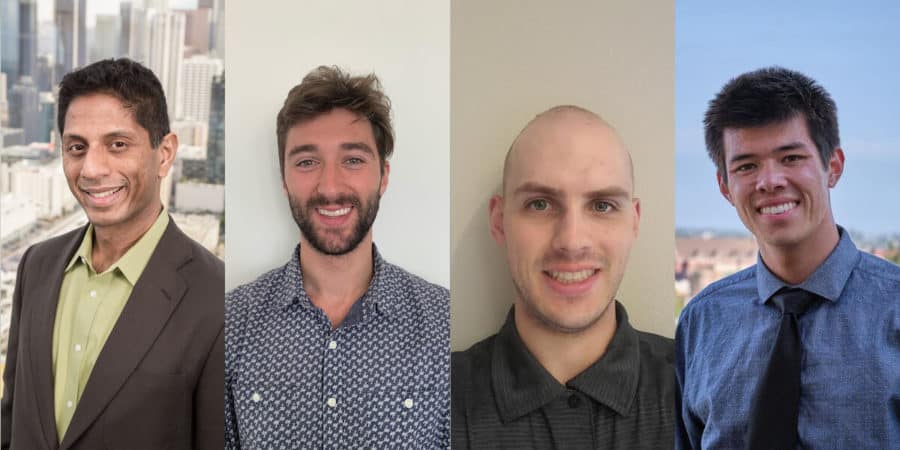

In addition to Narayanan and Greer, the research team for the study included Dillon Knox, a Ph.D. student in the department of electrical and computer engineering, and Benjamin Ma, who graduated from USC in 2021 with a B.S. in computer science, a master’s in computer science, and a minor in music production. (Ma was also named one of the two 2021 USC Schwarzman Scholars.) The team worked within the Center for Computational Media Intelligence, a research group in SAIL.

Predicting Genre From Soundtrack

In their study, the group examined a dataset of 110 popular films released between 2014 and 2019. They used the genre classifications listed on the Internet Movie Database (IMDb) to label each film as action, comedy, drama, horror, romance, or science fiction; many of the films span more than one of these genres.
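Because many films carry more than one genre tag, one common way to represent each film’s labels is a multi-hot vector with one position per genre. The sketch below is an assumption about how such labels might be encoded, not the study’s code; the film titles and the helper name `multi_hot` are hypothetical.

```python
# Hypothetical encoding of IMDb-style multi-genre tags as multi-hot vectors.
GENRES = ["action", "comedy", "drama", "horror", "romance", "science fiction"]


def multi_hot(film_genres):
    """Return a 0/1 list with a 1 at every genre the film is tagged with."""
    tags = {g.lower() for g in film_genres}
    unknown = tags - set(GENRES)
    if unknown:
        raise ValueError(f"unrecognized genre(s): {sorted(unknown)}")
    return [1 if g in tags else 0 for g in GENRES]


# Made-up example films; these are not entries from the study's dataset.
films = {
    "Film A": ["Action", "Science Fiction"],
    "Film B": ["Comedy", "Romance", "Drama"],
}
labels = {title: multi_hot(tags) for title, tags in films.items()}
print(labels)  # {'Film A': [1, 0, 0, 0, 0, 1], 'Film B': [0, 1, 1, 0, 1, 0]}
```

Encoding labels this way lets a classifier treat each genre as its own yes/no decision, so a film tagged as both comedy and romance is not forced into a single category.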

Next, they applied a deep learning network to extract auditory information, such as timbre, harmony, melody, rhythm, and tone, from the music and score of each film. Using these musical features alone, the network proved capable of accurately classifying the genre of each film.
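The article does not describe the network’s architecture, so the following is only a rough illustration of one common pattern for multi-genre prediction: pool per-segment feature vectors into a single film-level vector, then give each genre its own independent sigmoid output so a film can receive several labels. The two-number feature vectors, the weights, the biases, and the 0.5 threshold are all made-up values, not trained parameters or anything from the paper; the function names are hypothetical.

```python
import math

# Hypothetical six-genre label set matching the article's genre list.
GENRES = ["action", "comedy", "drama", "horror", "romance", "science fiction"]


def mean_pool(segment_features):
    """Average per-segment feature vectors into a single film-level vector."""
    n = len(segment_features)
    dim = len(segment_features[0])
    return [sum(seg[i] for seg in segment_features) / n for i in range(dim)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_genres(film_vec, weights, biases, threshold=0.5):
    """Score each genre with its own logistic output; a film may get several labels."""
    probs = {}
    for genre, w, b in zip(GENRES, weights, biases):
        z = sum(wi * xi for wi, xi in zip(w, film_vec)) + b
        probs[genre] = sigmoid(z)
    predicted = [g for g, p in probs.items() if p >= threshold]
    return probs, predicted


# Made-up two-dimensional features for two music segments of one film.
segments = [[0.2, 0.9], [0.4, 0.7]]
film_vec = mean_pool(segments)  # approximately [0.3, 0.8]

# Untrained, hand-picked weights purely for illustration.
weights = [[1, 1], [-2, 0], [0, 0], [-1, -3], [2, -1], [0, 2]]
biases = [0, 0, -1, 0, 0, -1]

probs, predicted = predict_genres(film_vec, weights, biases)
print(predicted)  # ['action', 'science fiction']
```

Independent sigmoids, rather than a single softmax over genres, are what allow several genres to exceed the threshold at once.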

The group also interpreted these models to determine which musical features were most indicative of differences between genres. The models didn’t give specifics as to which types of notes or instruments were associated with each genre, but they were able to establish that tonal and timbral features were most important in predicting the film’s genre.
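The article does not say which interpretation method the team used. Permutation importance is one widely used, model-agnostic way to ask which input features a classifier relies on: shuffle one feature across examples and measure how much accuracy drops. The sketch below applies it to a hypothetical stand-in model that only looks at its first feature; the data, the model, and the function names are all illustrative assumptions.

```python
import random


def accuracy(model, X, y):
    """Fraction of examples where the model's prediction equals the label."""
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when one feature column is shuffled across examples."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [x[j] for x in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            X_perm = [x[:j] + [v] + x[j + 1:] for x, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(x):
    """Hypothetical stand-in classifier that only looks at feature 0."""
    return 1 if x[0] > 0.5 else 0


X = [[0.9, 0.3], [0.8, 0.7], [0.7, 0.1], [0.6, 0.9],
     [0.1, 0.4], [0.2, 0.8], [0.3, 0.2], [0.4, 0.6]]
y = [1, 1, 1, 1, 0, 0, 0, 0]

importances = permutation_importance(toy_model, X, y)
print(importances)  # second entry is 0.0: the model ignores feature 1
```

A method like this ranks features by how much the model depends on them, but on its own it does not say which notes or instruments drive a prediction, which matches the kind of limitation the paragraph above describes.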

“Laying this groundwork is really exciting because we can now be more precise in the kinds of questions that we want to ask about how music is used in film,” said Knox. “The overall film experience is very complicated and being able to computationally analyze its impact and the choices and trends that go into its construction is very exciting.”

Future Directions

Narayanan and his team examined the auditory information from each film using a technology known as audio fingerprinting, the same technology that enables services like Shazam to identify songs from a database by listening to recordings, even when sound effects or other background noise are present. This technology allowed them to determine where musical cues occur in a film and how long they last.
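The landmark-hashing idea behind Shazam-style fingerprinting can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the team’s pipeline: it assumes spectral peaks have already been picked from a spectrogram and are given as (time frame, frequency bin) pairs, pairs each peak with a few later peaks to form hashes, and locates a known track in the film audio by voting on the time offset between matching hashes. The span of matched frames gives a rough idea of where the cue sits and how long it lasts. All function names and parameters (`fan_out`, `max_dt`, `min_votes`) are hypothetical.

```python
from collections import Counter, defaultdict


def landmark_hashes(peaks, fan_out=3, max_dt=50):
    """Pair each peak with up to fan_out later peaks; hash = (f1, f2, dt), tagged with anchor time."""
    peaks = sorted(peaks)
    hashes = []
    for i, (t1, f1) in enumerate(peaks):
        paired = 0
        for t2, f2 in peaks[i + 1:]:
            dt = t2 - t1
            if dt == 0:
                continue  # skip peaks in the same time frame
            if dt > max_dt or paired >= fan_out:
                break
            hashes.append(((f1, f2, dt), t1))
            paired += 1
    return hashes


def build_index(reference_peaks):
    """Map each hash of the reference track to the anchor times where it occurs."""
    index = defaultdict(list)
    for h, t in landmark_hashes(reference_peaks):
        index[h].append(t)
    return index


def find_offset(index, film_peaks, min_votes=3):
    """Vote on film-minus-reference time offsets; return (offset, votes, first, last) or None."""
    votes = Counter()
    hits = defaultdict(list)
    for h, t_film in landmark_hashes(film_peaks):
        for t_ref in index.get(h, []):
            offset = t_film - t_ref
            votes[offset] += 1
            hits[offset].append(t_film)
    if not votes:
        return None
    offset, count = votes.most_common(1)[0]
    if count < min_votes:
        return None
    # first/last are the earliest and latest matched anchor frames in the film.
    return offset, count, min(hits[offset]), max(hits[offset])


# Toy reference-track peaks as (time_frame, frequency_bin) pairs.
song = [(0, 10), (2, 25), (4, 17), (6, 30), (8, 12), (10, 22)]
# The same cue starting at frame 100 of the film, plus unrelated peaks.
film = [(t + 100, f) for t, f in song] + [(5, 40), (50, 8), (103, 33)]

index = build_index(song)
result = find_offset(index, film)
print(result)  # (100, 10, 100, 108)
```

Only hashes that line up at a consistent offset add to the winning vote, so the unrelated peaks contribute no matches; they can only displace some peak pairs, as the peak at frame 103 does here.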

“Using audio fingerprinting to listen to all of the audio from the film allowed us to overcome a limitation of previous film music studies, which usually just looked at the film’s entire soundtrack album without knowing if or when songs from the album appear in the film,” said Ma. In the future, the group is interested in taking advantage of this capability to study how music is used in specific moments in a film and how musical cues dictate how the narrative of the film evolves over its course.

“With the ever-increasing access to both film and music, it has never been more crucial to quantitatively study how this media affects us,” Greer said. “Understanding how music works in conjunction with other forms of media can help us devise better viewing experiences and make art that’s moving and impactful.”