Researchers have trained a robotic ‘chef’ to watch and learn from cooking videos and then recreate the dishes itself.

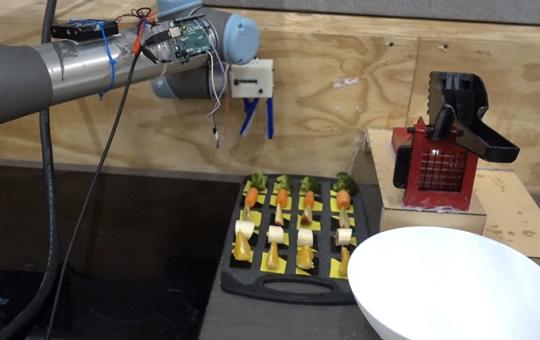

The researchers, from the University of Cambridge, programmed their robotic chef with a ‘cookbook’ of eight simple salad recipes. “After watching a video of a human demonstrating one of the recipes, the robot was able to identify which recipe was being prepared and make it,” according to the study.

In addition, the videos helped the robot incrementally add to its cookbook. “At the end of the experiment, the robot came up with a ninth recipe on its own,” the researchers explained. The results, reported in the journal IEEE Access, demonstrate how video content can be a valuable and rich source of data for automated food production, and could enable easier and cheaper deployment of robot chefs.

“Human cooks can learn new recipes through observation, whether that’s watching another person cook or watching a video on YouTube, but programming a robot to make a range of dishes is costly and time-consuming,” said Grzegorz Sochacki, the paper’s first author and a PhD candidate in Professor Fumiya Iida’s Bio-Inspired Robotics Laboratory at Cambridge.

To train the robot chef, the researchers devised eight simple salad recipes and filmed themselves making them. They then used a publicly available neural network to train the robot. “The neural network had already been programmed to identify a range of different objects, including the fruits and vegetables used in the eight salad recipes (broccoli, carrot, apple, banana and orange),” the researchers explained.

Using computer vision techniques, the robot analyzed each frame of video and was able to identify different objects and features, such as a knife and the ingredients, as well as the human demonstrator’s arms, hands, and face. “By correctly identifying the ingredients and the actions of the human chef, the robot could determine which of the recipes was being prepared,” the researchers stated.
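The sketch below illustrates the matching step in Python. It is not the study's method. It assumes the vision system has already reduced a video to a set of detected (action, ingredient) events. It then scores each recipe in a hypothetical cookbook by Jaccard overlap and picks the best match. The recipe names, event labels and scoring rule are all illustrative assumptions.

```python
from typing import Dict, FrozenSet, Iterable, Tuple

# Hypothetical event label: (action, ingredient), e.g. ("chop", "apple").
Event = Tuple[str, str]

# Hypothetical cookbook: each recipe is the set of (action, ingredient) steps it involves.
COOKBOOK: Dict[str, FrozenSet[Event]] = {
    "apple_carrot": frozenset({("chop", "apple"), ("chop", "carrot")}),
    "banana_orange": frozenset(
        {("peel", "banana"), ("chop", "banana"), ("peel", "orange"), ("chop", "orange")}
    ),
    "broccoli_carrot": frozenset({("chop", "broccoli"), ("chop", "carrot")}),
}


def jaccard(a: FrozenSet[Event], b: FrozenSet[Event]) -> float:
    """Overlap between two event sets: |A & B| / |A | B|, or 0.0 if both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def identify_recipe(detected: Iterable[Event]) -> Tuple[str, float]:
    """Return the cookbook recipe whose steps best overlap the detected events, with its score."""
    observed = frozenset(detected)
    scores = {name: jaccard(observed, steps) for name, steps in COOKBOOK.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Only the apple-chopping step was detected; the carrot-chopping step was missed.
print(identify_recipe([("chop", "apple")]))  # ('apple_carrot', 0.5)
```

The score rewards overlap and does not require a perfect match. A video with some undetected actions can therefore still point to the right recipe, which is the kind of tolerance the study's figures suggest.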

Of the 16 videos it watched, the robot recognized the correct recipe 93% of the time, even though it detected only 83% of the human chef’s actions. The robot was also able to detect variations in a recipe, such as a double portion or ordinary human error, without treating them as new recipes. Moreover, it recognized a new, ninth salad recipe, added it to its cookbook, and successfully made it.

“It’s amazing how much nuance the robot was able to detect,” said Sochacki. “These recipes aren’t complex – they’re essentially chopped fruits and vegetables, but it was really effective at recognizing, for example, that two chopped apples and two chopped carrots is the same recipe as three chopped apples and three chopped carrots.”
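One simple way to capture the equivalence Sochacki describes is to compare ingredient proportions rather than raw counts. The sketch below is an illustrative assumption, not the researchers' algorithm. The `Cookbook` class, its `tolerance` of 0.1 and the total-variation distance are all hypothetical choices. If a dish's proportions are not close to any stored recipe, the sketch adds it as a new one, loosely mirroring how the robot extended its cookbook.

```python
from collections import Counter
from typing import Dict, Optional

Ratios = Dict[str, float]


def proportions(counts: Counter) -> Ratios:
    """Turn ingredient counts into fractions of the whole, so portion size drops out."""
    total = sum(counts.values())
    return {item: n / total for item, n in counts.items()} if total else {}


def distance(p: Ratios, q: Ratios) -> float:
    """Total variation distance: 0.0 for identical proportions, 1.0 for disjoint ingredients."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


class Cookbook:
    """Hypothetical cookbook that stores each recipe as ingredient proportions."""

    def __init__(self, tolerance: float = 0.1) -> None:
        # Hypothetical tolerance: how far proportions may drift before a dish counts as new.
        self.tolerance = tolerance
        self.recipes: Dict[str, Ratios] = {}

    def add(self, name: str, counts: Counter) -> None:
        self.recipes[name] = proportions(counts)

    def match_or_add(self, counts: Counter) -> str:
        """Return the closest known recipe, or store the dish as a new recipe if none is close enough."""
        observed = proportions(counts)
        best: Optional[str] = None
        best_dist = float("inf")
        for name, ratios in self.recipes.items():
            d = distance(observed, ratios)
            if d < best_dist:
                best, best_dist = name, d
        if best is not None and best_dist <= self.tolerance:
            return best
        new_name = f"recipe_{len(self.recipes) + 1}"
        self.recipes[new_name] = observed
        return new_name


book = Cookbook()
book.add("apple_carrot", Counter(apple=2, carrot=2))
book.add("banana_orange", Counter(banana=1, orange=1))
print(book.match_or_add(Counter(apple=3, carrot=3)))     # apple_carrot
print(book.match_or_add(Counter(broccoli=2, carrot=1)))  # recipe_3 (stored as new)
```

Two apples with two carrots and three apples with three carrots both normalize to half apple and half carrot, so the distance between them is zero. A dish with an unfamiliar mix exceeds the tolerance and is filed as a new recipe.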

The researchers highlighted that the robot was not designed to follow the fast-paced, visually impressive food videos popular on social media. “Our robot isn’t interested in the sorts of food videos that go viral on social media – they’re simply too hard to follow,” Sochacki explained. “But as these robot chefs get better and faster at identifying ingredients in food videos, they might be able to use sites like YouTube to learn a whole range of recipes.”

The research was supported in part by Beko plc and the Engineering and Physical Sciences Research Council (EPSRC), part of UK Research and Innovation (UKRI).