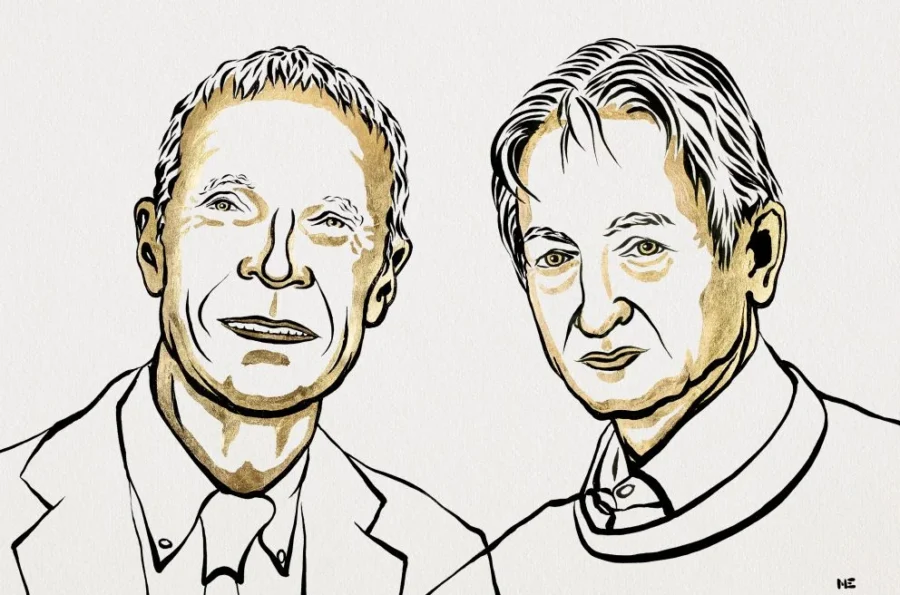

Two researchers have been awarded the 2024 Nobel Prize in Physics for their foundational work in machine learning and artificial neural networks. John J. Hopfield, a physicist at Princeton University, and Geoffrey E. Hinton, a computer scientist at the University of Toronto, were recognized for applying physics principles to create machine learning techniques that underpin much of today’s artificial intelligence technology.

Summary: Hopfield and Hinton honored for using physics to develop machine learning methods that form the basis of modern AI systems.


The Royal Swedish Academy of Sciences announced today that it has awarded the 2024 Nobel Prize in Physics to John J. Hopfield and Geoffrey E. Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks.”

Hopfield and Hinton’s work in the 1980s laid the groundwork for the explosive growth of machine learning and artificial intelligence that we see today. By applying concepts from physics to computer science, they created new ways for machines to learn patterns and make decisions, paving the way for technologies like image recognition, natural language processing, and autonomous systems.

From Brain to Machine: The Rise of Artificial Neural Networks

Artificial neural networks, the backbone of many modern AI systems, take inspiration from the structure of the human brain. In these networks, artificial neurons (represented by nodes with different values) are connected by adjustable links that mimic synapses in the brain. As the network is trained on data, these connections are strengthened or weakened, allowing the system to learn and adapt.
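To make the idea of adjustable connections concrete, here is a minimal sketch of a single artificial neuron learning the logical OR function. It is an illustration only: the perceptron update rule, the learning rate, and all function names are assumptions chosen for this example and are not taken from the laureates’ work.

```python
# Hypothetical single-neuron example; names and settings are illustrative.

def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs is above zero, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(samples, epochs=20, rate=0.1):
    """Adjust the connection weights with the classic perceptron rule."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Strengthen or weaken each connection in proportion to the error.
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias


# Toy training data: the logical OR of two binary inputs.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train(OR_SAMPLES)
```

After training, `predict(weights, bias, (0, 1))` should agree with the OR table. Real networks chain many such units together, but the principle of nudging connection strengths in response to data is the same.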

Hopfield’s major contribution came in the form of an innovative network design that could store and recreate patterns, such as images. His approach drew on the physics used to describe how atomic spins in a material influence one another. The Hopfield network assigns an energy-like value to each overall state of its nodes, and training sets the connection strengths so that saved patterns have low energy and are therefore stable.
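For readers who want the formula, the energy of a Hopfield network is commonly written in the form below. The notation is an assumption introduced here and does not come from the Academy’s announcement: $s_i$ is the value of node $i$ (either $+1$ or $-1$) and $w_{ij}$ is the strength of the connection between nodes $i$ and $j$.

$$
E = -\frac{1}{2} \sum_{i \neq j} w_{ij}\, s_i s_j
$$

With positive $w_{ij}$, two nodes that agree lower the energy and two that disagree raise it, which is the same bookkeeping physicists use for interacting spins.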

“When the Hopfield network is fed a distorted or incomplete image, it methodically works through the nodes and updates their values so the network’s energy falls,” explains the Royal Swedish Academy of Sciences. This process allows the network to reconstruct complete images from partial or corrupted inputs, mimicking the brain’s ability to recall memories from fragmentary cues.
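The recall process can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Hopfield’s original formulation: it stores one pattern of +1 and -1 values with a Hebbian rule, corrupts two nodes, and then updates the nodes one at a time. The function names and the eight-node pattern are hypothetical.

```python
# Minimal Hopfield-style sketch; the Hebbian storage rule is assumed here.

def train_hopfield(patterns):
    """Build a symmetric weight matrix so stored patterns have low energy."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def energy(weights, state):
    """Energy-like value of a state; lower means more stable."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def recall(weights, state, sweeps=5):
    """Visit the nodes in turn, setting each to the sign of its input."""
    state = list(state)
    for _ in range(sweeps):
        for i in range(len(state)):
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            state[i] = 1 if field >= 0 else -1
    return state


stored = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])

noisy = list(stored)
noisy[0] = -noisy[0]  # corrupt two of the eight nodes
noisy[3] = -noisy[3]

restored = recall(weights, noisy)
```

In this toy case `restored` matches `stored`, and `energy(weights, restored)` is lower than `energy(weights, noisy)`, mirroring the falling energy described in the quote. Networks that store many patterns can settle into the wrong one if the patterns are too similar or too numerous.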

Building on Foundations: The Boltzmann Machine

Geoffrey Hinton built upon Hopfield’s work, developing a new type of network called the Boltzmann machine. This innovation brought tools from statistical physics into the realm of machine learning, allowing networks to autonomously discover important features in data.

Hinton’s Boltzmann machine can be trained to recognize characteristic elements in various types of data. This capability makes it useful for tasks like image classification or generating new examples of patterns it has learned. The Boltzmann machine’s ability to extract meaningful features from complex data sets has proven invaluable in numerous applications, from computer vision to natural language processing.
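The statistical-physics flavor of the Boltzmann machine shows up in how it settles on a state: instead of always moving downhill in energy, each node switches on or off with a probability that depends on the energy difference. The sketch below illustrates only this sampling step for a two-node toy network. The weights, temperature, and function names are assumptions made for the example, and the learning procedure that adjusts the weights is omitted.

```python
import math
import random

# Toy Boltzmann-machine sampler; all values here are illustrative.

def gibbs_step(weights, biases, state, temperature, rng):
    """Resample every node once, given the current values of the others."""
    n = len(state)
    for i in range(n):
        field = biases[i] + sum(weights[i][j] * state[j]
                                for j in range(n) if j != i)
        # Probability that node i takes the value +1 under the
        # Boltzmann distribution for nodes valued +1 or -1.
        p_on = 1.0 / (1.0 + math.exp(-2.0 * field / temperature))
        state[i] = 1 if rng.random() < p_on else -1
    return state


def sample(weights, biases, steps=2000, temperature=1.0, seed=0):
    """Run the sampler and count how often each state is visited."""
    rng = random.Random(seed)
    state = [rng.choice([-1, 1]) for _ in biases]
    counts = {}
    for _ in range(steps):
        gibbs_step(weights, biases, state, temperature, rng)
        key = tuple(state)
        counts[key] = counts.get(key, 0) + 1
    return counts


# Two nodes joined by a positive weight: agreeing states have lower energy.
WEIGHTS = [[0.0, 1.5], [1.5, 0.0]]
BIASES = [0.0, 0.0]
counts = sample(WEIGHTS, BIASES)
aligned = counts.get((1, 1), 0) + counts.get((-1, -1), 0)
```

The low-energy states, where the two nodes agree, are visited far more often than the high-energy ones, yet the network still occasionally wanders into the less likely states. Training a Boltzmann machine amounts to adjusting the weights so that the states it visits most often resemble the training examples.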

Impact Beyond Computer Science

The work of Hopfield and Hinton has had far-reaching implications across multiple scientific disciplines. Ellen Moons, Chair of the Nobel Committee for Physics, highlighted this impact, stating, “The laureates’ work has already been of the greatest benefit. In physics we use artificial neural networks in a vast range of areas, such as developing new materials with specific properties.”

The techniques developed by Hopfield and Hinton have found applications in fields as diverse as drug discovery, climate modeling, and astronomical data analysis. Their work demonstrates the power of interdisciplinary research, showing how concepts from one scientific domain can revolutionize another.

Looking to the Future

As artificial intelligence continues to advance at a rapid pace, the foundational work of Hopfield and Hinton remains highly relevant. Their contributions have enabled the development of increasingly sophisticated AI systems that can process vast amounts of data, recognize complex patterns, and make decisions on tasks that once required human judgment.

The Nobel Prize recognition underscores the importance of basic research in driving technological innovation. As we look to the future, it’s clear that the principles established by these laureates will continue to shape the evolution of machine learning and artificial intelligence for years to come.

Quiz

- What physical concept did John Hopfield use as inspiration for his neural network design?

- What type of network did Geoffrey Hinton develop based on Hopfield’s work?

- According to Ellen Moons, in what area of physics are artificial neural networks being used?

Answers:

- Atomic spin in materials

- The Boltzmann machine

- Developing new materials with specific properties


Glossary of Terms

- Artificial Neural Network: A computing system inspired by biological neural networks, consisting of interconnected nodes that process and transmit information.

- Hopfield Network: A type of recurrent artificial neural network capable of storing and reconstructing patterns.

- Boltzmann Machine: A type of stochastic recurrent neural network co-invented by Geoffrey Hinton and Terrence Sejnowski, capable of learning internal representations.

- Machine Learning: A subset of artificial intelligence that focuses on the development of algorithms and statistical models that enable computer systems to improve their performance on a specific task through experience.

- Statistical Physics: A branch of physics that uses probability theory to study the behavior of systems composed of a large number of particles.

- Synapses: The structures that allow neurons to pass signals to other neurons in biological neural networks.
