Engineers at the University of California, San Diego, have developed a new family of methods that significantly speed up time-resolved numerical simulations of computational grand challenge problems. Such problems often arise from high-resolution approximations of the partial differential equations governing complex flows of fluids or plasmas. The breakthrough could be applied to simulations with millions or billions of variables, such as simulations of turbulence.

Modern computers are generally built from commodity hardware developed for serving and surfing the web. When applied to cutting-edge problems in scientific computing, computers built from such general-purpose hardware usually spend most of their time moving data around in memory, while the hardware dedicated to floating-point computations (that is, the actual addition and multiplication of numbers) sits idle most of the time. Because the new schemes developed at UC San Diego have a small memory footprint, numerical problems of a given size will run much faster on a given computer, and even larger numerical problems become feasible.
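To make the idea of a small memory footprint concrete, the Python sketch below implements a classical low-storage Runge-Kutta scheme: Williamson's three-stage, third-order "2N-storage" method. It is not one of the UC San Diego IMEXRK schemes; it only illustrates how a Runge-Kutta method can be arranged so that, however many stages it has, it keeps two solution-sized arrays (`y` and `dq`) in memory rather than one array per stage. The function name `low_storage_rk3` and the decay test problem are illustrative assumptions.

```python
import numpy as np

# Williamson (1980) 2N-storage coefficients for a 3-stage, 3rd-order RK scheme.
A = (0.0, -5.0 / 9.0, -153.0 / 128.0)
B = (1.0 / 3.0, 15.0 / 16.0, 8.0 / 15.0)

def low_storage_rk3(f, y, h, steps):
    """Integrate the autonomous ODE dy/dt = f(y) with two solution-sized registers.

    Hypothetical helper for illustration. f(y) allocates a temporary here for
    brevity; a production code would accumulate f directly into dq.
    """
    y = np.array(y, dtype=float)  # register 1: the solution (copied, updated in place)
    dq = np.zeros_like(y)         # register 2: the running stage increment
    for _ in range(steps):
        for a, b in zip(A, B):
            dq *= a               # reuse dq in place; a = 0 resets it on stage 1
            dq += h * f(y)
            y += b * dq
    return y

# Decay problem dy/dt = -y on [0, 1]; the exact answer is exp(-1).
y1 = low_storage_rk3(lambda y: -y, [1.0], h=0.01, steps=100)
print(y1[0], np.exp(-1.0))
```

Halving the step size should cut the error by roughly a factor of eight, as expected for a third-order method, while the memory used stays at two arrays regardless of the number of stages.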

“Moving information around in memory is the bottleneck in almost all large-scale numerical simulations today,” said Thomas Bewley, a mechanical engineering professor who leads the Flow Control Lab at the Jacobs School of Engineering at UC San Diego. “The remarkable feature of the new family of schemes developed in this work is that they require significantly less memory in the computer for a given size simulation problem than existing high-order methods of the same class, while providing excellent numerical stability, accuracy, and computational efficiency.”

Complex systems such as flows of fluids and plasmas generally evolve as a result of a combination of physical effects, such as diffusion and convection. Some of these effects, like diffusion, are linear and involve higher-order spatial derivatives; as a result, they span a large range of characteristic time scales and are referred to as “stiff.” These terms are best handled with “implicit” methods, which require solving a large system of simultaneous equations at each timestep using matrix algebra or iterative solvers. Other effects, like convection, are nonlinear and involve lower-order spatial derivatives; they span a smaller range of characteristic time scales and are referred to as “nonstiff.” These terms are most easily handled with “explicit” methods, which compute the new value of each unknown directly from values that are already known, without solving a coupled system. If the stiff terms are treated with explicit methods, their fastest time scales impose a severe restriction on the timestep, which slows the simulation; if the nonstiff terms are treated with implicit methods, their nonlinearity forces the use of complex and computationally expensive iterative solvers.
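The timestep restriction on explicit methods can be seen in a small experiment. The Python sketch below uses a toy problem chosen for illustration, not anything from the paper: it discretizes the diffusion equation `u_t = nu * u_xx` on a periodic grid and advances it with explicit (forward) Euler and implicit (backward) Euler at a timestep four times larger than the explicit stability limit `dt <= dx**2 / (2 * nu)`. The grid size, `nu`, the initial condition, and all function names are assumptions.

```python
import numpy as np

def periodic_laplacian(n, dx):
    """Second-order central-difference d^2/dx^2 on a periodic grid (the stiff term)."""
    L = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    L[0, -1] = L[-1, 0] = 1.0  # periodic wrap-around couples the end points
    return L / dx**2

def explicit_euler(u, L, nu, dt, steps):
    """Forward Euler: u_new = u + dt*nu*L u, no linear solve needed."""
    for _ in range(steps):
        u = u + dt * nu * (L @ u)
    return u

def implicit_euler(u, L, nu, dt, steps):
    """Backward Euler: solve (I - dt*nu*L) u_new = u at every step."""
    A = np.eye(len(u)) - dt * nu * L
    for _ in range(steps):
        u = np.linalg.solve(A, u)
    return u

n, nu = 64, 1.0
dx = 2.0 * np.pi / n
x = np.arange(n) * dx
# A smooth mode plus a tiny alternating (zigzag) mode, the stiffest one on this grid.
u0 = np.sin(x) + 1e-3 * (-1.0) ** np.arange(n)
L = periodic_laplacian(n, dx)
dt = 4 * dx**2 / (2 * nu)  # four times the explicit stability limit

u_exp = explicit_euler(u0, L, nu, dt, steps=50)  # zigzag mode is multiplied by -7 each step
u_imp = implicit_euler(u0, L, nu, dt, steps=50)  # every mode is damped
print(np.abs(u_exp).max(), np.abs(u_imp).max())
```

Backward Euler stays stable here for any timestep, which is why stiff terms are handed to implicit methods; the price is a linear solve per step, done with a dense `np.linalg.solve` purely for brevity.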

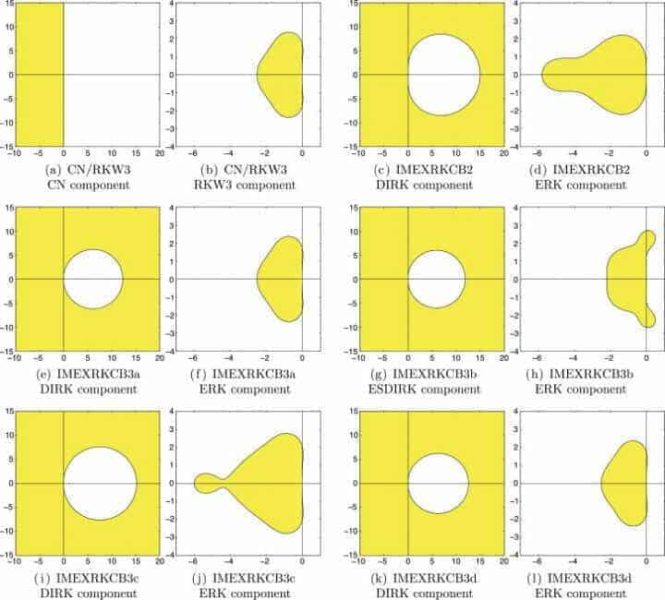

The new “implicit/explicit,” or IMEX, time-marching schemes developed at UC San Diego therefore marry two algorithms for time-resolved simulation, both of the standard “Runge-Kutta” or RK form. The implicit algorithm is applied to the stiff terms of the problem, and the explicit algorithm is applied to the nonstiff terms. Each algorithm is endowed with good numerical properties, such as excellent stability and high accuracy, and, notably, the two maintain this high accuracy when working in concert. The compatible pairs of methods so developed are known as IMEXRK schemes.
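The simplest member of this family is first-order IMEX Euler (sometimes called forward-backward Euler), which pairs forward Euler for the nonstiff terms with backward Euler for the stiff ones. The Python sketch below applies it to the viscous Burgers equation `u_t + (u**2 / 2)_x = nu * u_xx`, a standard model problem chosen here for illustration: the nonlinear convection term is evaluated explicitly from known values, while the linear diffusion term is handled implicitly with one linear solve per step. It is not one of the high-order IMEXRK schemes from the paper, whose coefficients are not given here; an IMEXRK scheme applies the same split at every Runge-Kutta stage. Grid size, `nu`, timestep, and all function names are assumptions.

```python
import numpy as np

def periodic_laplacian(n, dx):
    """Second-order central-difference d^2/dx^2 on a periodic grid (stiff, linear)."""
    L = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    L[0, -1] = L[-1, 0] = 1.0  # periodic wrap-around
    return L / dx**2

def convection(u, dx):
    """Nonstiff, nonlinear term -d(u^2/2)/dx via periodic central differences."""
    flux = 0.5 * u**2
    return -(np.roll(flux, -1) - np.roll(flux, 1)) / (2.0 * dx)

def imex_euler(u, nu, dx, dt, steps):
    """First-order IMEX Euler: explicit convection, implicit diffusion."""
    A = np.eye(len(u)) - dt * nu * periodic_laplacian(len(u), dx)
    for _ in range(steps):
        rhs = u + dt * convection(u, dx)  # explicit step on the nonstiff term
        u = np.linalg.solve(A, rhs)       # implicit step on the stiff term
    return u

n, nu = 256, 0.1
dx = 2.0 * np.pi / n
x = np.arange(n) * dx
u0 = np.sin(x)
dt = 0.01  # about 3x the explicit diffusive limit dx**2 / (2 * nu)
u1 = imex_euler(u0, nu, dx, dt, steps=100)  # advance to t = 1
print(np.abs(u1).max(), np.sum(u1**2) / np.sum(u0**2))
```

Only the linear diffusion operator is ever inverted, so the matrix `A` is built once and no nonlinear iteration is needed, while the timestep is no longer tied to the diffusive limit on `dx**2`.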

“Searching for the right combination of the dozens of parameters that make these new IMEXRK algorithms work well was like finding a needle in a haystack, and required a tedious search over a very large parameter space, combined with the delicate codification of various numerical intuition to simplify the search. It took almost one year to complete,” said Daniele Cavaglieri, a Ph.D. student and co-author of the paper.

Researchers describe the new methods in the January 2015 issue of the Journal of Computational Physics.