Army researchers are improving how computers manage a myriad of images, which will help analysts across the DOD intelligence community.

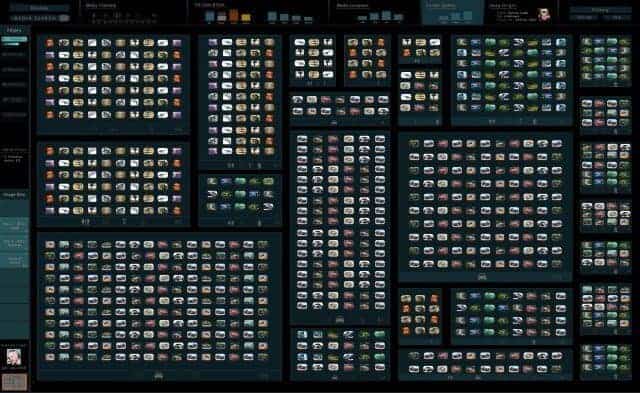

Dr. Jeff Hansberger of the U.S. Army Research Laboratory designed and built a new user interface for the Defense Advanced Research Projects Agency, or DARPA, that facilitates the visualization, navigation and manipulation of tens of thousands of images.

Hansberger works at the Human Research and Engineering Directorate field element of the U.S. Army Research Laboratory, or ARL, at Redstone Arsenal in Alabama. DARPA selected his design earlier this year for its Visual Media Reasoning, or VMR, program.

“That is quite an achievement for Jeff and another example of our superior science and engineering staff and the great work we are doing for the Soldier,” said Dr. Thomas Russell, ARL director.

The DARPA VMR system aids intelligence analysts in searching, filtering, and exploring visual media through the use of advanced computer vision and reasoning techniques.

“The goal of DARPA’s VMR program is to extract mission-relevant information, such as the who, what, where and when, from visual media captured from our adversaries and to turn unstructured, ad hoc photos and video into true visual intelligence,” Hansberger said. “Our adversaries frequently use video, still and cell phone cameras to document their training and operations and occasionally post this content to widely available websites. The volume of this visual media is growing rapidly and is quickly outpacing our ability to review, let alone analyze, the contents of every image.”

Hansberger, who has been with ARL for 13 years, initially joined the DARPA VMR project to lead the intelligence analyst user group, assessing the analysts' needs and requirements and communicating them back to the VMR developers. Through interviews, site visits to the analysts' work environments and task analyses, he said, he began to identify the fundamental components of their task and to understand the biggest challenges they faced.

“I discovered that like many people in other domains, the Intel analysts we had interacted with also adhered to what is called the ‘visual information seeking mantra,’ which is that people looking within and for visual information prefer to have 1) an overview first, then 2) zoom and filter through the information and retrieve 3) details on demand,” Hansberger said. “Based on this knowledge and my past experience of tailoring information and data visualizations for users, I took the initiative to create a user interface for VMR.”

Because Hansberger wanted the design to be driven entirely by the analysts, their task and the information they work with, he said he initially set aside the specific technology constraints of any particular organization. Instead, he began by exploring how people naturally interact with collections of physical photographs and how computers have attempted to replicate or reinterpret the viewing and manipulation of images and image collections.

“Currently, the most effective and alluring way to interact with digital images is through touchscreen technology, primarily because it shares some relationship to the ways we interact with physical images. Interacting with images through a touchscreen monitor allows direct manipulation such as Apple’s pinch zoom (similar to pulling a photograph closer to our eyes to zoom and get details on demand), and the ability to organize and arrange images in a large digital space that mimics a physical space (similar to creating an album or image collage),” Hansberger said. “Touchscreen technology and the use of zooming, similar to how Google Maps uses zooming across physical spaces, was therefore selected as a primary design element of the user interface.”

Later in the development of VMR, Hansberger redesigned the touchscreen user interface for use with a traditional keyboard and mouse so that organizations could adopt the system immediately without having to purchase new hardware.

“With this revised design, I was invited along with two other competing user interface design teams to create a prototype to present to the VMR program manager and several organizations of the Intel community. In less than three months, two undergraduate students from the local university, the University of Alabama in Huntsville, and I created not one, but two working prototypes: the touchscreen and the keyboard and mouse versions of my design,” said Hansberger. “It felt like we were underdogs in a sense, creating these prototypes with less experienced programmers in less time than our competitors. A lot of credit goes out to the two students on the team, Chris Bero and Jared McArthur, who juggled their normal coursework with the effort to create very convincing prototypes. After a successful demonstration of the prototypes, one analyst said, ‘I would take that interface up to my desk today and start using it.’”

Undergraduate student Chris Bero said he gained a real appreciation for user interface research during his involvement with the project.

“Working on this project for Dr. Hansberger has been an amazing opportunity to dive into new programming languages and further my knowledge of others. The ‘keyboard and mouse’ prototype explored a subset of Dr. Hansberger’s incredible system for tackling visual data representation; while working on it I found myself learning an entirely new scripting language to pair with formatting markup I first used in high school,” Bero said. “Through this program I’ve also gained an appreciation for the vibrant and essential space of user interface research, where the titan task of innovating beyond the status quo while retaining intuitive design is addressed.”

Hansberger said the user interface he developed provides two main benefits: speed and support for pattern detection. On speed, he said most failed interfaces, and most user frustration, stem from forcing users to navigate too broadly or too deeply. Users get lost in the menus, are forced to build elaborate mental maps of the hierarchy, and spend valuable time navigating instead of completing their task. A very flat interaction hierarchy that relies on zooming for navigation saves a great deal of that time and frustration. Computer models of intelligence analysts using the new touchscreen interface predict significant time savings compared with their current user interface.

For pattern detection support, Hansberger said one of the great strengths of human cognition is the ability to detect patterns within information and data. “Part of my job is to design for those cognitive strengths, and in the case of Intel analysts, it’s to facilitate their ability to visualize and detect patterns,” Hansberger said. “To do that, we created what I call visual diagrams made from the images themselves to highlight patterns and relationships across the attributes that analysts focus on, such as time and the physical location where the images were taken.”

Hansberger said, “The more care and effort we spend in understanding the questions users will be asking and how we can craft the information in the interface to answer those questions, the easier their job will be. In the end, that’s what it’s all about; I try to put more effort in the design of the information and interface so the Soldier spends less time thinking about the software and more time accomplishing their mission.”

When he’s not building and designing things from pixels at work, the married father of two said he enjoys building things with more natural materials such as wood and especially stone, and lately he has been exploring 3-D printing with his son and daughter. He said he also savors exploring and experiencing new places with his family.