Plagiarism, reprinting another person’s work word-for-word without credit, is a firing offense in most workplaces and can easily get a college student suspended or even expelled from school.

But what about so-called ‘self-plagiarism,’ reusing your own words?

It depends, says a group of scholars who have spent years studying text recycling in STEM fields and developing clear guidelines specifically for researchers.

“There’s nothing clear and authoritative out there,” said Duke professor of the practice Cary Moskovitz, leader of the multi-institution, National Science Foundation-funded project, and director of writing in the disciplines at Duke’s Thompson Writing Program.

Each field and each scientific journal seems to have its own, often unwritten, rules about text recycling. “There was no established standard for practice, no central authority and a lot of underlying consternation,” Moskovitz said. “It’s the hangnail of publishing ethics.”

Drawing on their extensive research, and with input from experts in publishing and research ethics, the Text Recycling Research Project has now produced the sort of guidance authors and editors have been craving.

On June 25, the group released its first official TRRP documents: a set of Best Practices for Researchers, two guides to understanding text recycling (one for researchers and one for editors), and a white paper on text recycling in research writing under U.S. copyright law. The group is currently working on a set of model text recycling policies for publishers.

The group’s bottom line is that it’s likely okay to reuse some of the methods, and perhaps some of the introduction, in a scientific paper, but “the results and the discussion should be fresh,” Moskovitz said. And transparency—with editors and readers—is key.

Other commonly accepted practices include reprising some of the background written for a grant proposal in a paper resulting from the grant and reusing material from a conference poster in a journal article.

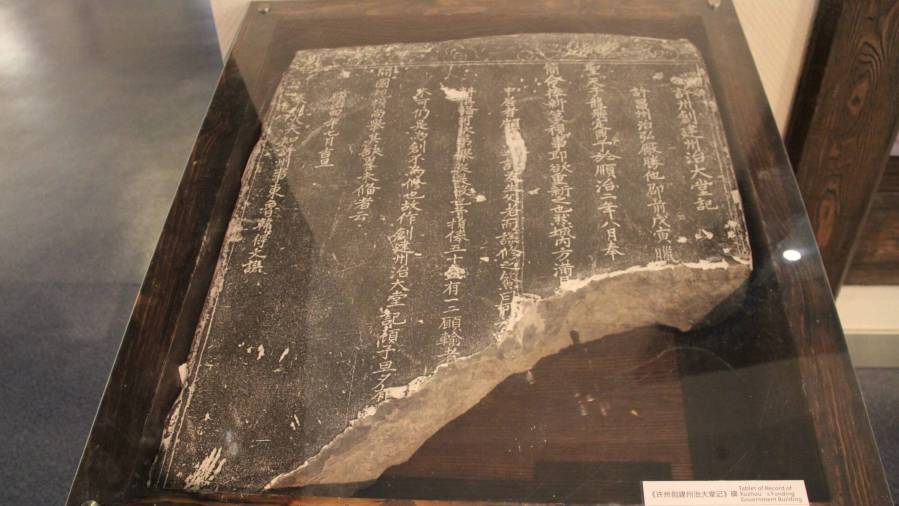

Plagiarism probably goes back to clay tablets, but text analysis software available today makes it much easier to find repeated material across time and space. A search for ‘self-plagiarism’ on the web turns up reams of dire warnings. Many of these warnings come from the makers of plagiarism-checker software that professors use to scan term papers, such as Turnitin, quetext and iThenticate, and they amount to a zero-tolerance policy.

But in a March article in Inside Higher Ed, Moskovitz and co-author Aaron Colton, a lecturer in the Thompson Writing Program, single out a hard-line and highly influential “white paper” published by iThenticate in 2011 that they say is “a misrepresentation of the realities and ethics of academic research – and a guide that leads to worse, not better, writing.”

In response to the essay, the head of marketing for iThenticate committed to taking down the white paper and reworking the company’s approach to text recycling.

A zero-tolerance policy against text recycling doesn’t serve the needs of science or scientists very well, Moskovitz argues. There is an important distinction between a research team repeating parts of its own literature review for the sake of accuracy and efficiency and an unethical scientist cutting and pasting from the work of other authors.

For example, the methods section of a science paper is the recipe part: ‘We added this amount of substance 1 to a beaker of substance 2, added 15 nanoliters of this special enzyme, baked it for 90 minutes at 35 degrees Celsius, and then spun it in a centrifuge.’ The recipe is precise, so why not repeat it word-for-word to ensure accuracy from one paper to the next? What would be the point of scrambling the order of elements and substituting a synonym or two to avoid the appearance of copying?

The Text Recycling Research Project’s guidance is based in part on survey research about the beliefs and attitudes of editors and editorial boards, in-depth interviews with about two dozen academic editors, and a survey of expert and novice researchers in STEM disciplines.

The team also examined published papers with their own text analysis software to better understand what recycling actually looks like in journals today. “It is rare to find papers with no recycling,” Moskovitz said.

The project also examines text recycling under copyright and contract law. Having found only one paper on the topic, the researchers are doing their own legal analysis. David Hansen, Director of Copyright and Scholarly Communications for Duke Libraries, and Mitch Yelverton, Senior Research Agreements Manager with the Duke University School of Medicine, are collaborating on the legal aspects. Additional white papers, on contract law and international copyright law, are underway.

To help clarify what is and what isn’t appropriate practice, the researchers found that they had to develop a new taxonomy of text recycling. They came up with four kinds:

“Developmental Recycling” includes reusing some text from a grant proposal or conference poster in a journal article. This one’s usually okay.

“Generative Recycling” is when parts of a published piece are used to make a new piece. This may be okay, but it depends on the nature of the material (for example, whether it is methods or results) and on how much is reused.

“Adaptive Publishing” includes translating one’s published research paper into another language or reusing the main content of an article in a book chapter. This might be okay, so long as the author gets permission from the copyright holder and the new publisher knowingly agrees.

Category four is “Duplicate Publishing,” republishing something that is essentially the same as a work you’ve already published. That’s just not allowed.

To learn more, visit the Text Recycling Research Project.