As artificial intelligence reshapes the landscape of biological research, scientists are sounding the alarm on potential biosecurity risks. A new policy forum published in Science highlights the urgent need for improved governance and safety evaluations of AI models in biology.

Doni Bloomfield and colleagues argue that current voluntary safety measures fall short of addressing the dual-use nature of advanced biological AI models. These powerful tools, capable of accelerating drug discovery and enhancing crop resilience, could also be misused to create dangerous pathogens.

The Double-Edged Sword of Biological AI

Biological AI models offer tremendous potential across various fields. From speeding up vaccine development to improving agricultural yields, these tools promise to revolutionize how we approach complex biological challenges.

However, the same versatility that makes these models so valuable also poses significant risks. A system built to design viral vectors for gene therapy could, in the wrong hands, be used to engineer a novel pathogen capable of evading existing vaccines.

Dr. Jane Smith, a biosecurity expert not involved in the policy forum, explains: “The line between beneficial research and potential misuse is often blurry in biology. AI models amplify both the potential benefits and risks, making robust governance crucial.”

Calls for Mandatory Safety Evaluations

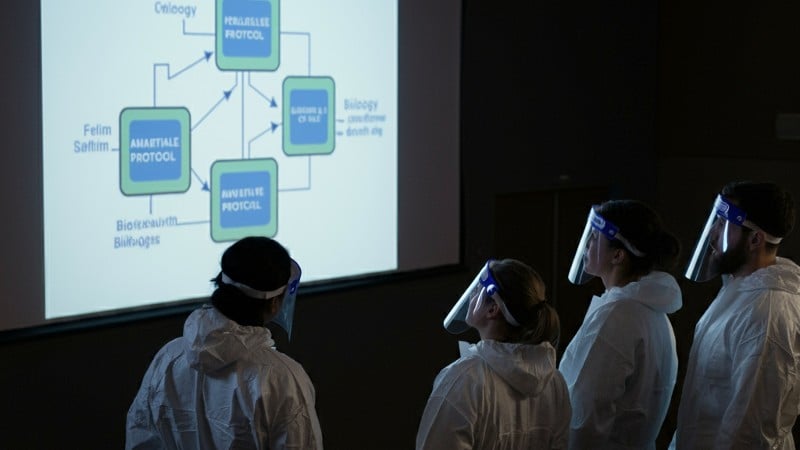

While some developers have committed to assessing the dual-use risks of their models, Bloomfield and his team argue that these voluntary measures are insufficient. They propose a more comprehensive approach:

1. National legislation mandating pre-release safety evaluations for advanced biological AI models

2. Standardized risk assessment frameworks based on existing dual-use research guidelines

3. Proxy tests to evaluate potential dangers without directly synthesizing harmful pathogens

4. Oversight of model weight releases to prevent unauthorized modifications

5. Responsible data sharing practices and restricted access to high-risk AI systems

“We propose that national governments, including the United States, pass legislation and set mandatory rules that will prevent advanced biological models from substantially contributing to large-scale dangers, such as the creation of novel or enhanced pathogens capable of causing major epidemics or even pandemics,” the authors write.

The team emphasizes that these policies should focus on high-risk, advanced AI models to avoid stifling beneficial research. By striking a balance between safety and scientific freedom, they aim to foster responsible innovation in the field.

Current policy measures, such as the White House Executive Order on AI and the Bletchley Declaration from the 2023 UK AI Safety Summit, represent steps in the right direction. However, the authors argue that a more unified and targeted approach is necessary to address the unique challenges posed by biological AI models.

Why it matters: As AI continues to transform biological research, the potential for misuse grows alongside its capabilities. Implementing robust governance measures now could help prevent catastrophic outcomes while still allowing scientists to harness these powerful tools for the benefit of humanity.