Researchers at Imperial College London have found that humans show sympathy towards, and try to protect, AI bots that are excluded from social interactions, even in a simple virtual ball game. This unexpected behavior sheds new light on how people perceive and interact with artificial intelligence agents.

Summary: A study using a virtual ball game reveals humans tend to treat AI agents as social beings, showing sympathy and protective behavior towards excluded AI bots. This finding has implications for AI design and human psychology.

In an era where artificial intelligence is becoming increasingly integrated into our daily lives, understanding how humans interact with AI is crucial. A recent study from Imperial College London has provided fascinating insights into this relationship, suggesting that humans may be more emotionally invested in AI interactions than previously thought.

The Cyberball Experiment

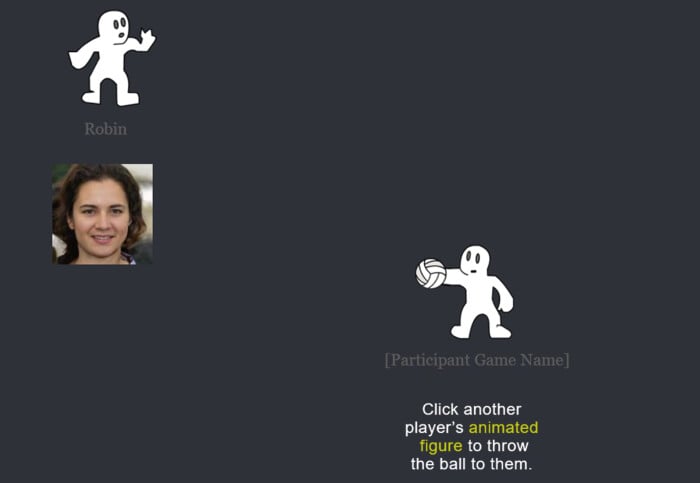

Researchers used a virtual ball game called “Cyberball” to observe how 244 human participants, aged 18 to 62, responded when they witnessed an AI bot being excluded from play by another human player. The game involves players passing a virtual ball to each other on-screen.

In some games, the non-participant human player threw the ball to the bot a fair share of the time. In others, that player blatantly excluded the bot by throwing the ball only to the participant. The researchers then observed and surveyed the participants to test whether they favored throwing the ball to the bot after it had been treated unfairly, and why.
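The two conditions described above can be sketched in a few lines of Python. This is only an illustrative toy model, not the researchers' software: the function names, the even split used for the "fair" condition, and the throw counts are all assumptions made for the example, and it produces no data from the study itself.

```python
import random

PARTICIPANT = "participant"
COPLAYER = "human co-player"
BOT = "bot"


def coplayer_throw(condition: str, rng: random.Random) -> str:
    """Pick who the non-participant human player throws to.

    Assumption: "fair" means an even split between participant and bot;
    "exclusion" means the bot never receives the ball from this player.
    """
    if condition == "fair":
        return rng.choice([PARTICIPANT, BOT])
    if condition == "exclusion":
        return PARTICIPANT
    raise ValueError(f"unknown condition: {condition!r}")


def bot_throws_received(condition: str, n_throws: int, seed: int = 0) -> int:
    """Count how many of the co-player's throws go to the bot."""
    rng = random.Random(seed)
    return sum(coplayer_throw(condition, rng) == BOT for _ in range(n_throws))


def share_to_bot(participant_targets: list[str]) -> float:
    """Fraction of a participant's throws that went to the bot."""
    if not participant_targets:
        raise ValueError("no throws recorded")
    return participant_targets.count(BOT) / len(participant_targets)
```

In this toy model, a participant with two possible targets who has no preference would send about half of their throws to the bot, so a `share_to_bot` value well above 0.5 in the exclusion condition would correspond to the compensating behavior the researchers were testing for.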

Lead author Jianan Zhou from Imperial’s Dyson School of Design Engineering explained the significance of the findings:

“This is a unique insight into how humans interact with AI, with exciting implications for their design and our psychology.”

Empathy for AI: A Human Trait?

The study’s results were striking. Most participants attempted to rectify the unfairness towards the bot by favoring throwing the ball to it when it had been excluded. This behavior mirrors how humans typically respond to ostracism among other humans, suggesting that people may instinctively treat AI agents as social beings.

Dr. Nejra van Zalk, senior author of the study, noted:

“Our results show that participants tended to treat AI virtual agents as social beings, because they tried to include them into the ball-tossing game if they felt the AI was being excluded. This is common in human-to-human interactions, and our participants showed the same tendency even though they knew they were tossing a ball to a virtual agent.”

Interestingly, this effect was more pronounced in older participants, indicating that age may play a role in how we perceive and interact with AI.

Implications for AI Design and Human-AI Interaction

The findings of this study have important implications for the design and implementation of AI agents. As AI becomes more prevalent in various aspects of our lives, from customer service chatbots to virtual assistants, understanding how humans perceive and interact with these agents is crucial.

The researchers suggest that developers should be cautious about designing AI agents to be overly human-like, as this could blur the lines between virtual and real interactions. This is particularly important in contexts where AI agents are used as companions or advisors on physical or mental health issues.

Jianan Zhou emphasized the need for tailored design approaches:

“By avoiding designing overly human-like agents, developers could help people distinguish between virtual and real interaction. They could also tailor their design for specific age ranges, for example, by accounting for how our varying human characteristics affect our perception.”

Future Research Directions

While the Cyberball experiment provides valuable insights, the researchers acknowledge that it may not fully represent how humans interact with AI in real-life scenarios. Typical interactions with AI often occur through written or spoken language with chatbots or voice assistants, which might elicit different responses.

To address this limitation, the team is now designing similar experiments using face-to-face conversations with agents in various contexts, such as laboratory settings and more casual environments. This approach will help test the extent to which their findings apply in more realistic scenarios.

As AI continues to evolve and integrate into our daily lives, studies like this one from Imperial College London provide crucial insights into the complex relationship between humans and artificial intelligence. By understanding how we perceive and interact with AI agents, we can better design and implement these technologies to serve human needs while maintaining clear boundaries between virtual and real-world interactions.

Quiz: Test Your Knowledge

- What game did the researchers use in their study?

- How did participants generally respond when they observed an AI bot being excluded?

- Which age group showed a stronger tendency to treat AI agents as social beings?

Answer Key:

- Cyberball

- They tried to include the AI bot by throwing the ball to it more frequently

- Older participants

Glossary of Terms

- AI virtual agents: Software systems that intelligently perform tasks on behalf of and at the request of humans

- Cyberball: A virtual ball-tossing game used in psychological experiments

- Ostracism: The act of excluding someone from a society or group

- Anthropomorphism: The attribution of human characteristics or behavior to non-human entities

- Human-AI interaction: The study of how humans engage and communicate with artificial intelligence systems
