University of California, Berkeley, scientists have proved a fundamental relationship between energy and time that sets a “quantum speed limit” on processes ranging from quantum computing and tunneling to optical switching.

The energy-time uncertainty relationship is the flip side of the Heisenberg uncertainty principle, which sets limits on how precisely you can measure position and speed, and has been the bedrock of quantum mechanics for nearly 100 years. It has become so well-known that it has infected literature and popular culture with the idea that the act of observing affects what we observe.

Not long after German physicist Werner Heisenberg, one of the pioneers of quantum mechanics, proposed his relationship between position and speed, other scientists deduced that energy and time were related in a similar way, implying limits on the speed with which systems can jump from one energy state to another. The most common application of the energy-time uncertainty relationship has been in understanding the decay of excited states of atoms, where the minimum time it takes for an atom to jump to its ground state and emit light is related to the uncertainty of the energy of the excited state.

“This is the first time the energy-time uncertainty principle has been put on a rigorous basis – our arguments don’t appeal to experiment, but come directly from the structure of quantum mechanics,” said chemical physicist K. Birgitta Whaley, director of the Berkeley Quantum Information and Computation Center and a UC Berkeley professor of chemistry. “Before, the principle was just kind of thrown into the theory of quantum mechanics.”

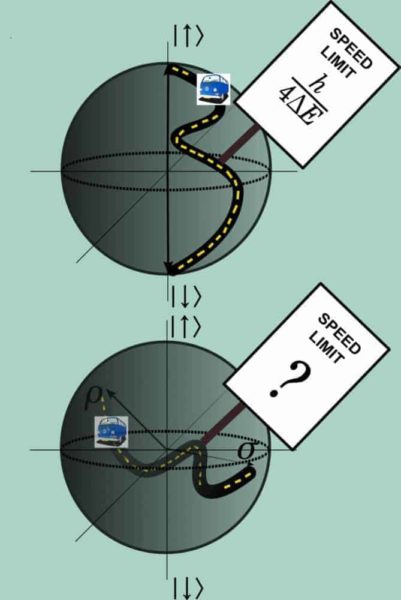

The new derivation of the energy-time uncertainty relationship applies to any measurement involving time, she said, particularly in estimating the speed with which certain quantum processes – such as calculations in a quantum computer – will occur.

“The uncertainty principle really limits how precise your clocks can be,” said first author Ty Volkoff, a graduate student who just received his Ph.D. in chemistry from UC Berkeley. “In a quantum computer, it limits how fast you can go from one state to the other, so it puts limits on the clock speed of your computer.”

The new proof could even affect recent estimates of the computational power of the universe, which rely on the energy-time uncertainty principle.

Volkoff and Whaley included the derivation of the uncertainty principle in a larger paper devoted to a detailed analysis of distinguishable quantum states that appeared online Dec. 18 in the journal Physical Review A.

The problem of precision measurement

Heisenberg’s uncertainty principle, proposed in 1927, states that it’s impossible to measure precisely both the position and speed – or more properly, momentum – of an object. That is, the uncertainty in measurement of the position times the uncertainty in measurement of momentum can never fall below a value on the order of Planck’s constant. Planck’s constant is an extremely small number (6.62606957 × 10⁻³⁴ kilogram-square meters per second, or joule-seconds) that sets the scale of graininess in the quantum world.

To physicists, an equally useful principle relates the uncertainties of measuring both time and energy: The spread in the energy of a quantum state times the lifetime of the state cannot be less than a value on the order of Planck’s constant.

“When students first learn about time-energy uncertainty, they learn about the lifetime of atomic states or emission line widths in spectroscopy, which are very physical but empirical notions,” Volkoff said.

This observed relationship was first addressed mathematically in a 1945 paper by two Russian physicists who dealt only with transitions between two obviously distinct energy states. The new analysis by Volkoff and Whaley applies to all types of experiments, including those in which the beginning and end states may not be entirely distinct. The analysis allows scientists to calculate how long it will take for such states to be distinguishable from one another at any level of certainty.
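To get a rough sense of the numbers, the textbook form of the energy-time bound (commonly attributed to Mandelstam and Tamm, presumably the 1945 authors) says a state with energy spread ΔE needs at least ħ·arccos(F)/ΔE to evolve to an overlap F with its starting state, where F = 0 means fully distinct. The Python sketch below evaluates that standard formula only. It is not the derivation in the Volkoff and Whaley paper, and the function name and the 1 electronvolt example are illustrative assumptions.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, joule-seconds
EV = 1.602176634e-19    # one electronvolt, in joules

def min_evolution_time(delta_e, overlap=0.0):
    """Textbook lower bound on the time for a state with energy spread
    delta_e (joules, standard deviation) to reach the given overlap
    magnitude with its initial state. overlap=0.0 means fully distinct.
    Hypothetical helper, for illustration only."""
    if delta_e <= 0:
        raise ValueError("delta_e must be positive")
    if not 0.0 <= overlap <= 1.0:
        raise ValueError("overlap must lie between 0 and 1")
    return HBAR * math.acos(overlap) / delta_e

# Assumed example: an energy spread of 1 eV, typical of optical transitions.
t_distinct = min_evolution_time(1.0 * EV)              # fully distinct end state
t_partial = min_evolution_time(1.0 * EV, overlap=0.5)  # partly overlapping end state

print(f"fully distinct: {t_distinct:.3e} s")
print(f"overlap 0.5:    {t_partial:.3e} s")
```

For a 1 eV spread the fully distinct case comes out at about one femtosecond, and the partly overlapping case takes two-thirds as long.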

“In many experiments that examine the time evolution of a quantum state, the experimenters are dealing with endpoints where the states are not completely distinguishable,” Volkoff said. “But you couldn’t determine the minimum time that process would take from our current understanding of the energy-time uncertainty.”

Most experiments dealing with light, as in the fields of spectroscopy and quantum optics, involve states that are not entirely distinct, he said. These states evolve on time scales of the order of femtoseconds – millionths of a billionth of a second.

By contrast, scientists working on quantum computers aim to establish entangled quantum states that evolve and perform a computation with speeds on the order of nanoseconds.

“Our analysis reveals that a minimal finite length of time must elapse in order to achieve a given success rate for distinguishing an initial quantum state from its time-evolved image using an optimal measurement,” Whaley said.
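One way to make the link between success rate and elapsed time concrete is to combine two standard results: the Helstrom formula for the best possible success rate when telling apart two equally likely pure states, and the textbook energy-time bound on how quickly the overlap between a state and its time-evolved image can shrink. Together they give a minimum time of ħ·arcsin(2p − 1)/ΔE for a target success rate p. The Python sketch below is an illustration under those assumptions, not the paper's own result; the function names and the example energy gap are hypothetical.

```python
import cmath
import math

HBAR = 1.054571817e-34  # reduced Planck constant, joule-seconds

def min_time_for_success(p_success, delta_e, hbar=HBAR):
    """Minimum time before an optimal measurement can distinguish a state
    from its time-evolved image with probability p_success, given the
    energy spread delta_e (standard deviation, joules)."""
    if not 0.5 <= p_success <= 1.0:
        raise ValueError("p_success must lie between 0.5 and 1")
    if delta_e <= 0:
        raise ValueError("delta_e must be positive")
    return hbar * math.asin(2.0 * p_success - 1.0) / delta_e

def helstrom_success(overlap):
    """Best success rate for telling apart two equally likely pure states
    whose overlap magnitude is `overlap`."""
    return 0.5 * (1.0 + math.sqrt(max(0.0, 1.0 - overlap ** 2)))

def qubit_overlap(t, gap, hbar=HBAR):
    """Overlap magnitude between an equal superposition of two energy
    levels (separated by `gap`) and the same state after time t."""
    phase = cmath.exp(-1j * gap * t / hbar)
    return abs(0.5 * (1.0 + phase))

# Assumed example: two levels split by 1e-24 joules (a hypothetical qubit).
gap = 1.0e-24
delta_e = gap / 2.0  # energy spread of the equal superposition

t90 = min_time_for_success(0.90, delta_e)
p_check = helstrom_success(qubit_overlap(t90, gap))

print(f"minimum time for 90% success: {t90:.3e} s")
print(f"success rate reached by the qubit at that time: {p_check:.3f}")
```

For this assumed gap the minimum time is about 0.2 nanoseconds, and the two-level example reaches the 90 percent target exactly at that time, which shows the bound can be met and not just approached.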

The new analysis could help determine the times required for quantum tunneling, such as the tunneling of electrons through the band-gap of a semiconductor or the tunneling of atoms in biological proteins.

It also could be useful in a new field called “weak measurement,” which involves tracking small changes in a quantum system, such as entangled qubits in a quantum computer, as the system evolves. No one measurement sees a state that is purely distinct from the previous state.