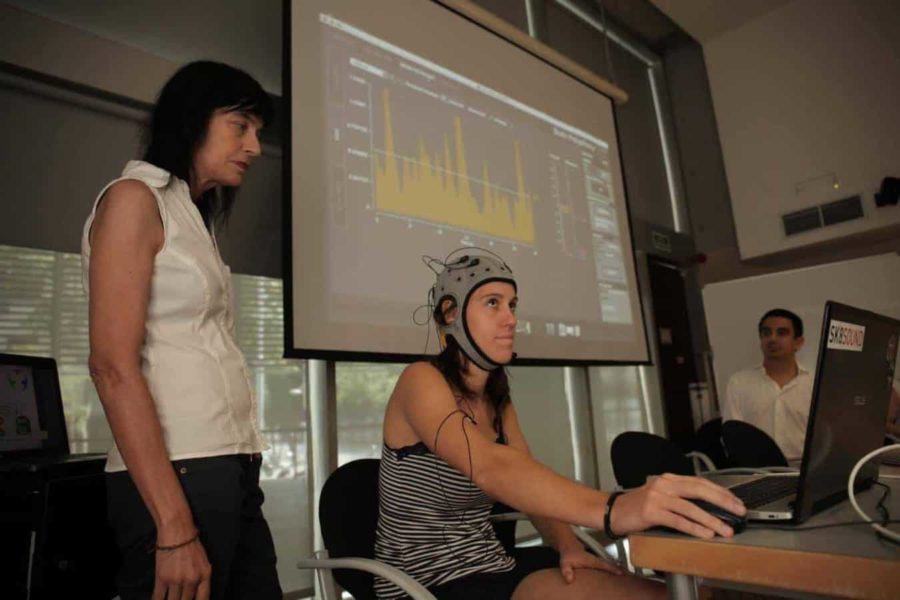

Scientists at the Centre for Genomic Regulation (CRG), the research company Starlab and the group BR::AC (Barcelona Research Art & Creation) of the University of Barcelona have developed a device that produces sounds from brain signals. This highly interdisciplinary team is led by Mara Dierssen, head of the Cellular & Systems Neurobiology group at CRG. Its ultimate goal is to develop an alternative communication system for people with cerebral palsy and, in this pilot phase specifically, to allow them to communicate their emotions. The scientists are carrying out the project with volunteers, some healthy and some with physical and/or mental disabilities, in collaboration with the association Pro-Personas con Discapacidades Físicas y Psíquicas (ASDI) from Sant Cugat del Vallès.

“At the neuroscientific level, our challenge with Brain Polyphony is to be able to correctly identify the EEG signals (that is, the brain activity) that correspond to certain emotions. The idea is to translate this activity into sound and then to use this system to allow people with disabilities to communicate with the people around them. This alternative communication system based on sonification could be useful not only for patient rehabilitation but also for additional applications, such as diagnosis,” stated Mara Dierssen. She added, “Of course, the technological and computational aspects are also challenging. We have to ensure that both the device and the software that translates the signals can give us robust and reproducible signals, so that we can provide this communication system to any patient.”

Other signal transduction systems based on brain-computer interfaces are currently being tested for people with disabilities. However, most of these systems require a certain level of motor control, such as eye movement. This is a major constraint for people with cerebral palsy, who often suffer from spasticity or cannot control any aspect of their motor system, which makes these systems impossible for them to use. A further limitation is that most of these devices do not analyse the signals in real time but instead require the recorded information to be post-processed. The approach proposed by the Brain Polyphony researchers allows real-time analysis from the moment the user puts on the interface device.

The sound of our brain

Unlike other sonification systems that use brain signals, Brain Polyphony allows us to directly “hear” brainwaves. “For the first time, we are using the actual sounds of brain waves. We assign octaves (as they are amplified) until we reach the range audible to the human ear, so that what we hear is really what is happening in our brain. The project aims to achieve this sound and to identify a recognizable pattern for each emotion that we can translate into code words. And all of this happens instantaneously, in real time,” explained David Ibáñez, researcher and project manager at Starlab.
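The octave shift Ibáñez describes can be illustrated with a short sketch. It is not the project's actual software: the function name `shift_to_audible` and the 20 Hz floor (a commonly quoted lower limit of human hearing) are assumptions made here for illustration. Raising a tone by one octave doubles its frequency, so a slow brain rhythm can be doubled repeatedly until it reaches the audible range.

```python
def shift_to_audible(freq_hz, floor_hz=20.0):
    """Raise a frequency by whole octaves until it reaches floor_hz.

    Hypothetical helper for illustration only; floor_hz is an assumed
    lower bound of the audible range, not a value from the project.
    Returns the shifted frequency and the number of octaves applied.
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    octaves = 0
    while freq_hz < floor_hz:
        freq_hz *= 2.0  # one octave up doubles the frequency
        octaves += 1
    return freq_hz, octaves


# Example: a 10 Hz rhythm (within the alpha band) raised above 220 Hz
shifted, octaves = shift_to_audible(10.0, floor_hz=220.0)
print(shifted, octaves)
```

Because each step is an exact doubling, the ratios between the original frequencies are preserved, which is consistent with the claim that what is heard reflects the underlying brain activity.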

So far, the device has been tested mainly with healthy people, but the most recent tests with people with disabilities have been pleasantly surprising. The device was also presented at the 2015 Sónar festival in Barcelona, where it enhanced the artistic expression of the event by allowing users to “hear” the music created by their emotions. Along these lines, Efraín Foglia, researcher at BR::AC, University of Barcelona, added: “The mere fact that we are able to hear our brains ‘talk’ is a complex and interesting experience. With Brain Polyphony, we are able to hear the music that is broadcast directly from the brain. This is a new form of communication that will take on a unique dimension if it can also allow us to enable people with cerebral palsy to communicate.”

Bringing science to market, a challenge for research centres

This project not only exemplifies the importance of collaboration between scientific disciplines but also provides a success story in how to bring basic research to society. Brain Polyphony was the result of an internal initiative of the Centre for Genomic Regulation (CRG), which seeks to promote multidisciplinary approaches and the translation of basic research towards patients and society, especially at an early stage. “We encourage our researchers at CRG to propose translational and collaborative projects that involve clinics or companies in the health sector. For that, we created an internal call to grant additional seed funding, to allow them to pursue risky and innovative projects and ideas. The idea is to encourage them to try an initial project that they can then use after a year to make the leap to applying for more ambitious and competitive funding. Brain Polyphony shows how important institutional support, like the CRG commitment, can be to such projects,” concluded Michela Bertero, who heads the Scientific and International Affairs office at CRG.
