Among the most common tools in electrical engineering and computer science are rectangular grids of numbers known as matrices. The numbers in a matrix can represent data, and they can also represent mathematical equations. In many time-sensitive engineering applications, multiplying matrices can give quick but good approximations of much more complicated calculations.

Matrices arose originally as a way to describe systems of linear equations, a type of problem familiar to anyone who took grade-school algebra. “Linear” just means that the variables in the equations aren’t raised to any power other than one and aren’t multiplied together, so the graph of an equation in two variables will always be a straight line.

The equation x – 2y = 0, for instance, has an infinite number of solutions for both x and y, which can be depicted as a straight line that passes through the points (0,0), (2,1), (4,2), and so on. But if you combine it with the equation x – y = 1, then there’s only one solution: x = 2 and y = 1. The point (2,1) is also where the graphs of the two equations intersect.

The matrix that depicts those two equations would be a two-by-two grid of numbers: The top row would be [1 -2], and the bottom row would be [1 -1], to correspond to the coefficients of the variables in the two equations.
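As a minimal sketch of how such a system can be solved from its matrix, the Python snippet below applies Cramer's rule to the two equations above. The function name `solve_2x2` and the variable names are illustrative choices, not anything from the article, and Cramer's rule is just one convenient method for a two-by-two case.

```python
def solve_2x2(a, b):
    """Solve the 2x2 linear system a * [x, y] = b using Cramer's rule."""
    (a11, a12), (a21, a22) = a
    b1, b2 = b
    det = a11 * a22 - a12 * a21
    if det == 0:
        # The two lines are parallel or identical, so there is no single solution.
        raise ValueError("no unique solution")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - b1 * a21) / det
    return x, y


# Coefficients of x - 2y = 0 and x - y = 1, one equation per row.
coefficients = [[1, -2], [1, -1]]
constants = [0, 1]

x, y = solve_2x2(coefficients, constants)
print(x, y)  # prints: 2.0 1.0
```

The result matches the intersection point (2,1) described above. Real applications with many variables would typically rely on a numerical library rather than a hand-written formula.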

In a range of applications from image processing to genetic analysis, computers are often called upon to solve systems of linear equations — usually with many more than two variables. Even more frequently, they’re called upon to multiply matrices.

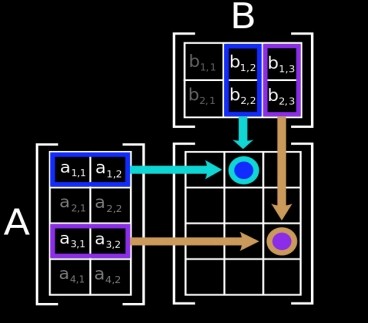

Matrix multiplication can be thought of as evaluating linear expressions for particular values of their variables. Suppose, for instance, that the expressions t + 2p + 3h; 4t + 5p + 6h; and 7t + 8p + 9h describe three different mathematical operations involving temperature, pressure, and humidity measurements. They could be represented as a matrix with three rows: [1 2 3], [4 5 6], and [7 8 9].

Now suppose that, at two different times, you take temperature, pressure, and humidity readings outside your home. Those readings could be represented as a matrix as well, with the first set of readings in one column and the second in the other. Multiplying these matrices together means matching up rows from the first matrix — the one describing the equations — and columns from the second — the one representing the measurements — multiplying the corresponding terms, adding them all up, and entering the results in a new matrix. The numbers in the final matrix might, for instance, predict the trajectory of a low-pressure system.
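The row-by-column procedure can be sketched in a few lines of Python. The coefficient matrix is the one from the text; the readings are made-up numbers chosen only to make the arithmetic easy to follow, and the helper name `matmul` is an illustrative choice.

```python
def matmul(a, b):
    """Multiply matrix a (rows of numbers) by matrix b, row by column."""
    if any(len(row) != len(b) for row in a):
        raise ValueError("each row of a must be as long as b has rows")
    columns = list(zip(*b))  # regroup b so each entry is one column
    return [
        [sum(x * y for x, y in zip(row, col)) for col in columns]
        for row in a
    ]


# Rows hold the coefficients of t, p, and h in the three expressions.
operations = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

# Hypothetical readings: each column is one set of (t, p, h) measurements.
readings = [
    [20, 22],    # temperature
    [101, 100],  # pressure
    [50, 60],    # humidity
]

result = matmul(operations, readings)
for row in result:
    print(row)
# prints:
# [372, 402]
# [885, 948]
# [1398, 1494]
```

Each entry of the result is one expression evaluated on one set of readings. The top-left entry, for example, is 1·20 + 2·101 + 3·50 = 372.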

Of course, reducing the complex dynamics of weather-system models to a system of linear equations is itself a difficult task. But that points to one of the reasons that matrices are so common in computer science: They allow computers to, in effect, do a lot of the computational heavy lifting in advance. Creating a matrix that yields useful computational results may be difficult, but performing matrix multiplication generally isn’t.

One of the areas of computer science in which matrix multiplication is particularly useful is graphics, since a digital image is basically a matrix to begin with: The rows and columns of the matrix correspond to rows and columns of pixels, and the numerical entries correspond to the pixels’ color values. Decoding digital video, for instance, requires matrix multiplication; earlier this year, MIT researchers were able to build one of the first chips to implement the new high-efficiency video-coding standard for ultrahigh-definition TVs, in part because of patterns they discerned in the matrices it employs.

In the same way that matrix multiplication can help process digital video, it can help process digital sound. A digital audio signal is basically a sequence of numbers, representing the variation over time of the air pressure of an acoustic audio signal. Many techniques for filtering or compressing digital audio signals, such as the Fourier transform, rely on matrix multiplication.
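To illustrate the connection, the discrete version of the Fourier transform can be written as a single matrix-vector multiplication. The sketch below builds that matrix directly from the standard definition, using only Python's built-in `cmath` module. The four-sample signal and the function names are assumptions made for the example, and practical audio software would use a fast Fourier transform algorithm rather than this direct product.

```python
import cmath


def dft_matrix(n):
    """Build the n-by-n discrete Fourier transform matrix."""
    return [
        [cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)]
        for k in range(n)
    ]


def apply(matrix, vector):
    """Multiply a matrix by a column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# Hypothetical signal: four samples of a wave that completes one cycle.
signal = [1.0, 0.0, -1.0, 0.0]

spectrum = apply(dft_matrix(len(signal)), signal)
magnitudes = [round(abs(c), 6) for c in spectrum]
print(magnitudes)  # prints: [0.0, 2.0, 0.0, 2.0]
```

The nonzero entries at positions 1 and 3 correspond to the single frequency present in the signal (position 3 is the mirror image that appears for any real-valued input).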

Another reason that matrices are so useful in computer science is that graphs are. In this context, a graph is a mathematical construct consisting of nodes, usually depicted as circles, and edges, usually depicted as lines between them. Network diagrams and family trees are familiar examples of graphs, but in computer science they’re used to represent everything from operations performed during the execution of a computer program to the relationships characteristic of logistics problems.

Every graph can be represented as a matrix, however, where each column and each row represents a node, and the value at their intersection represents the strength of the connection between them (which might frequently be zero). Often, the most efficient way to analyze graphs is to convert them to matrices first, and the solutions to problems involving graphs are frequently solutions to systems of linear equations.
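A small Python sketch shows the conversion, along with one example of what the matrix form makes possible. The four-node graph and the function names are invented for illustration. The example relies on a standard fact about such matrices: multiplying the matrix by itself counts the two-step routes between every pair of nodes.

```python
def adjacency_matrix(node_count, edges):
    """Return the matrix of an undirected graph: 1 where two nodes share an edge."""
    matrix = [[0] * node_count for _ in range(node_count)]
    for i, j in edges:
        matrix[i][j] = 1
        matrix[j][i] = 1  # undirected: the connection goes both ways
    return matrix


def matmul(a, b):
    """Multiply two matrices, row by column."""
    columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]


# Hypothetical graph with nodes 0..3 and four edges.
edges = [(0, 1), (1, 2), (2, 3), (0, 2)]
graph = adjacency_matrix(4, edges)

# Entry [i][j] of the squared matrix is the number of two-step routes from i to j.
two_step = matmul(graph, graph)

print(graph[0])        # prints: [0, 1, 1, 0]
print(two_step[0][3])  # prints: 1  (the route 0 -> 2 -> 3)
print(two_step[2][2])  # prints: 3  (out and back along each of node 2's three edges)
```

A weighted graph would store connection strengths in place of the 1s, and absent connections would remain zero.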

A good, clear summary of practical matrix applications indeed. We can also infer the evolutionary relationships between species and build phylogenetic trees. From morphological characteristics or DNA and other molecular data, we can build a “relationship tree”: an accurate representation of how these organisms are related and of when they diverged from their most recent common ancestor (MRCA). This is done simply by listing the data in rows and columns, one after another, to create matrices.

In other words, comparing gene domains (which differ in base pairs within similar ancestral DNA) or amino acid differences among distinct but related proteins, and relating those comparisons across organisms, their phylogenetic trees, and the trees’ node points, all involves linear matrix calculations carried out by computer programs.

Likewise, in medical and engineering research and in econometric time-series analysis, multivariate analysis is essentially a set of linear matrix calculations on different groups of variables, carried out by computer programs.

In short, matrix applications turn up in nearly every science.

http://bcrc.bio.umass.edu/intro/content/phylogeneticsmorphology-protocol