Biologists at LMU have demonstrated that people can acquire the capacity for echolocation, although it does take time and work.

As blind people can testify, we humans can hear more than one might think. Many blind people learn to navigate by using the echoes of sounds they make themselves as guides. This lets them sense the locations of walls and corners, for instance. By tapping the ground with a cane or making clicking sounds with the tongue, and analyzing the echoes reflected from nearby surfaces, a blind person can map the relative positions of objects in the vicinity. LMU biologists led by Professor Lutz Wiegrebe of the Department of Neurobiology (Faculty of Biology) have now shown that sighted people can also learn to echolocate objects in space, as they report in the journal "Proceedings of the Royal Society B".

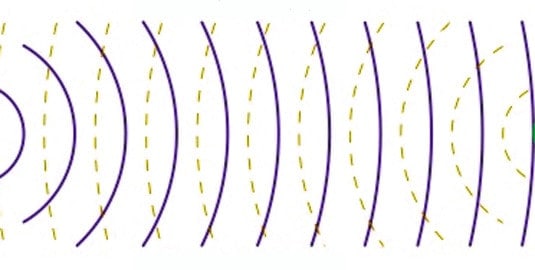

Wiegrebe and his team have developed a method for training people in the art of echolocation. Using a headset consisting of a microphone and a pair of earphones, experimental subjects generate patterns of echoes that simulate acoustic reflections in a virtual space. The participants emit vocal clicks, which are picked up by the microphone and passed to a processor that calculates the echoes of a virtual space within milliseconds. The resulting echoes are then played back through the earphones. The key is that the transformation applied to the input depends on the subject's position in the virtual space. This allows the subject to learn to associate the artificial "echoes" with the distribution of sound-reflecting surfaces in the simulated space.
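The physics behind such a simulation can be illustrated with a minimal sketch. It is not the LMU team's actual processing, which the article does not describe in detail. It assumes a one-dimensional virtual room, a speed of sound of 343 m/s, and a crude, hypothetical attenuation rule of 1/(1 + 2d). Each reflecting surface at distance d returns a copy of the click delayed by the round-trip time 2d/c, so moving the listener changes every delay and gain. The names `echo_delay_s`, `distances_from` and `add_virtual_echoes` are illustrative only.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed value)


def echo_delay_s(distance_m: float, c: float = SPEED_OF_SOUND) -> float:
    """Round-trip time for a sound to reach a surface and come back."""
    return 2.0 * distance_m / c


def distances_from(listener_x: float, wall_positions: list[float]) -> list[float]:
    """Distances from the listener to each wall in a hypothetical 1-D virtual room."""
    return [abs(w - listener_x) for w in wall_positions]


def add_virtual_echoes(
    click: list[float],
    reflector_distances_m: list[float],
    sample_rate: int = 44100,
    c: float = SPEED_OF_SOUND,
) -> list[float]:
    """Mix the direct click with delayed, attenuated copies (one per reflector).

    The gain 1 / (1 + 2d) is a crude, hypothetical stand-in for spreading loss
    and surface absorption, chosen only so that echoes never exceed the direct sound.
    """
    delays = [round(echo_delay_s(d, c) * sample_rate) for d in reflector_distances_m]
    out = [0.0] * (len(click) + max(delays, default=0))
    for i, s in enumerate(click):
        out[i] += s  # direct sound reaches the ear immediately
    for d, n in zip(reflector_distances_m, delays):
        gain = 1.0 / (1.0 + 2.0 * d)
        for i, s in enumerate(click):
            out[n + i] += gain * s  # echo arrives n samples later
    return out


if __name__ == "__main__":
    # Listener at x = 2 m between walls at 0 m and 5 m: echoes from 2 m and 3 m.
    dists = distances_from(2.0, [0.0, 5.0])
    signal = add_virtual_echoes([1.0], dists)
    print(dists, [i for i, x in enumerate(signal) if x != 0.0])
```

Because the delays depend on `listener_x`, stepping toward one wall shortens its echo delay and lengthens the other's. This position-dependent transformation is what the article says a trainee learns to interpret.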

A dormant skill

"After several weeks of training, the participants in the experiment were able to locate the sources of echoes pretty well. This shows that anyone can learn to analyze the echoes of acoustic signals to obtain information about the space around them. Sighted people have this ability too; they simply don't need to use it in everyday situations," says Lutz Wiegrebe. "Instead, the auditory system actively suppresses the perception of echoes, allowing us to focus on the primary acoustic signal, independently of how the space alters the signal on its way to the ears." This makes it easier to distinguish between different sound sources, allowing us to concentrate on what someone is saying to us, for example. The new study shows, however, that it is possible to functionally invert this suppression of echoes and learn to use the information they contain for echolocation instead.

In the absence of visual information, we and most other mammals find navigation difficult. So it is not surprising that evolution has endowed many mammalian species with the ability to "read" reflected sound waves. Bats and toothed whales, which orient themselves in space primarily by means of acoustic signals, are the best-known examples.

Wiegrebe and his colleagues are now exploring how the coordination of self-motion and echolocation facilitates sonar-guided orientation and navigation in humans. (Royal Society Publishing 2013) LMU/nh
