In 1995, French fashion magazine editor Jean-Dominique Bauby suffered a seizure while driving a car, which left him with a condition known as locked-in syndrome, a neurological disease in which the patient is completely paralyzed and can only move muscles that control the eyes.

Bauby, who had signed a book contract shortly before his accident, wrote the memoir “The Diving Bell and the Butterfly” using a dictation system in which his speech therapist recited the alphabet and he would blink when she said the correct letter. They wrote the 130-page book one blink at a time.

Technology has come a long way since Bauby’s accident. Many individuals with severe motor impairments caused by locked-in syndrome, cerebral palsy, amyotrophic lateral sclerosis, or other conditions can communicate using computer interfaces where they select letters or words in an onscreen grid by activating a single switch, often by pressing a button, releasing a puff of air, or blinking.

But these row-column scanning systems are rigid. Like the technique used by Bauby’s speech therapist, they highlight each option one at a time, which makes them frustratingly slow for some users. They are also unsuitable for tasks where options can’t be arranged in a grid, like drawing, browsing the web, or gaming.
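To get a feel for why scanning can be slow, here is a minimal sketch of a row-column scanner's cost. It assumes a hypothetical five-row letter grid and a scanner that highlights rows from top to bottom, then the cells of the chosen row from left to right. The grid layout and the `scan_steps` helper are illustrative assumptions, not taken from any particular product.

```python
def scan_steps(grid, target):
    """Count the highlight steps needed before `target` can be selected.

    Assumes the scanner highlights rows top to bottom and, once the user
    clicks on a row, highlights that row's cells left to right.
    """
    for row_index, row in enumerate(grid):
        if target in row:
            # Steps to reach the row, plus steps to reach the cell within it.
            return (row_index + 1) + (row.index(target) + 1)
    raise ValueError(f"{target!r} is not in the grid")


# Hypothetical layout: each string is one row of single-character options.
GRID = ["ABCDEF", "GHIJKL", "MNOPQR", "STUVWX", "YZ_.,?"]

print(scan_steps(GRID, "A"))  # 2
print(scan_steps(GRID, "X"))  # 10
```

In this toy layout, a letter near the bottom right costs several times as many highlight steps as one in the top left, and the user has to wait through every one of them.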

A more flexible system being developed by researchers at MIT places individual selection indicators next to each option on a computer screen. The indicators can be placed anywhere — next to anything someone might click with a mouse — so a user does not need to cycle through a grid of choices to make selections. The system, called Nomon, incorporates probabilistic reasoning to learn how users make selections, and then adjusts the interface to improve their speed and accuracy.

Participants in a user study were able to type faster using Nomon than with a row-column scanning system. The users also performed better on a picture selection task, demonstrating how Nomon could be used for more than typing.

“It is so cool and exciting to be able to develop software that has the potential to really help people. Being able to find those signals and turn them into communication as we are used to it is a really interesting problem,” says senior author Tamara Broderick, an associate professor in the MIT Department of Electrical Engineering and Computer Science (EECS) and a member of the Laboratory for Information and Decision Systems and the Institute for Data, Systems, and Society.

Joining Broderick on the paper are lead author Nicholas Bonaker, an EECS graduate student; Emli-Mari Nel, head of innovation and machine learning at Averly and a visiting lecturer at the University of the Witwatersrand in South Africa; and Keith Vertanen, an associate professor at Michigan Tech. The research is being presented at the ACM Conference on Human Factors in Computing Systems.

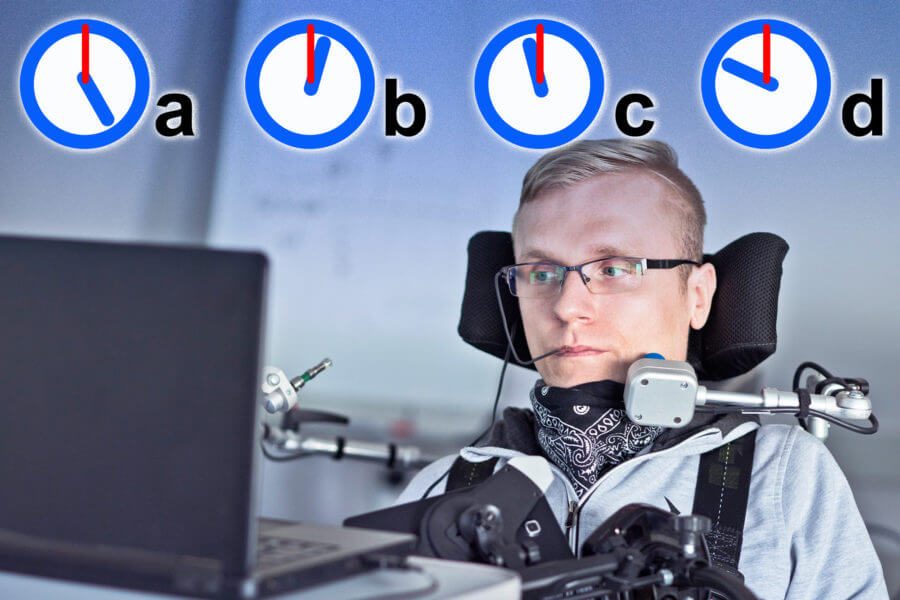

On the clock

In the Nomon interface, a small analog clock is placed next to every option the user can select. (A gnomon is the part of a sundial that casts a shadow.) The user looks at one option and then clicks their switch when that clock’s hand passes a red “noon” line. After each click, the system changes the phases of the clocks to separate the most probable next targets. The user clicks repeatedly until their target is selected.
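One way to picture the clock mechanism is as a probability update after each click. The sketch below is an illustration under simplifying assumptions, not Nomon's actual model: every clock shares one rotation period, each option's hand passes noon at its own phase, and click timing error is treated as Gaussian. The names `noon_offset` and `update` and the `period` and `sigma` values are hypothetical.

```python
import math


def noon_offset(click_time, phase, period):
    """Signed time between a click and the nearest noon crossing of one clock.

    The clock's hand is assumed to pass noon at phase, phase + period, ...
    """
    offset = (click_time - phase) % period
    return offset - period if offset > period / 2 else offset


def update(prior, phases, click_time, period=2.0, sigma=0.1):
    """Reweight each option by how close the click was to its clock's noon."""
    weights = {}
    for option, probability in prior.items():
        distance = noon_offset(click_time, phases[option], period)
        # Assumed Gaussian likelihood of the observed timing error.
        weights[option] = probability * math.exp(-0.5 * (distance / sigma) ** 2)
    total = sum(weights.values())
    return {option: weight / total for option, weight in weights.items()}


# Three options, equally likely at first, with clocks half a second apart.
prior = {"A": 1 / 3, "B": 1 / 3, "C": 1 / 3}
phases = {"A": 0.0, "B": 0.5, "C": 1.0}

# A click at 0.52 s lands just after B's hand passes noon.
posterior = update(prior, phases, click_time=0.52)
print(max(posterior, key=posterior.get))  # B
```

With clocks this far apart, a single click leaves nearly all of the probability on B. When two clocks are close in phase, one click cannot separate them, which is why shifting the phases of the most probable targets apart before the next click helps.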

When used as a keyboard, Nomon’s machine-learning algorithms try to guess the next word based on previous words and each new letter as the user makes selections.

Broderick developed a simplified version of Nomon several years ago but decided to revisit it to make the system easier for motor-impaired individuals to use. She enlisted the help of then-undergraduate Bonaker to redesign the interface.

They first consulted nonprofit organizations that work with motor-impaired individuals, as well as a motor-impaired switch user, to gather feedback on the Nomon design.

Then they designed a user study that would better represent the abilities of motor-impaired individuals. They wanted to make sure to thoroughly vet the system before using much of the valuable time of motor-impaired users, so they first tested on non-switch users, Broderick explains.

Switching up the switch

To gather more representative data, Bonaker devised a webcam-based switch that was harder to use than simply clicking a key. The non-switch users had to lean their bodies to one side of the screen and then back to the other side to register a click.

“And they have to do this at precisely the right time, so it really slows them down. We did some empirical studies which showed that they were much closer to the response times of motor-impaired individuals,” Broderick says.

They ran a 10-session user study with 13 non-switch participants and one single-switch user with an advanced form of spinal muscular dystrophy. In the first nine sessions, participants used Nomon and a row-column scanning interface for 20 minutes each to perform text entry, and in the 10th session they used the two systems for a picture selection task.

Non-switch users typed 15 percent faster using Nomon, while the motor-impaired user typed even faster than the non-switch users. When typing unfamiliar words, the users were 20 percent faster overall and made half as many errors. In their final session, they were able to complete the picture selection task 36 percent faster using Nomon.

“Nomon is much more forgiving than row-column scanning. With row-column scanning, even if you are just slightly off, now you’ve chosen B instead of A and that’s an error,” Broderick says.

Adapting to noisy clicks

With its probabilistic reasoning, Nomon incorporates everything it knows about where a user is likely to click to make the process faster, easier, and less error-prone. For instance, if the user selects “Q,” Nomon will make it as easy as possible for the user to select “U” next.
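The "Q" then "U" example can be sketched with a letter-pair (bigram) model. This is a toy stand-in for whatever language model Nomon uses: the six-word list, the add-one smoothing, and the function names `train_bigrams` and `next_letter_prior` are all assumptions made for illustration.

```python
import string
from collections import Counter, defaultdict


def train_bigrams(words):
    """Count how often each letter follows each other letter."""
    counts = defaultdict(Counter)
    for word in words:
        for first, second in zip(word, word[1:]):
            counts[first][second] += 1
    return counts


def next_letter_prior(counts, previous, alphabet=string.ascii_lowercase,
                      smoothing=1.0):
    """Probability of each next letter, smoothed so no letter gets zero."""
    following = counts[previous]
    total = sum(following.values()) + smoothing * len(alphabet)
    return {letter: (following[letter] + smoothing) / total
            for letter in alphabet}


# Tiny hypothetical corpus, chosen only to make the example readable.
WORDS = ["quick", "quiet", "queen", "question", "equal", "square"]

bigram_counts = train_bigrams(WORDS)
after_q = next_letter_prior(bigram_counts, "q")
print(max(after_q, key=after_q.get))  # u
```

A prior like this tells the interface which options deserve the most attention next, while the smoothing keeps unlikely letters selectable so that a user typing an unusual word is never locked out.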

Nomon also learns how a user clicks. So, if the user always clicks a little after the clock’s hand strikes noon, the system adapts to that in real time. It also adapts to noisiness. If a user’s click is often off the mark, the system requires extra clicks to ensure accuracy.
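A simple way to illustrate this kind of adaptation is to keep running statistics of how far each click lands from noon. The sketch below uses a running mean and standard deviation (Welford's method), which is a deliberately simplified substitute and not necessarily how Nomon models click timing. The class name `ClickTimingModel` and the sample offsets are hypothetical.

```python
import math


class ClickTimingModel:
    """Running estimate of a user's typical click delay and its spread."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0   # average delay after noon, in seconds
        self._m2 = 0.0    # running sum of squared deviations from the mean

    def observe(self, offset):
        """Record one click offset (click time minus noon time)."""
        self.count += 1
        delta = offset - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (offset - self.mean)

    @property
    def std(self):
        """Sample standard deviation; 0.0 until two clicks have been seen."""
        if self.count < 2:
            return 0.0
        return math.sqrt(self._m2 / (self.count - 1))


# A user who tends to click about a tenth of a second late.
model = ClickTimingModel()
for offset in [0.10, 0.12, 0.08, 0.11, 0.09]:
    model.observe(offset)

print(round(model.mean, 3), round(model.std, 3))
```

The mean captures a consistent lag that can be subtracted from future clicks, and the standard deviation captures noisiness: the wider the spread, the less each click says about the intended target, so more clicks are needed before a selection is confirmed.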

This probabilistic reasoning makes Nomon powerful but also requires a higher click-load than row-column scanning systems. Clicking multiple times can be a trying task for severely motor-impaired users.

Broderick hopes to reduce the click-load by incorporating gaze tracking into Nomon, which would give the system more robust information about what a user might choose next based on which part of the screen they are looking at. The researchers also want to find a better way to automatically adjust the clock speeds to help users be more accurate and efficient.

They are working on a new series of studies in which they plan to partner with more motor-impaired users.

“So far, the feedback from motor-impaired users has been invaluable to us; we’re very grateful to the motor-impaired user who commented on our initial interface and the separate motor-impaired user who participated in our study. We’re currently extending our study to work with a bigger and more diverse group of our target population. With their help, we’re already making further improvements to our interface and working to better understand the performance of Nomon,” she says.

“Nonspeaking individuals with motor disabilities are currently not provided with efficient communication solutions for interacting with either speaking partners or computer systems. This ‘communication gap’ is a known unresolved problem in human-computer interaction, and so far there are no good solutions. This paper demonstrates that a highly creative approach underpinned by a statistical model can provide tangible performance gains to the users who need it the most: nonspeaking individuals reliant on a single switch to communicate,” says Per Ola Kristensson, professor of interactive systems engineering at Cambridge University, who was not involved with this research. “The paper also demonstrates the value of complementing insights from computational experiments with the involvement of end-users and other stakeholders in the design process. I find this a highly creative and important paper in an area where it is notoriously difficult to make significant progress.”

This research was supported, in part, by the Seth Teller Memorial Fund to Advanced Technology for People with Disabilities, a Peter J. Eloranta Summer Undergraduate Research Fellowship, the MIT Intelligence Quest, and the National Science Foundation.
