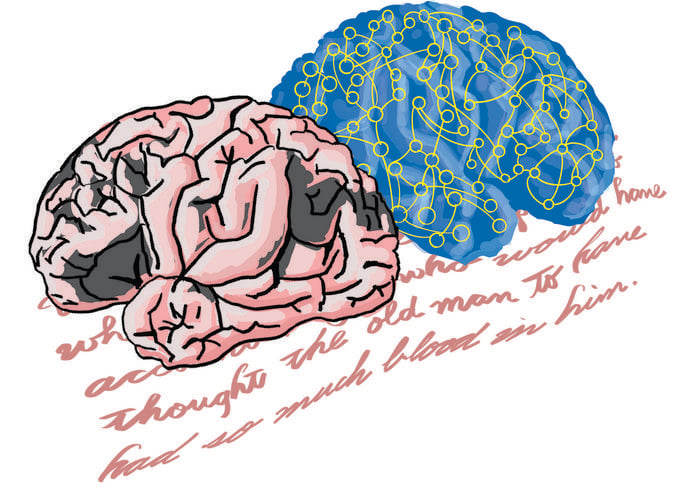

New research from the University of Tokyo reveals striking similarities between how large language models (LLMs) process information and brain activity patterns in people with certain types of aphasia. The study, published May 14 in Advanced Science, could lead to both improved AI systems and better diagnostic tools for language disorders.

Researchers used sophisticated “energy landscape analysis” to compare internal processing patterns in AI systems like ALBERT, GPT-2, and Llama with brain scans from people with various types of aphasia—language disorders typically caused by stroke or brain injury.

When Fluent Speech Lacks Accuracy

Many users of AI chatbots have noticed how these systems can produce confident, articulate responses that sound authoritative yet contain factual errors or fabricated information—a phenomenon researchers informally call “hallucinations.”

What caught the researchers’ attention was how this behavior parallels specific human language disorders, especially receptive aphasia.

“You can’t fail to notice how some AI systems can appear articulate while still producing often significant errors,” said Professor Takamitsu Watanabe from the International Research Center for Neurointelligence at the University of Tokyo. “But what struck my team and I was a similarity between this behavior and that of people with Wernicke’s aphasia, where such people speak fluently but don’t always make much sense.”

This observation led Watanabe and colleagues to investigate whether the internal mechanisms might share similarities at a deeper level.

Mapping Internal Dynamics

The researchers employed energy landscape analysis—a technique originally developed by physicists but adapted for neuroscience—to visualize how information flows through both AI systems and human brains.

Their analysis revealed that large language models exhibit patterns remarkably similar to those seen in people with receptive aphasia, particularly Wernicke’s aphasia. Both show what researchers call “polarized distributions” in how often network activity transitions between states and how long it dwells in each one.

To explain this complex concept, Watanabe offered an analogy: “You can imagine the energy landscape as a surface with a ball on it. When there’s a curve, the ball may roll down and come to rest, but when the curves are shallow, the ball may roll around chaotically,” he said. “In aphasia, the ball represents the person’s brain state. In LLMs, it represents the continuing signal pattern in the model based on its instructions and internal dataset.”
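For readers who want to see the ball-and-surface picture in concrete terms, here is a minimal Python sketch. It assumes a pairwise energy function of the kind commonly used in energy landscape analysis, where each region (or network unit) is reduced to an on/off value of +1 or -1. The article does not describe the study's actual model or parameters, so the function names and the values of `h` and `J` below are hypothetical and chosen only for illustration.

```python
import itertools
import numpy as np

def energy(state, h, J):
    """Energy of one binary state (+1/-1 per unit) under an assumed pairwise model."""
    s = np.asarray(state, dtype=float)
    # E(s) = -sum_i h_i s_i - 1/2 sum_ij J_ij s_i s_j
    return float(-h @ s - 0.5 * s @ J @ s)

def local_minima(h, J):
    """Return every state whose energy is strictly lower than all single-flip neighbours."""
    n = len(h)
    minima = []
    for state in itertools.product([-1, 1], repeat=n):
        e = energy(state, h, J)
        is_minimum = True
        for i in range(n):
            neighbour = list(state)
            neighbour[i] = -neighbour[i]  # flip one unit
            if energy(neighbour, h, J) <= e:
                is_minimum = False
                break
        if is_minimum:
            minima.append(state)
    return minima

# Hypothetical parameters: three units that all tend to agree with each other.
h = np.zeros(3)
J = np.array([[0, 1, 1],
              [1, 0, 1],
              [1, 1, 0]], dtype=float)

minima = local_minima(h, J)
print(minima)  # the "valleys" where the ball can come to rest
```

In this toy landscape the two valleys are the all-off and all-on states. The deeper a valley is relative to its neighbours, the longer the ball tends to stay there; a flatter surface lets it wander between states more freely.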

Key Findings from the Study

- Both LLMs and people with receptive aphasia showed “bimodal distributions” in transition frequency and dwelling time of network activity

- Four different LLMs (ALBERT, GPT-2, Llama-3.1, and a Japanese variant) all demonstrated patterns similar to receptive aphasia

- The abnormal increase in “Gini coefficients” found in receptive aphasia correlated with poor comprehension capability

- Different types of aphasia could be classified based on internal network dynamics
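The quantities named in these findings can be illustrated with a short Python sketch. Given a sequence of state labels over time, it computes how long the system dwells in each state, how often it switches, and a Gini coefficient, which is 0 when values are perfectly even and grows toward 1 as they become more unequal. The article does not say which quantity the study's Gini coefficient was computed over; applying it to dwell times here is an assumption made purely for illustration, and the example sequence is invented.

```python
import numpy as np

def dwell_times(states):
    """Lengths of consecutive runs of the same state label (needs at least one sample)."""
    runs = []
    count = 1
    for prev, cur in zip(states, states[1:]):
        if cur == prev:
            count += 1
        else:
            runs.append(count)
            count = 1
    runs.append(count)  # close the final run
    return runs

def transition_frequency(states):
    """Fraction of time steps at which the state label changes (needs at least two samples)."""
    changes = sum(a != b for a, b in zip(states, states[1:]))
    return changes / (len(states) - 1)

def gini(values):
    """Gini coefficient of non-negative values with a positive sum."""
    x = np.sort(np.asarray(values, dtype=float))
    n = len(x)
    ranks = np.arange(1, n + 1)
    return float(2 * np.sum(ranks * x) / (n * np.sum(x)) - (n + 1) / n)

# Invented sequence of states visited over ten time steps.
states = ["A", "A", "A", "B", "A", "A", "A", "A", "C", "A"]

runs = dwell_times(states)
freq = transition_frequency(states)
inequality = gini(runs)
print(runs, freq, inequality)
```

A system that lingers in one or two states and only briefly visits the others produces very unequal dwell times, and so a higher Gini coefficient, than one that spends similar amounts of time in every state.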

Implications for AI Development and Medical Diagnosis

The research has implications for both AI development and medical diagnostics. For neuroscience, it suggests new methods for classifying language disorders based on internal brain activity rather than just external symptoms.

For AI developers, the findings could provide valuable insights into why language models sometimes generate inaccurate information despite appearing confident. Understanding these parallels may lead to more reliable AI systems.

How might this research improve our interaction with AI in the future? And could it help us better understand—and potentially treat—human language disorders?

The researchers caution against drawing too many direct comparisons between AI systems and human brain disorders. “We’re not saying chatbots have brain damage,” said Watanabe. “But they may be locked into a kind of rigid internal pattern that limits how flexibly they can draw on stored knowledge, just like in receptive aphasia. Whether future models can overcome this limitation remains to be seen, but understanding these internal parallels may be the first step toward smarter, more trustworthy AI too.”

Study Methods and Limitations

The study analyzed resting-state fMRI data from stroke patients with four different types of aphasia, comparing them with stroke patients without aphasia and healthy controls.

For the AI systems, the team examined internal network activity in several publicly available large language models after they had produced responses to inputs.

The researchers acknowledge limitations in their study, including the relatively small sample size for some aphasia types and the fact that they could only test smaller language models rather than the largest, most advanced systems like GPT-4.

Despite these limitations, the study presents a novel approach to understanding both human language disorders and artificial intelligence systems. As we increasingly rely on AI for information and assistance, understanding the similarities and differences between human and artificial cognition becomes ever more important.

The research was supported by multiple grants from the Japan Society for the Promotion of Science, the University of Tokyo, and several other Japanese research institutions.
