Science explains a lot! Many of us take as a matter of faith that science explains everything.

This faith is not that old. It was only about 150 years ago that scientists adopted the hypothesis that:

Nature obeys fixed laws, exactly, no exceptions, and the laws are the same everywhere and for all time.

Within a few decades, this went from a bold land-grab by the scientists, to a litmus test for whether you really believe in science, to an assumption that everyone made, a kind of synthetic a priori that “must” be true for science to “work”. (Feynman put this particular bogeyman to bed in his typically succinct and quotable style*.)

I call it the Zeroth Law of Science, but once it is stated explicitly, it becomes obvious that it is a statement about the way the world works, testable, as a good scientific hypothesis should be. We can ask, “Is it true?”, and we can design experiments to try to falsify it. (Yes, “falsification” is fundamental to the epistemology of experimental science; you can never prove a hypothesis, but you can try your darndest to prove it wrong, and if you fail repeatedly, the hypothesis starts to look pretty good, and we call it a “theory”.)

Well, the Zeroth Law lasted only a few decades before it was blatantly and shockingly falsified by quantum mechanics. The quantum world does not obey fixed laws; it behaves unpredictably. Place a piece of uranium next to a Geiger counter, and the timing of the clicks (each of which tells us that somewhere inside the sample a uranium atom has decayed) appears not fixed but completely random.
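The standard statistical model for those clicks is a Poisson process: the waiting time until the next decay follows an exponential distribution, no matter how long we have already waited. Here is a minimal Python sketch of that model; the count rate of 2 clicks per second and the function name `simulate_clicks` are hypothetical choices for illustration, not measured values.

```python
import random

def simulate_clicks(rate_per_second, n_clicks, seed=None):
    """Return click times (in seconds) for a memoryless Poisson process.

    Each waiting time is drawn from an exponential distribution with
    mean 1/rate_per_second, the usual model for radioactive decay counts.
    """
    rng = random.Random(seed)
    t = 0.0
    times = []
    for _ in range(n_clicks):
        t += rng.expovariate(rate_per_second)  # waiting time until the next decay
        times.append(t)
    return times

clicks = simulate_clicks(rate_per_second=2.0, n_clicks=10, seed=1)
gaps = [b - a for a, b in zip([0.0] + clicks, clicks)]
print([round(g, 3) for g in gaps])  # irregular gaps averaging about 0.5 s
```

In this model, only the average rate is fixed; the individual gaps have no pattern to predict.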

So the Zeroth Law was amended by the quantum gurus, Planck, Bohr, Schrödinger, Heisenberg, and Dirac:

The laws of physics at the most fundamental level are half completely fixed and determined, and half pure randomness. The fixed part is the same everywhere and for all time. The random part passes every mathematical test for randomness, and is in principle unpredictable, unrelated to anything, anywhere in the universe, at any time.

Einstein protested that the universe couldn’t be this ornery. “God doesn’t play dice.” Einstein wanted to restore the original Zeroth Law from the 19th Century. The common wisdom in science was that Einstein was wrong, and that remains the standard paradigm to this day.

If we dared to challenge the Zeroth Law with empirical tests, how would we do it? The Law as it now stands has two parts, and we might test each of them separately. For the first part, we would work with macroscopic systems where the quantum randomness is predicted to average itself out of the picture. We would arrange to repeat a simple experiment and see if we can fully account for the quantitative differences in results from one experiment to the next. For the second part, we would do the opposite—measure microscopic events at the level of the single quantum, trying to create patterns in experimental results that are predicted to be purely random.

Part I – Are the fixed laws really fixed?

First Part: In biology, exact repeatability is very far from the norm. I worked in a worm laboratory last year, participating in the statistical analysis of thousands of protein abundances. The first question I asked was about repeatability. The experiment was done twice as a ‘biological replicate’: one week apart, in the same lab, by the same person, on the same equipment, averaging over hundreds of worms, all of them genetically identical. But the results were far from identical. The correlation between Week 1 and Week 2 was only R = 0.4; the results were more different than they were the same. People more experienced than I told me this is the way it is with data from a bio lab. It is routine procedure to perform the experiment several times and then average the results, even though the individual runs differ greatly.

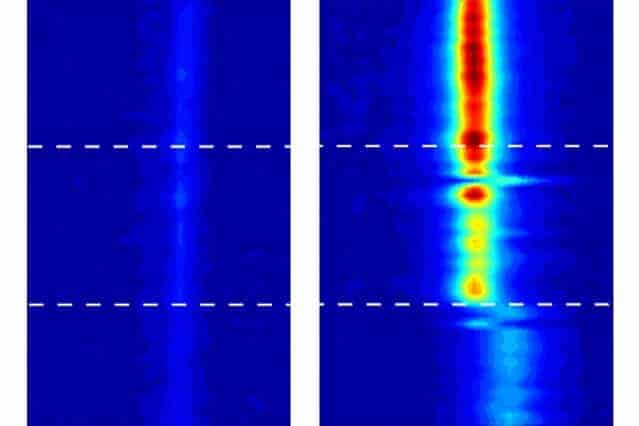

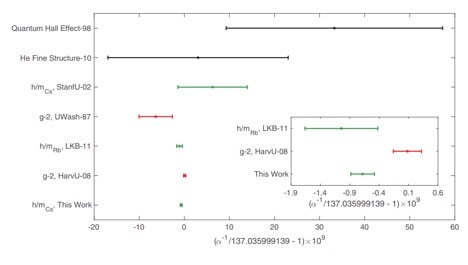
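One way to see why R = 0.4 means the replicates are “more different than the same”: the square of the correlation, R², is the fraction of the variation in one run that a straight-line fit to the other run accounts for. A small Python sketch; the function name `pearson_r` is illustrative, and no data from the worm experiment are used.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

r = 0.4
shared = r ** 2  # fraction of variance in one replicate "explained" by the other
print(f"R = {r}: shared variance = {shared:.0%}")  # R = 0.4: shared variance = 16%
```

So two replicates with R = 0.4 share only about a sixth of their variation; the rest differs from one week to the next.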

This is commonly explained by the fact that no two living things are the same, so it’s not really the same experimental condition, not at the level of atoms and molecules. Biology is a derived science. A better test would be to repeat a physics experiment.

Everyone who does experiments in any science knows that the equipment is touchy, and it commonly takes several tries to “get it right”. It is routine to throw away many experimental trials for each one that we keep. This is explained as human error, and undoubtedly a great deal of it is human error, in too many diverse forms to catalogue. But if there were a real issue with repeatability, it would be camouflaged by the human error all around, and we might never know.

Measurement of fundamental constants is an area where physicists are motivated to repeat experiments in labs around the world, and to identify and quantify all sources of experimental error. I believe it is routine for more discrepancies to appear than can be accounted for by the catalogued uncertainties. Below is an example where things work pretty well. The bars represent 7 independent measurements of a fundamental constant of nature called the fine structure constant, α ≈ 1/137. The error bars are supposed to be such that about ⅔ of the time the right answer lies within one error bar, and 95% of the time within two error bars. The graph is consistent with this prediction.

(The illustration is from Parker et al., 2018.)

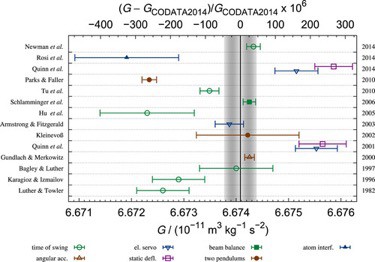

Here, in contrast, are measures of the gravitational constant.

(from Rothleitner & Schlamminger, 2017)

In the second diagram, the discrepancies are clearly not within expected limits. There are 14 measurements, and we would expect 10 of them to include the accepted value within their error bars, but only 2 actually do. We would expect 13 of 14 to include the accepted value within two error bar lengths, but only 8 of 14 do. Clearly, there are sources of error here that are unaccounted for, but in the culture of today’s science, no one would adduce this as evidence against the Zeroth Law.
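The “⅔” and “95%” figures assume each error bar is one standard deviation of a Gaussian error distribution, so the chance of landing within k error bars is erf(k/√2). Multiplying by 14 gives the expected counts of about 10 and 13. If we further assume the 14 measurements are independent and the accepted value is the true one (both simplifications, since the accepted value is itself partly based on such measurements), the chance of seeing as few hits as observed is a binomial tail probability. A sketch, with illustrative function names:

```python
import math

def coverage(k):
    """Probability that a Gaussian error falls within k standard deviations."""
    return math.erf(k / math.sqrt(2))

def prob_at_most(hits, n, p):
    """Binomial probability of at most `hits` successes in n independent trials."""
    return sum(math.comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(hits + 1))

n = 14
for k, observed in ((1, 2), (2, 8)):
    p = coverage(k)
    print(f"within {k} error bar(s): coverage {p:.1%}, expect {n * p:.1f} of {n}, "
          f"saw {observed}, chance of so few = {prob_at_most(observed, n, p):.1e}")
```

Under these assumptions both tail probabilities come out far below one in a thousand, which is the quantitative sense of “clearly not within expected limits”.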

The situation typified by the gravitational constant is much more common in science than that of the fine structure constant above. Yet I suggest that, in the current scientific climate, no amount of evidence of this kind will be considered sufficient to overturn the Zeroth Law. We are too accustomed to “unquantifiable uncertainties” and “unknown unknowns,” and no amount of variation among exact repetitions of an experiment will suffice to convince a skeptic.

Part II – Is Quantum Randomness really random?

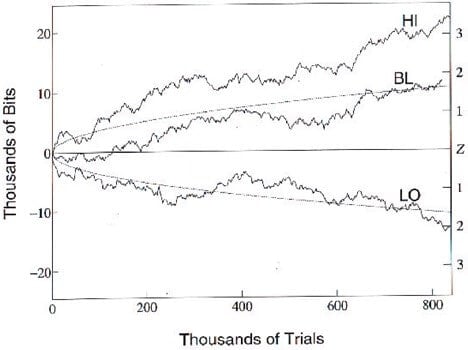

Here, real experiments have been done to answer the question directly, and there is solid evidence that the answer is “no”. From the 1970s through the 2000s, in the Princeton laboratory of Robert Jahn, ordinary people tried to change the output of a quantum random event generator (REG). The effect was very small, but overwhelmingly significant in the aggregate. Here’s a write-up, and here’s a graph of Jahn’s results:

The top curve represents an average of random numbers when the human subject is “thinking high” and the bottom curve when the subject is “thinking low”. The most pointed way to present these data is as the difference between the two. If intention had no effect, this difference should stay within a couple of standard deviations of zero in the long run, but it clearly departs further and further from zero over time, reaching 5 standard deviations. The probability that this could occur by chance is less than one in a million.
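Under the no-effect hypothesis, each trial of a binary REG is a fair coin flip, so after N trials the cumulative count wanders away from N/2 by roughly √N/2. It is the ratio of the deviation to that spread, the z-score, that should stay near zero. A sketch of the conversion from z-score to chance probability, assuming a fair binary generator; the trial counts in the example are hypothetical, not Jahn’s data.

```python
import math

def z_score(hits, trials, p=0.5):
    """Standard deviations between the observed hit count and chance expectation."""
    expected = trials * p
    sd = math.sqrt(trials * p * (1 - p))  # binomial standard deviation, sqrt(N)/2 for p = 0.5
    return (hits - expected) / sd

def two_sided_p(z):
    """Chance probability of a deviation of at least |z| sigma in either direction."""
    return math.erfc(abs(z) / math.sqrt(2))

# Hypothetical counts: 1,250 excess hits in a million fair-bit trials
print(z_score(501_250, 1_000_000))  # 2.5
print(two_sided_p(5.0))             # about 5.7e-07, under one in a million
```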

Jahn was Dean of Engineering at Princeton and a prominent researcher in aerospace engineering until his credibility was attacked for daring to ask questions that are considered out-of-bounds by conventional science. The take-down of Robert Jahn represented a shameful triumph of Scientism over the true spirit and methodology of science.

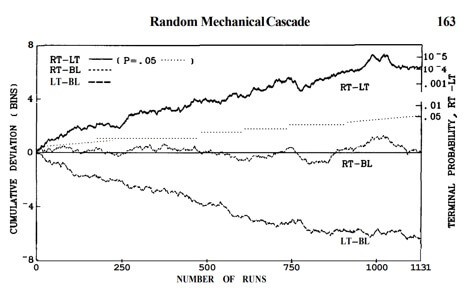

Another of Jahn’s experiments involved a big pinball machine mounted on the wall, which dropped balls from the center and let them bounce over an array of pegs, so that they usually ended up near the center but sometimes far to one side. The human subject would sit in front of the machine and “think right” or “think left”. Here’s a write-up on the subject, and here’s a graph of results.
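A machine like this behaves much like a Galton board. If each peg sends a ball left or right with equal probability (an idealization; Jahn’s machine is not assumed to work exactly this way), the landing bin is a binomial count, which piles balls up near the center with rare excursions to the edges. A minimal simulation; the 10 rows of pegs and the function name `drop_balls` are hypothetical.

```python
import random
from collections import Counter

def drop_balls(n_balls, n_rows, seed=None):
    """Simulate an idealized Galton board.

    Each peg deflects a ball left or right with equal odds; the final bin
    is the number of rightward bounces, from 0 to n_rows.
    """
    rng = random.Random(seed)
    bins = Counter()
    for _ in range(n_balls):
        rights = sum(rng.random() < 0.5 for _ in range(n_rows))
        bins[rights] += 1
    return bins

counts = drop_balls(n_balls=10_000, n_rows=10, seed=42)
for slot in range(11):
    print(f"{slot:2d} {'#' * (counts[slot] // 50)}")  # text histogram, one # per 50 balls
```

A “think right” effect would show up as a small, consistent shift of this histogram toward the higher-numbered bins.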

In this case, the system was macroscopic, but arranged so as to amplify the “butterfly effect”. Very, very tiny differences in the first bounce can have macroscopic effects by the tenth bounce, and so it can be shown that the system amplifies quantum randomness. The probability of the difference between the right and left curves occurring by chance is even smaller than in the REG experiment, in the range of 1 in a billion.
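The amplification argument is exponential: if every bounce multiplies a small difference in position by some factor λ, then after n bounces the difference has grown by λⁿ. A back-of-envelope sketch; the starting scale (1e-10 m), the final scale (1e-2 m), and the factors used are all hypothetical values chosen only to illustrate the arithmetic.

```python
import math

def growth_needed(initial, final, bounces):
    """Per-bounce amplification factor that turns `initial` into `final` in `bounces` steps."""
    return (final / initial) ** (1 / bounces)

def bounces_needed(initial, final, factor):
    """Number of bounces for a difference to grow from `initial` to at least `final`."""
    return math.ceil(math.log(final / initial) / math.log(factor))

# Hypothetical scales: an atom-sized offset growing to a peg-sized one
print(round(growth_needed(1e-10, 1e-2, 10), 2))  # 6.31
print(bounces_needed(1e-10, 1e-2, 2.0))          # 27
```

With these assumed numbers, a factor of about 6 per bounce would be enough to carry an atom-sized difference up to peg-spacing size by the tenth bounce.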

Later, Dean Radin performed a completely different experiment based on the same idea of human intention influencing quantum events. Radin reports experimental results that are positive for people who have a meditation practice, but not significant for people who don’t meditate. Since this write-up, more positive results have been collected.

More amusing than informative is the legend about Wolfgang Pauli, one of the geniuses who laid the foundation for quantum physics, and in particular for our understanding of how the theory of the atom explains the chemical properties of the elements and their bonds. The legend is that whenever Pauli walked into the room, experimental apparatus would stop working for no identifiable reason. This came to be called the Pauli Effect.

Significance?

If the effect of human intention on quantum random events is so small that it has to be measured thousands of times to be sure we are seeing it, does it have any practical significance in our world? I would answer a resounding YES, for three reasons.

First, there is a hypothesis that we have more influence when we care more. Human emotions and intention have a tiny effect on lab experiments, in which our lives and destinies are not at stake. But the positive results in principle leave open the possibility that we are profoundly (if unconsciously) influencing the events in our lives that mean most to us, and perhaps with meditation and focused intent we can consciously influence distant events. People who have thought more about this than I have find evidence for a collective effect, in which many people meditating on the same intent can have dramatic effects.

Second, 90 years after quantum theory was first formulated, the physics community remains deeply divided over what it means. One school holds a place for consciousness in the fundamental workings of quantum physics. This is not currently the dominant interpretation, but it is the one advocated by Erwin Schrödinger himself, and it was attractive to several prominent physicists who followed him, especially de Broglie, Bohm and Wigner. The idea was expanded into three book-length treatments by Berkeley professor Henry Stapp. [Mindful Universe: Quantum Mechanics and the Participating Observer (2011), Mind, Matter and Quantum Mechanics (2013), Quantum Theory and Free Will (2017)] More accessible is my favorite book on the subject, Elemental Mind, by Nick Herbert.

Third, there is the link to quantum biology and the “hard problem” of consciousness: What is the relationship between our conscious experience and the activity of neurons in the brain? Quantum biology has firmly established a special role for quantum mechanics in some biological processes, including photosynthesis; beyond this, more radical proponents see quantum effects as essential to life. Johnjoe McFadden of the University of Surrey has put forth the hypothesis that consciousness is a driving force in evolution [his book]. Stuart Kauffman, a pioneer in the mathematical physics of chaos theory, has collected evidence for quantum criticality in our brains. I’ll take a couple of paragraphs to summarize this idea.

Human-designed machines are engineered to perform reliably. If we run a computer program twice, we don’t want it to turn up different answers. This must be true despite the fact that every transistor relies on quantum effects that are essentially stochastic. The trick used by electrical engineers is to make each transistor just large enough (many electrons involved in every switching event) that quantum uncertainty almost never plays a role in the outcome. To make this quantitative: in today’s microprocessors, each transistor is just a few hundred atoms across, so it contains perhaps a million atoms or fewer in all. The computer on which I do evolutionary simulations runs at 3.5 GHz, meaning that there are several billion switching events each second. If one of my simulations runs for a few minutes, there are more than a trillion events, and any one of them could change the outcome. So the fact that these simulations run reliably means that the probability of a single switching event being influenced by quantum randomness is much less than 1 in a trillion.
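The arithmetic can be checked in a few lines. This sketch takes “a few minutes” to mean 5 minutes (an assumption) and counts, as the text does, one switching event per clock cycle, which is conservative since a modern chip switches many transistors per cycle. The bound uses the standard zero-failure result: if none of n independent events fails, the 95% upper confidence bound on the per-event failure probability is −ln(0.05)/n ≈ 3/n (the “rule of three”).

```python
import math

clock_hz = 3.5e9                 # from the text: a 3.5 GHz processor
run_seconds = 5 * 60             # assumption: "a few minutes" taken as 5 minutes
events = clock_hz * run_seconds  # one switching event per clock cycle, as in the text

# Zero failures in n independent events gives a 95% upper bound of
# -ln(0.05)/n on the per-event failure probability (about 3/n).
upper_bound = -math.log(0.05) / events

print(f"{events:.2e} events, per-event probability below about {upper_bound:.1e}")
```

A single clean run bounds the probability at roughly 3 in a trillion; every additional reliable run pushes the bound proportionally lower.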

Contrast this with the way our brains work. Neurotransmitters are molecules that flip between two conformations, two very different shapes, depending on their chemical and electrical environments. Kauffman has shown that most such molecules are “designed” (meaning “evolved”) to be unreliable, in the sense that they jump with maximal ease between the two conformations, and they exist in the brain in a “superposition state”. This is quantum jargon for saying that the atoms are in two places at once: their state is a combination of the two conformations in a way that makes no sense to our intuitions, which are attuned to macroscopic reality. The point is that electrical engineers strive to make each tiny component of a computer as reliable as possible, but nature seems to have gone out of her way to make our brains out of components that are as unreliable as possible. Kauffman interprets this to suggest that free will is a phenomenon that exists outside the realm of quantum wave functions (perhaps in a dualistic Cartesian or Platonic world), and that the brain has evolved to amplify the subtle quantum effects where our intent is capable of influence, and thus to allow our consciousness to shape our thoughts and (through neurons) control our muscular movements. This is also the premise of Stapp’s books, mentioned above.

Technology

Machines work, by and large. We count on them as a matter of everyday experience. When we put a key in the ignition, we expect the car to start, and when we run a computer program twice, we don’t expect to get different answers. But this is weak evidence for the Zeroth Law. Machines are engineered for a level of reliability that serves a specific market, and in critical applications, they have redundancies built in to assure fail-safe performance. The existence of so many high-tech devices that generally work is the source of an intuitive faith in the Zeroth Law, but if we ask more carefully about the meaning of their reliability, we can only conclude that the world is generally governed by physical laws that work with good precision and reliability most of the time.

Macroscopic miracles

“Miracles” by definition are exceptions to physical law, the quintessential counter-examples to the Zeroth Law of Science.

Miracles in the Bible and in stories of Sufis and Yogis and mystics of the East are abundant. It is difficult to verify any one of them, but the persistence of so many stories over so many centuries might be taken as evidence of something more than wishful thinking by fallible humans. In his recent book Real Magic, Dean Radin makes a strong case (in my opinion) that some of these reports are credible.

A concerted program to test the Zeroth Law

I would never dispute that science is enormously useful. Science has, far and away, more explanatory power than any system of thought that mankind has ever devised. I can say this and still ask, Does science explain everything? Or does scientific law admit of exceptions? If we determine that there are exceptions, then we are moved to ask the next question, Can scientific methodology be expanded to encompass the exceptions? Or will the whole Scientific Project be subsumed in something larger and more broad-minded, in which experimental measurement and mathematical reasoning are two powerful ways of knowing about the world, but not the only ways?

A scientific program to validate or to falsify (or to reformulate) the Zeroth Law is perfectly feasible. It would require modest resources, in the context of today’s Big Science. It would be humbling and instructive, and would certainly invite a level of discussion that is overdue, and might prove extremely fertile.

The Zeroth Law of Science is fundamental to our world view, not just as scientists but as people. It affects our concept of life and our place in the universe and what (if anything) we might expect after death. It impacts our tolerance for non-scientific views of the world, and it touches on questions about the limits of what we know now, and what we can know in the future. In this time when the world is so terrifyingly poised on the brink of eco-suicide or thermonuclear disaster or political or social chaos, we may feel that we need a miracle to carry us past the crisis to a saner world. In the words of Charles Eisenstein, “A miracle is something that is impossible from one’s current understanding of reality and truth, but that becomes possible from a new understanding.”

________________

* Quote from Richard Feynman: “Philosophers have said that if the same circumstances don’t always produce the same results, predictions are impossible and science will collapse. Here is a circumstance that produces different results: identical photons are coming down in the same direction to the same piece of glass. We cannot predict whether a given photon will arrive at A or B. All we can predict is that out of 100 photons that come down, an average of 4 will be reflected by the front surface. Does this mean that physics, a science of great exactitude, has been reduced to calculating only the probability of an event, and not predicting exactly what will happen? Yes. That’s a retreat, but that’s the way it is: Nature permits us to calculate only probabilities. Yet science has not collapsed.”