Previous definitions of “well-being,” limited to taking a brisk walk and eating a few more vegetables, feel in many ways like a distant past. Shiny watches and sleek rings now measure how we eat, sleep, and breathe, calling on a combination of motion sensors and microprocessors to crunch bytes and bits.

Even with today’s variety of smart jewelry, clothing, and temporary tattoos that feel equal parts complex and manageable, scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Massachusetts General Hospital’s (MGH) Center for Physical Artificial Intelligence (CPAI) wanted to make things a little more personal. They created a toolkit for designing health- and motion-sensing devices using something called “electrical impedance tomography” (EIT), a fancy term for an imaging technique that measures and visualizes a person’s internal conductivity. (EIT is typically used for things like observing lung function or detecting cancer.)

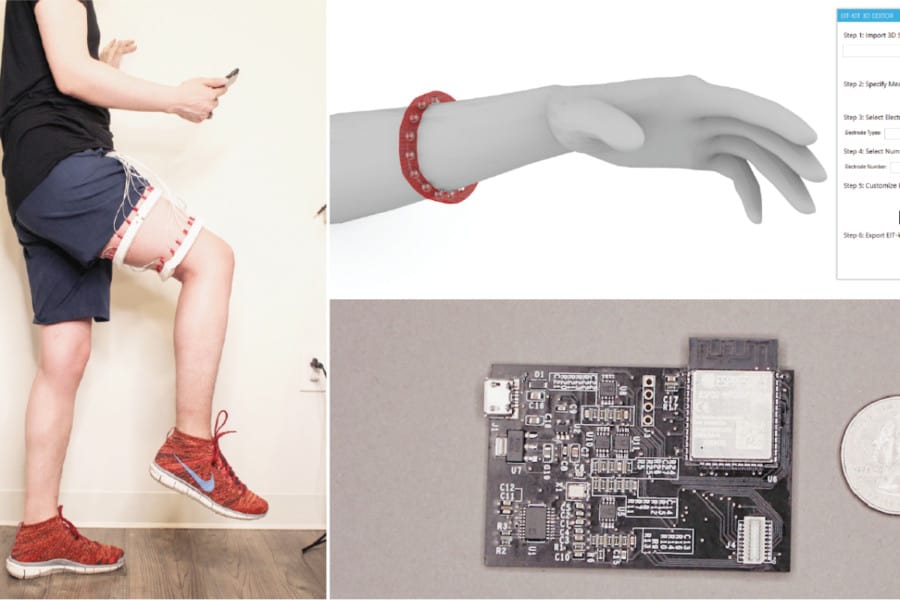

Using “EIT-kit,” the team built a range of devices that support different sensing applications, including a personal muscle monitor for physical rehabilitation, a wearable hand gesture recognizer, and a “bracelet” that can detect distracted driving.

While EIT sensing usually requires expensive hardware setups and complicated image reconstruction algorithms, the use of printed electronics and open-source EIT image libraries has made it an attractive, low-cost, and portable option. But designing EIT devices is still tough: it usually requires design expertise, adequate contact between the device and the wearer’s body, and careful optimization.

The EIT-kit 3D editor puts the user in the driver’s seat for full design direction. Once the sensing electrodes (which measure human activity) are placed on the device in the editor, the design can be exported to a 3D printer. The printed item can then be assembled, placed onto the target measuring area, and connected to EIT-kit’s sensing motherboard. As a final step, an on-board microcontroller library automates the electrical impedance measurement and lets users view the measured data visually, even on a mobile phone.
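To give a rough sense of what automating an electrical impedance measurement can involve, the sketch below enumerates electrode combinations for the "adjacent-drive" scheme, a common four-terminal pattern in the EIT literature. This is an illustration only: the article does not say which measurement scheme EIT-kit uses, and the function name `adjacent_drive_pattern` is hypothetical rather than part of EIT-kit's library.

```python
def adjacent_drive_pattern(num_electrodes):
    """List (source, sink, v_plus, v_minus) electrode indices for one EIT frame.

    Assumption: electrodes sit in a ring and are numbered 0..N-1. Current is
    injected through each neighboring pair in turn, and voltage is read across
    every other neighboring pair that does not touch the injecting electrodes.
    """
    if num_electrodes < 4:
        raise ValueError("a four-terminal measurement needs at least 4 electrodes")

    measurements = []
    for drive in range(num_electrodes):
        source = drive
        sink = (drive + 1) % num_electrodes
        for sense in range(num_electrodes):
            v_plus = sense
            v_minus = (sense + 1) % num_electrodes
            # Skip voltage pairs that share an electrode with the current pair.
            if {v_plus, v_minus} & {source, sink}:
                continue
            measurements.append((source, sink, v_plus, v_minus))
    return measurements


if __name__ == "__main__":
    pattern = adjacent_drive_pattern(16)
    print(len(pattern))   # 208 measurements per frame for 16 electrodes
    print(pattern[0])     # (0, 1, 2, 3)
```

With N electrodes, this scheme yields N × (N − 3) voltage readings per frame, which is one reason doing the switching and readout by hand is impractical and a microcontroller library is useful.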

Existing devices typically sense only motion, which limits users to understanding how their position changes over time, but EIT-kit can sense actual muscle activity. One device prototyped by the team, which looks like two simple bands, sensed muscle strain and tension in the thigh to monitor muscle recovery post-injury, and might even be used to prevent reinjury. The muscle monitor used two electrode arrays to create a 3D image of the thigh, as well as augmented reality to view the muscle activity in real time. In this case, sensing motion alone would be of little use, since doing a rehabilitation exercise correctly requires engaging the correct muscle. In addition, the researchers sensed biological tissue via an EIT device that analyzed the tenderness of raw meat.

“The EIT-kit project fits my long-term vision of creating personal health-sensing devices with rapid function prototyping techniques and novel sensing technologies,” says MIT CSAIL PhD student Junyi Zhu, the lead author on a new paper about EIT-kit. “During our study alongside medical professionals, we discovered that EIT sensing is largely patient- and sensing-location dependent, because of measuring configurations, signal calibration, electrode placements, and other bioelectrical-related factors. These challenges can be resolved with customizable hardware and automation algorithms. Beyond EIT, other health sensing technologies face similar complexities and personalized needs.”

The team is currently collaborating with MGH to develop EIT-kit for creating remote rehabilitation devices to monitor different parts of a patient’s body while healing. Since all EIT-kit devices are mobile and customized to a patient’s body form and particular injury, they can be easily used at home to give doctors a more holistic picture of the healing process.

Zhu wrote the paper alongside MIT undergraduate Jackson Snowden; alumni Joshua Verdejo ’21, MNG ’21 and Emily Chen ’21; CSAIL PhD student Paul Zhang; co-director of CPAI at MGH and Harvard Medical School instructor Hamid Ghaednia; chief of spine surgery at MGH and associate professor at Harvard Medical School Joseph H. Schwab; and MIT Associate Professor Stefanie Mueller.

This material is based upon work supported by the National Science Foundation. The project was completed in collaboration with Schwab and Ghaednia. It will be presented at the ACM Symposium on User Interface Software and Technology (UIST) 2021 next month.