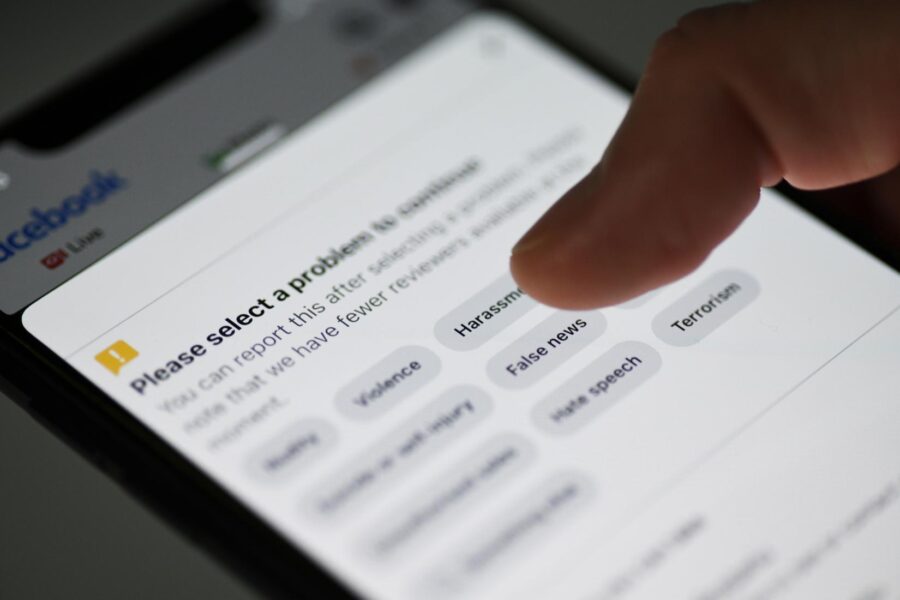

A recent study by researchers from the University of Technology Sydney and the University of Sydney has revealed that Meta’s content moderation policies on Facebook during the COVID-19 pandemic were not as effective as intended in controlling the spread of misinformation. The study, published in the journal Media International Australia (MIA), analyzed the effectiveness of strategies like content labelling and shadowbanning between 2020 and 2021.

Shadowbanning algorithmically reduces the visibility of problematic content in users’ newsfeeds, search results, and recommendations. Despite this, the study found that some far-right and anti-vaccination accounts gained engagement and followers after Meta announced its content policy changes.

“This calls into question just how serious Meta has been about removing harmful content,” said Associate Professor Amelia Johns, the lead author of the study.

The research suggests that users dedicated to spreading misinformation were not deterred by Meta’s policies to suppress rather than remove dangerous content during the pandemic. Instead, they employed tactics to overcome platform interventions and game the algorithm.

“It was clear far-right and anti-vaccination communities were not deterred by Meta’s policies to suppress rather than remove dangerous misinformation during the pandemic, employing tactics that disproved Meta’s internal modelling,” Associate Professor Johns added.

The study highlights that the success of Meta’s policy to suppress rather than remove misinformation is inconsistent and may not adequately protect susceptible communities and users from encountering misinformation.

The findings raise concerns about the effectiveness of Meta’s content moderation strategies and suggest that more robust measures may be necessary to combat the spread of harmful misinformation on social media platforms like Facebook.

The paper, “Labelling, shadow bans and community resistance: did Meta’s strategy to suppress rather than remove COVID misinformation and conspiracy theory on Facebook slow the spread?”, is an open access publication on the Sage Journals website.
