Researchers at the University of Virginia have developed artificial compound eyes inspired by praying mantis vision, potentially revolutionizing how machines perceive and interact with the world. This innovation could address critical limitations in current machine vision systems, including accuracy issues and processing delays.

Biomimetic Design: Learning from Nature’s Engineers

The team’s artificial eyes replicate the unique visual capabilities of praying mantises, which stand out among insects for their exceptional depth perception. Unlike most insects with compound eyes that excel at motion tracking but struggle with depth, mantises have overlapping fields of view between their left and right eyes. This overlap creates binocular vision, allowing them to perceive depth in 3D space.
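The link between overlapping views and depth can be illustrated with textbook stereo triangulation. The sketch below is not the researchers' method. It assumes a simple pinhole model in which depth equals focal length times the distance between the two eyes, divided by the disparity (the shift in an object's position between the left and right views). The function name and all numbers are hypothetical.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Textbook stereo triangulation: depth = focal length * baseline / disparity.

    Assumes an idealized pinhole model; illustrative only, not the
    sensor design described in the study.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point seen by both eyes")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical values: 500-pixel focal length, eyes 1 cm apart.
near = depth_from_disparity(500.0, 0.01, 50.0)  # large shift between the two views
far = depth_from_disparity(500.0, 0.01, 5.0)    # small shift between the two views

print(f"near object: {near:.2f} m, far object: {far:.2f} m")
```

In this model, a larger shift between the two views means a closer object, which is why overlap between the eyes is needed: without a shared region, there is no shift to measure.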

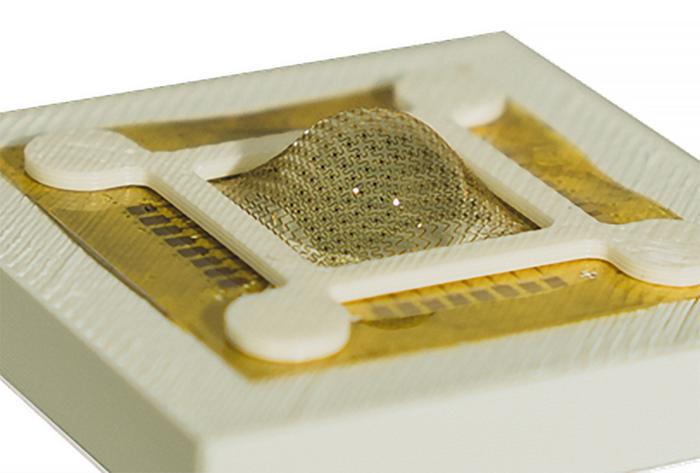

To mimic this natural design, the researchers integrated microlenses and multiple photodiodes using flexible semiconductor materials. This approach allowed them to recreate the convex shapes and faceted positions found in mantis eyes.

Byungjoon Bae, the study’s lead author, explained: “Making the sensor in hemispherical geometry while maintaining its functionality is a state-of-the-art achievement, providing a wide field of view and superior depth perception.”

This biomimetic design offers significant advantages over traditional machine vision systems. The prototype showed the potential to cut power consumption by a factor of more than 400 compared with conventional visual systems. That efficiency could be a game-changer for applications ranging from self-driving vehicles to smart home devices.

Edge Computing: Processing at the Source

A key innovation in this research is the integration of “edge” computing – processing data directly at or near the sensors that capture it. This approach allows the system to process visual information in real-time, dramatically reducing data transfer and computation costs.

The sensor array continuously monitors changes in the scene, identifying which pixels have changed and encoding this information into smaller data sets for processing. This method mirrors how insects perceive the world, differentiating pixels between scenes to understand motion and spatial data.
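The idea of sending only the pixels that changed can be sketched in a few lines. This is a minimal illustration of generic frame differencing, not the team's hardware encoding. The frame layout, the `threshold` value, and the function names are all assumptions made for the example.

```python
from typing import List, Tuple

Frame = List[List[int]]          # grayscale image as rows of brightness values
Event = Tuple[int, int, int]     # (row, column, signed brightness change)


def encode_changes(prev: Frame, curr: Frame, threshold: int = 10) -> List[Event]:
    """Return only the pixels whose brightness changed by at least `threshold`.

    `threshold` is a hypothetical noise cutoff chosen for this example.
    """
    events: List[Event] = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (old, new) in enumerate(zip(prev_row, curr_row)):
            delta = new - old
            if abs(delta) >= threshold:
                events.append((r, c, delta))
    return events


def apply_changes(prev: Frame, events: List[Event]) -> Frame:
    """Rebuild an approximate current frame from the previous frame plus events."""
    frame = [row[:] for row in prev]  # copy so the previous frame is untouched
    for r, c, delta in events:
        frame[r][c] += delta
    return frame


prev_frame = [[10, 10, 10, 10], [10, 10, 10, 10], [10, 10, 10, 10]]
curr_frame = [[10, 10, 10, 10], [10, 200, 10, 10], [10, 10, 12, 10]]

events = encode_changes(prev_frame, curr_frame)
print(f"{len(events)} event(s) instead of {sum(len(row) for row in curr_frame)} pixels: {events}")
```

Here only one of the twelve pixels crosses the threshold, so a single small record is passed on for processing instead of the full frame. The change of two brightness units in the bottom row is treated as noise and dropped, which makes this encoding lossy by design.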

Dr. Kyusang Lee, the project’s supervisor, highlighted the significance of this approach: “The seamless fusion of these advanced materials and algorithms enables real-time, efficient and accurate 3D spatiotemporal perception.”

Why It Matters:

This research could have far-reaching implications for various technologies that rely on visual perception:

1. Improved safety for self-driving vehicles by enhancing depth perception and object recognition.

2. More efficient and accurate drones for various applications, from delivery services to search and rescue operations.

3. Enhanced robotic systems for manufacturing and assembly lines, increasing precision and reducing errors.

4. Advanced surveillance and security systems with better spatial awareness and real-time processing.

5. Smarter home devices with improved environmental understanding and interaction capabilities.

The combination of biomimetic design and edge computing addresses several key challenges in machine vision. By processing data at the source, these artificial eyes can reduce latency, improve energy efficiency, and enhance privacy by minimizing data transfer.

However, questions remain about the scalability and durability of these systems in real-world applications. Future research will likely focus on testing these artificial eyes in various environments and conditions to ensure their reliability and effectiveness.

As machine vision continues to play an increasingly important role in our daily lives, innovations like this bring us closer to a future where machines can perceive and interact with the world more like humans do. The applications of this technology could extend far beyond current use cases, opening new frontiers in fields such as augmented reality, robotics, and environmental monitoring.
