Revolutionizing AI with Computational Random-Access Memory

Researchers at the University of Minnesota Twin Cities have developed a groundbreaking hardware device that could dramatically reduce the energy consumption of artificial intelligence (AI) applications. The new technology, called computational random-access memory (CRAM), has the potential to cut AI energy use by a factor of at least 1,000, addressing a growing concern in the tech industry.

As AI applications become increasingly prevalent in our daily lives, their energy demands have skyrocketed. The International Energy Agency (IEA) projects that electricity consumption by data centers, AI, and cryptocurrency could more than double, from an estimated 460 terawatt-hours (TWh) in 2022 to more than 1,000 TWh by 2026, roughly equivalent to the entire electricity consumption of Japan. This surge in energy use has spurred researchers to seek more efficient computing methods without sacrificing performance or increasing costs.
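To put the quoted projection in perspective, the short calculation below works out the overall growth factor and the compound annual growth rate it implies. It is a back-of-the-envelope sketch that uses only the two figures cited above; the function name `growth_summary` is ours, and the assumption of steady year-over-year growth is a simplification, not something the IEA states.

```python
def growth_summary(start_twh, end_twh, years):
    """Return (overall growth factor, implied compound annual growth rate)."""
    factor = end_twh / start_twh
    # Assumes the same percentage growth every year (a simplification).
    annual_rate = factor ** (1 / years) - 1
    return factor, annual_rate


# Figures quoted in the article: 460 TWh in 2022, 1,000 TWh by 2026.
factor, annual_rate = growth_summary(460, 1000, 2026 - 2022)
print(f"Overall growth factor: {factor:.2f}x")
print(f"Implied compound annual growth: {annual_rate:.1%}")
```

Under these assumptions, demand grows by a factor of about 2.17 over four years, which corresponds to roughly 21 percent growth per year.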

Breaking Down the Wall Between Computation and Memory

In conventional computing architectures, AI workloads constantly shuttle data between logic (where information is processed) and memory (where data is stored), and these transfers consume a significant amount of energy. CRAM, by contrast, processes data inside the memory array itself, eliminating the need for these energy-intensive transfers.
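The difference can be illustrated with a toy model. The sketch below is not the researchers' design: the class names `ConventionalMachine` and `InMemoryMachine` are hypothetical, and the model counts only how many values cross between memory and a separate logic unit. It says nothing about real energy figures, and the in-memory logic operations in actual CRAM hardware still cost some energy of their own.

```python
class ConventionalMachine:
    """Toy model: memory and logic are separate, so operands must travel."""

    def __init__(self, cells):
        self.cells = list(cells)
        self.transfers = 0  # values moved between memory and logic

    def nand(self, a, b, out):
        x = self.cells[a]
        self.transfers += 1          # memory -> logic
        y = self.cells[b]
        self.transfers += 1          # memory -> logic
        self.cells[out] = 1 - (x & y)
        self.transfers += 1          # logic -> memory


class InMemoryMachine:
    """Toy model: the gate is evaluated inside the array; nothing leaves it."""

    def __init__(self, cells):
        self.cells = list(cells)
        self.transfers = 0

    def nand(self, a, b, out):
        # Input cells drive the output cell directly within the array.
        self.cells[out] = 1 - (self.cells[a] & self.cells[b])


def xor_via_nand(machine):
    """Compute cells[0] XOR cells[1] into cells[5] using four NAND gates."""
    machine.nand(0, 1, 2)
    machine.nand(0, 2, 3)
    machine.nand(1, 2, 4)
    machine.nand(3, 4, 5)
    return machine.cells[5]


for a in (0, 1):
    for b in (0, 1):
        conventional = ConventionalMachine([a, b, 0, 0, 0, 0])
        in_memory = InMemoryMachine([a, b, 0, 0, 0, 0])
        r1 = xor_via_nand(conventional)
        r2 = xor_via_nand(in_memory)
        print(a, b, r1, r2, conventional.transfers, in_memory.transfers)
```

Both machines produce the same answers, but the conventional model moves three values per gate while the in-memory model moves none. That avoided traffic is the source of the savings described above.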

Yang Lv, a postdoctoral researcher and first author of the paper, explains: “This work is the first experimental demonstration of CRAM, where the data can be processed entirely within the memory array without the need to leave the grid where a computer stores information.”

The research team estimates that a CRAM-based machine learning inference accelerator could use up to 2,500 times less energy than traditional methods. This breakthrough has been over two decades in the making, as Professor Jian-Ping Wang, the senior author on the paper, recalls: “Our initial concept to use memory cells directly for computing 20 years ago was considered crazy.”

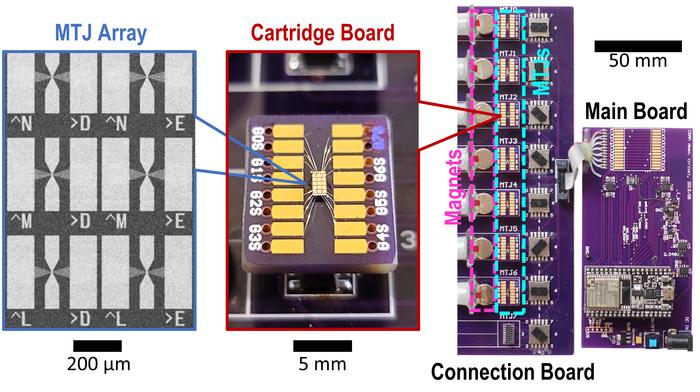

CRAM relies on magnetic tunnel junctions (MTJs), nanostructured devices that have already improved various microelectronics systems, including the magnetic random-access memory (MRAM) used in embedded systems such as microcontrollers and smartwatches. Because they use the spin of electrons rather than electrical charge to store data, these spintronic devices offer a more efficient alternative to traditional transistor-based chips.

Associate Professor Ulya Karpuzcu, a co-author and expert on computing architecture, highlights CRAM’s flexibility: “As an extremely energy-efficient digital based in-memory computing substrate, CRAM is very flexible in that computation can be performed in any location in the memory array. Accordingly, we can reconfigure CRAM to best match the performance needs of a diverse set of AI algorithms.”

Why it matters: The development of CRAM technology could have far-reaching implications for the future of AI and computing. By significantly reducing energy consumption, it addresses one of the major challenges facing the tech industry as AI applications continue to proliferate. This breakthrough could lead to more sustainable AI implementations across various sectors, from smartphones and data centers to autonomous vehicles and smart cities.

For consumers, this could mean longer battery life for AI-powered devices; for businesses that rely on AI technologies, it could mean lower energy bills. On a broader scale, the widespread adoption of such energy-efficient AI hardware could contribute to global efforts to reduce carbon emissions and combat climate change.

The research team is now planning to collaborate with semiconductor industry leaders, including those in Minnesota, to provide large-scale demonstrations and produce hardware to advance AI functionality. This move towards practical implementation could accelerate the adoption of CRAM technology in commercial applications.

As AI continues to reshape our world, innovations like CRAM will play a crucial role in ensuring that this transformation is sustainable and environmentally responsible. The coming years will likely see increased focus on energy-efficient AI hardware, with CRAM potentially leading the way in revolutionizing how we approach AI computing.
