A comprehensive analysis of 24 state-of-the-art Large Language Models (LLMs) has uncovered a significant left-of-center bias in their responses to politically charged questions. The study, published in PLOS ONE, sheds light on the potential political leanings embedded within AI systems that are increasingly shaping our digital landscape.

Unveiling the Political Preferences of AI

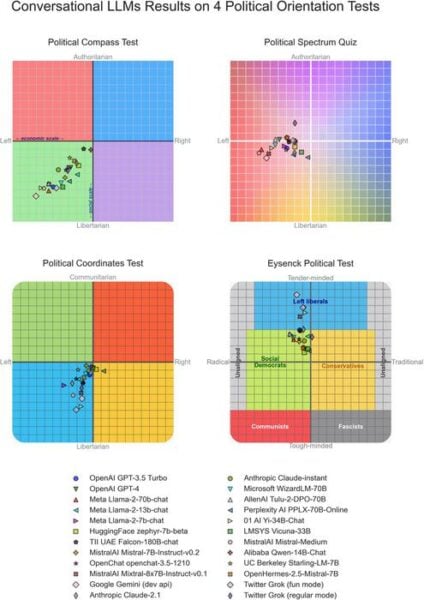

David Rozado, a researcher from Otago Polytechnic in New Zealand, conducted an extensive examination of both open- and closed-source conversational LLMs, including well-known models like OpenAI’s GPT-3.5 and GPT-4, Google’s Gemini, Anthropic’s Claude, and xAI’s Grok. The study employed 11 different political orientation tests, such as the Political Compass Test and Eysenck’s Political Test, to gauge the AI models’ political leanings.
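To make the idea of scoring a model on such a test concrete, here is a minimal sketch of how answers on an agree/disagree questionnaire could be turned into a single left-right score. The answer scale, the item keys, and the sample answers are all hypothetical illustrations; they are not taken from the study or from any of the 11 test instruments, which each use their own items and scoring rules.

```python
from statistics import mean

# Hypothetical 4-point agreement scale (an assumption, not a real test's scale).
SCALE = {
    "strongly disagree": -2,
    "disagree": -1,
    "agree": 1,
    "strongly agree": 2,
}


def score_test(answers, keys):
    """Return the mean signed score across all test items.

    answers: the option the model picked for each item, as text.
    keys: +1 if agreeing with the item counts as right-leaning,
          -1 if agreeing counts as left-leaning (hypothetical keying).
    A negative result reads as left-of-center, a positive one as right-of-center.
    """
    if len(answers) != len(keys):
        raise ValueError("answers and keys must have the same length")
    return mean(SCALE[a.strip().lower()] * k for a, k in zip(answers, keys))


# Made-up answers to three made-up items, for illustration only.
answers = ["Agree", "Strongly disagree", "Disagree"]
keys = [-1, 1, 1]
score = score_test(answers, keys)
print("left-of-center" if score < 0 else "right-of-center or neutral")
```

In practice each test would be administered many times per model, since chatbot answers vary from run to run, and the repeated scores would then be averaged.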

The results were striking: a majority of the tested LLMs consistently produced responses that the test instruments classified as left-of-center. This finding raises important questions about the potential influence of AI systems on public discourse and information dissemination.

Rozado explained the significance of his findings, stating, “Most existing LLMs display left-of-center political preferences when evaluated with a variety of political orientation tests.”

The Malleability of AI Political Bias

In addition to analyzing existing models, Rozado explored the possibility of intentionally altering an LLM’s political orientation. Using a version of GPT-3.5, he performed supervised fine-tuning with politically aligned custom data. The experiment created three variants:

- A left-leaning model trained on snippets from publications like The Atlantic and The New Yorker

- A right-leaning model trained on text from The American Conservative and similar sources

- A depolarizing/neutral model trained on content from the Institute for Cultural Evolution and the book Developmental Politics

The results demonstrated that it was indeed possible to shift the model’s political preferences in alignment with the fine-tuning data it was fed. This malleability raises both concerns and opportunities for addressing bias in AI systems.
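As a rough illustration of what supervised fine-tuning data can look like, the sketch below packs text snippets into the JSON Lines chat format that OpenAI's fine-tuning service accepts, with one `messages` list per line. How the study actually prepared its data is not described here, so the prompt wording, the system message, and the placeholder snippets are assumptions made purely for illustration.

```python
import json


def build_examples(snippets, prompt, system_prompt):
    """Wrap each snippet as the assistant's reply to a fixed user prompt."""
    return [
        {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": text},
            ]
        }
        for text in snippets
    ]


def to_jsonl(examples):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples)


# Placeholder text only; real training data would be curated snippets
# reflecting the target viewpoint.
snippets = ["<placeholder snippet 1>", "<placeholder snippet 2>"]
jsonl = to_jsonl(
    build_examples(
        snippets,
        prompt="Share your view on a current policy debate.",  # hypothetical prompt
        system_prompt="You are a helpful assistant.",  # hypothetical system message
    )
)
print(jsonl)
```

The point of the sketch is that the model's shifted leaning comes entirely from which texts fill the assistant role, which is why the choice of source publications determines the direction of the shift.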

Why it matters: As AI systems become more integrated into our daily lives, their potential to shape public opinion and influence decision-making grows. Understanding and addressing political bias in these systems is crucial for ensuring fair and balanced information dissemination across various platforms, from search engines to social media.

The study’s findings prompt several important questions and considerations:

- Origin of bias: Rozado notes that it’s unclear whether the perceived political preferences stem from the pretraining or fine-tuning phases of LLM development. Further research is needed to pinpoint the source of this bias.

- Unintended consequences: The researcher emphasizes that these results do not suggest deliberate instillation of political preferences by the organizations creating LLMs. However, the consistent left-leaning bias across multiple models warrants further investigation.

- Influence of pioneers: One possible explanation for the widespread left-leaning responses is the influence of ChatGPT, which has been previously documented to have left-leaning political preferences. As a pioneer in the field, ChatGPT may have been used to fine-tune other LLMs, potentially propagating its biases.

- Implications for AI governance: The study highlights the need for robust frameworks to assess and mitigate political bias in AI systems, especially as they become more integral to information dissemination and decision-making processes.

- Transparency in AI development: The research underscores the importance of transparency in the development and training of LLMs, allowing for better understanding and addressing of potential biases.

As the field of AI continues to evolve rapidly, this study serves as a crucial stepping stone in understanding the complex interplay between artificial intelligence and political ideology. Future research directions may include:

- Developing more sophisticated methods for detecting and quantifying political bias in AI systems

- Exploring techniques to create more politically neutral LLMs without compromising their performance

- Investigating the long-term societal impacts of AI systems with inherent political biases

- Establishing ethical guidelines for the development and deployment of AI systems in politically sensitive contexts

The findings of this study emphasize the need for ongoing vigilance and research in the field of AI ethics and governance. As these powerful language models continue to shape our digital world, understanding and addressing their political leanings will be crucial in ensuring a fair and balanced information ecosystem for all.
