Large language models achieved 81% accuracy in predicting neuroscience research outcomes, well above human experts' 63%, in a first-of-its-kind study that points to AI's potential to accelerate scientific discovery.

Published in Nature Human Behaviour

The vast scale of modern scientific literature has created a paradox: the wealth of available research has become so overwhelming that crucial insights often go unnoticed. Now, researchers at UCL have demonstrated that artificial intelligence can help solve this problem by identifying patterns across thousands of studies to predict research outcomes more accurately than human experts.

Led by Dr. Ken Luo of UCL’s Psychology & Language Sciences department, the research team developed BrainBench, a tool for evaluating how well large language models (LLMs) can forecast neuroscience results. When tested against 171 neuroscience experts, every AI model outperformed the human specialists, even those with the highest domain expertise.

The team’s specialized model, BrainGPT, achieved an even more impressive 86% accuracy after being trained specifically on neuroscience literature. Notably, like human experts, the AI models showed higher accuracy when they expressed greater confidence in their predictions.
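One simple way to check a claim like "more confident predictions are more accurate" is to split predictions at a confidence cutoff and compare accuracy in each group. The Python below is a minimal sketch under stated assumptions: each prediction carries a numeric confidence score (for a perplexity-based model, one option is the gap between the perplexities of the two candidate answers), while the function name, the 0.5 cutoff and the sample data are hypothetical. The study's own analysis may have been done differently.

```python
def accuracy_by_confidence(items, threshold):
    """Split predictions at a confidence threshold.

    items: list of (confidence, correct) pairs, where correct is a bool.
    Returns (accuracy below threshold, accuracy at or above threshold);
    a group with no items gets NaN.
    """
    low = [correct for conf, correct in items if conf < threshold]
    high = [correct for conf, correct in items if conf >= threshold]

    def acc(group):
        # True counts as 1 and False as 0, so the mean is the hit rate.
        return sum(group) / len(group) if group else float("nan")

    return acc(low), acc(high)


# Hypothetical predictions: (confidence score, was the prediction correct?)
items = [(0.1, False), (0.2, True), (0.3, False),
         (0.7, False), (0.8, True), (0.9, True)]
low_acc, high_acc = accuracy_by_confidence(items, 0.5)
print(f"low confidence: {low_acc:.2f}, high confidence: {high_acc:.2f}")
```

With this made-up data the high-confidence group scores higher, which is the pattern the study reports for both the AI models and the human experts.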

“Scientific progress often relies on trial and error, but each meticulous experiment demands time and resources,” explains Dr. Luo. “Even the most skilled researchers may overlook critical insights from the literature.” This technology could help researchers design more effective experiments and make more informed decisions about their research direction.

Professor Bradley Love, senior author from UCL, suggests these findings reveal something deeper about the nature of scientific research: “This success suggests that a great deal of science is not truly novel, but conforms to existing patterns of results in the literature. We wonder whether scientists are being sufficiently innovative and exploratory.”

Glossary

- Large Language Models (LLMs)

- AI systems trained on vast amounts of text that can process and generate human-like language

- BrainBench

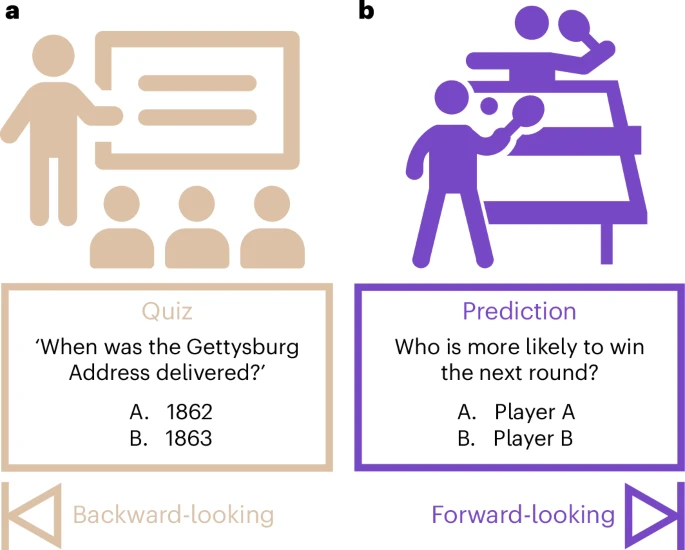

- A testing tool that compares real neuroscience study results with plausible but incorrect alternatives

- Perplexity Score

- A measure of how surprising or unexpected an AI model finds a particular piece of text based on its training
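To make the last two glossary entries concrete, here is a minimal Python sketch of how a perplexity score could decide a BrainBench-style item. It assumes the model assigns a natural-log probability to each token of two candidate abstracts. Perplexity is the exponential of the average negative log-probability per token, and the less surprising version is taken as the prediction. The function names and log-probability values are hypothetical illustrations, not the study's code or data.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp(mean negative log-probability per token).

    Lower values mean the model finds the text less surprising.
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def choose_version(logprobs_a, logprobs_b):
    """Pick the candidate the model finds less surprising ('A' or 'B').

    Ties go to 'B'; a real benchmark would need an explicit tie rule.
    """
    return "A" if perplexity(logprobs_a) < perplexity(logprobs_b) else "B"


# Hypothetical per-token log-probabilities for two versions of an abstract
# that differ only in the reported result (third token).
original = [-1.2, -0.8, -2.0, -0.5]
altered = [-1.2, -0.8, -3.1, -0.5]
print(choose_version(original, altered))  # prints "A"
```

Here the altered result makes one token much less likely, which raises that version's perplexity, so the sketch picks the original.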

Test Your Knowledge

What was the accuracy rate of the general AI models in predicting study results?

The general AI models achieved 81% accuracy.

How much better did human experts perform within their own areas of expertise?

Only slightly: within their own areas of expertise, experts achieved 66% accuracy, up from 63% overall but still well below the AI models.

How did the specialized BrainGPT model’s performance compare to its general-purpose version?

BrainGPT achieved 86% accuracy, compared with 83% for Mistral, the general-purpose model it was built on: a 3-percentage-point improvement from specialized training.

What does the AI’s success suggest about current scientific research, according to Professor Love?

The high prediction accuracy suggests much scientific research follows existing patterns rather than being truly novel, raising questions about whether scientists are being sufficiently innovative in their approach.

Further Information

- AI’s Role in Scientific Research: This article discusses how artificial intelligence, particularly large language models, is transforming scientific research methodologies and predictions.

- Predicting Scientific Outcomes with AI: A detailed overview of how AI tools can enhance the accuracy of predicting scientific results, including insights from recent studies.

- The Future of AI in Neuroscience: An exploration of the implications of AI advancements in neuroscience research and potential future applications.
