The prognosis for a patient with breast cancer is largely determined by the stage at which the cancer is diagnosed.

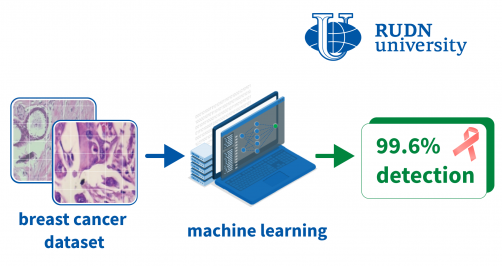

Histological examination is considered the gold standard for diagnosis. However, its result is influenced by subjective factors and by the quality of the sample, and such inaccuracies can lead to a misdiagnosis. A RUDN University mathematician and colleagues from China and Saudi Arabia have developed a machine learning model that could help recognize cancer in histological images more accurately. Thanks to additional modules that improved the “attention” of the neural network, accuracy reached nearly 100% (99.6%).

“Computer classification of histological images will reduce the burden on doctors and increase the accuracy of tests. Such technologies will improve the treatment and diagnosis of breast cancer. Deep learning methods have shown promising results in medical image analysis problems in recent years,” said Ammar Muthanna, Ph.D., Director of the Scientific Center for Modeling Wireless 5G Networks at RUDN University.

The mathematicians tested several convolutional neural networks, each supplemented with two convolutional attention modules: additional components that help the network focus on the most informative features and regions of an image. The models were trained and tested on the BreakHis dataset, which contains 7,909 histological images of breast tumor tissue from 82 patients, captured at four magnifications (40×, 100×, 200× and 400×).
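For readers curious how such an attention module works, here is a minimal NumPy sketch, assuming a CBAM-style design (the Convolutional Block Attention Module of Woo et al., 2018, which applies channel attention followed by spatial attention). The article does not describe the exact architecture, so the function names, the reduction ratio `r`, the 7×7 kernel and the random weights below are illustrative assumptions; in a real model the weights would be learned, and the module would sit between convolutional blocks of a backbone network.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """Reweight the channels of a (C, H, W) feature map.

    w1 (C//r, C) and w2 (C, C//r) form a shared two-layer MLP (hypothetical weights).
    """
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU hidden layer

    avg = feat.mean(axis=(1, 2))  # (C,) global average pooling
    mx = feat.max(axis=(1, 2))    # (C,) global max pooling
    weights = sigmoid(mlp(avg) + mlp(mx))  # one weight in (0, 1) per channel
    return feat * weights[:, None, None]


def spatial_attention(feat, kernel):
    """Reweight spatial positions; kernel has shape (2, k, k) with odd k."""
    desc = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(desc, ((0, 0), (pad, pad), (pad, pad)))
    h, w = feat.shape[1:]
    scores = np.empty((h, w))
    for i in range(h):  # naive "same" convolution over the two descriptor maps
        for j in range(w):
            scores[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    mask = sigmoid(scores)  # (H, W) weight in (0, 1) per image position
    return feat * mask[None, :, :], mask


def cbam(feat, w1, w2, kernel):
    """Channel attention followed by spatial attention, as in CBAM."""
    refined = channel_attention(feat, w1, w2)
    return spatial_attention(refined, kernel)


rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4
feat = rng.standard_normal((C, H, W))          # stand-in for a CNN feature map
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
out, mask = cbam(feat, w1, w2, kernel)
print(out.shape, mask.shape)  # (16, 8, 8) (8, 8)
```

The module never adds new information: it only scales existing features by weights between 0 and 1, so that channels and image regions the network has learned to consider important are emphasized and the rest are damped. The returned `mask` is what "focusing on significant areas of the image" amounts to in this sketch.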

The best results were shown by a model combining the DenseNet211 convolutional network with attention modules, which reached an accuracy of 99.6%. The researchers also noticed that the recognition of cancerous formations depends on scale: at different magnifications, the images differ in quality and the cancerous structures themselves look different. Therefore, in a real clinical application, a suitable magnification will need to be chosen.

“The attention modules in the model improved feature extraction and the overall performance of the model. With their help, the model focused on significant areas of the image and highlighted the necessary information. It shows the importance of attention mechanisms in the analysis of medical images,” said Ammar Muthanna.
